I recently determined that I should be using LVM-Thin instead of LVM for my cluster environment.

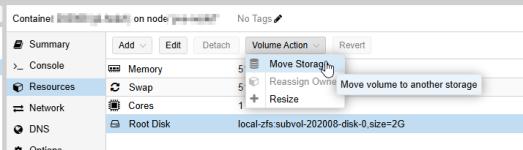

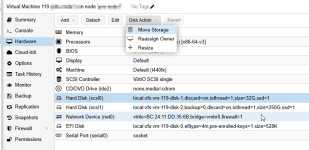

As such, I would like to drain and swap the datastores over to be LVM-Thin.

That said, I have some VMs that need minimal downtime. Is it possible to do a live migration from LVM to LVM-Thin without an inherent risk of data loss? I am fine if it pre-allocates the entire disk, as I'm less concerned about the size of these VMs than I am about the benefits of LVM-Thin for snapshots and PBS backups.

I suspect this isn't feasible, but I want to check first.
