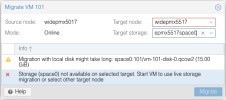

We have a two-node cluster that we upgraded to 8.4.0; both nodes are set up with local storage (directory). Prior to 8.4.0 we could migrate VMs between nodes by specifying the target storage, but this no longer works after the upgrade.

The "Storage not available on selected target." error doesn't go away after I select target storage, and the Migrate button stays inactive. (The VM is of course running.)
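For comparison with the GUI dialog, the CLI route for the same kind of migration would be `qm migrate` with a target storage. Below is a minimal sketch that only assembles and prints the command; the VM ID, node name, and storage name are placeholders I made up for illustration, not values from our cluster.

```shell
#!/bin/sh
# Placeholder values (assumptions; substitute your own VM ID, node, and storage).
VMID=100
TARGET_NODE=node2
TARGET_STORAGE=local

# Assemble an online-migration command for a VM with local disks.
# --with-local-disks migrates the local disks along with the VM;
# --targetstorage names the storage on the target node that receives them.
CMD="qm migrate $VMID $TARGET_NODE --online --with-local-disks --targetstorage $TARGET_STORAGE"

# Print the command for review; it would be run on the source node.
echo "$CMD"
```

Printing rather than executing keeps the sketch safe to paste; whether the CLI path behaves differently from the GUI on 8.4.0 is something that would still need to be checked.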

We have another cluster with the same storage setup, with nodes on 8.1.0 and 8.2.0 respectively, where migration works with no issues.

"pveversion -v" output from both nodes:

node1:

proxmox-ve: 8.4.0 (running kernel: 6.8.12-9-pve)

pve-manager: 8.4.1 (running version: 8.4.1/2a5fa54a8503f96d)

proxmox-kernel-helper: 8.1.1

proxmox-kernel-6.8: 6.8.12-9

proxmox-kernel-6.8.12-9-pve-signed: 6.8.12-9

ceph-fuse: 16.2.15+ds-0+deb12u1

corosync: 3.1.9-pve1

criu: 3.17.1-2+deb12u1

glusterfs-client: 10.3-5

ifupdown: residual config

ifupdown2: 3.2.0-1+pmx11

intel-microcode: 3.20250211.1~deb12u1

libjs-extjs: 7.0.0-5

libknet1: 1.30-pve2

libproxmox-acme-perl: 1.6.0

libproxmox-backup-qemu0: 1.5.1

libproxmox-rs-perl: 0.3.5

libpve-access-control: 8.2.2

libpve-apiclient-perl: 3.3.2

libpve-cluster-api-perl: 8.1.0

libpve-cluster-perl: 8.1.0

libpve-common-perl: 8.3.1

libpve-guest-common-perl: 5.2.2

libpve-http-server-perl: 5.2.2

libpve-network-perl: 0.11.2

libpve-rs-perl: 0.9.4

libpve-storage-perl: 8.3.6

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 6.0.0-1

lxcfs: 6.0.0-pve2

novnc-pve: 1.6.0-2

proxmox-backup-client: 3.4.0-1

proxmox-backup-file-restore: 3.4.0-1

proxmox-kernel-helper: 8.1.1

proxmox-mail-forward: 0.3.2

proxmox-mini-journalreader: 1.4.0

proxmox-widget-toolkit: 4.3.10

pve-cluster: 8.1.0

pve-container: 5.2.6

pve-docs: 8.4.0

pve-edk2-firmware: not correctly installed

pve-firewall: 5.1.1

pve-firmware: 3.15-3

pve-ha-manager: 4.0.7

pve-i18n: 3.4.2

pve-qemu-kvm: 9.2.0-5

pve-xtermjs: 5.5.0-2

qemu-server: 8.3.12

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

node2:

proxmox-ve: 8.4.0 (running kernel: 6.8.12-10-pve)

pve-manager: 8.4.1 (running version: 8.4.1/2a5fa54a8503f96d)

proxmox-kernel-helper: 8.1.1

proxmox-kernel-6.8.12-10-pve-signed: 6.8.12-10

proxmox-kernel-6.8: 6.8.12-10

proxmox-kernel-6.5.13-6-pve-signed: 6.5.13-6

proxmox-kernel-6.5: 6.5.13-6

proxmox-kernel-6.5.13-1-pve-signed: 6.5.13-1

ceph-fuse: 16.2.15+ds-0+deb12u1

corosync: 3.1.9-pve1

criu: 3.17.1-2+deb12u1

glusterfs-client: 10.3-5

ifupdown: residual config

ifupdown2: 3.2.0-1+pmx11

intel-microcode: 3.20250211.1~deb12u1

libjs-extjs: 7.0.0-5

libknet1: 1.30-pve2

libproxmox-acme-perl: 1.6.0

libproxmox-backup-qemu0: 1.5.1

libproxmox-rs-perl: 0.3.5

libpve-access-control: 8.2.2

libpve-apiclient-perl: 3.3.2

libpve-cluster-api-perl: 8.1.0

libpve-cluster-perl: 8.1.0

libpve-common-perl: 8.3.1

libpve-guest-common-perl: 5.2.2

libpve-http-server-perl: 5.2.2

libpve-network-perl: 0.11.2

libpve-rs-perl: 0.9.4

libpve-storage-perl: 8.3.6

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 6.0.0-1

lxcfs: 6.0.0-pve2

novnc-pve: 1.6.0-2

proxmox-backup-client: 3.4.1-1

proxmox-backup-file-restore: 3.4.1-1

proxmox-kernel-helper: 8.1.1

proxmox-mail-forward: 0.3.2

proxmox-mini-journalreader: 1.4.0

proxmox-offline-mirror-helper: 0.6.7

proxmox-widget-toolkit: 4.3.10

pve-cluster: 8.1.0

pve-container: 5.2.6

pve-docs: 8.4.0

pve-edk2-firmware: not correctly installed

pve-firewall: 5.1.1

pve-firmware: 3.15-3

pve-ha-manager: 4.0.7

pve-i18n: 3.4.2

pve-qemu-kvm: 9.2.0-5

pve-xtermjs: 5.5.0-2

qemu-server: 8.3.12

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.2.7-pve2