Hi,

I'm trying to lower write latency on Ceph SSDs, without success.

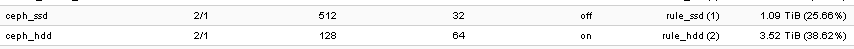

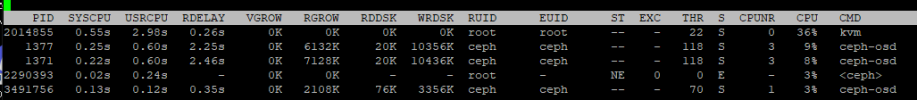

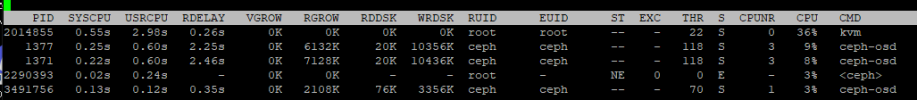

Environment: small production site, v 7.1.10.3 pc, 1 Gbps network. 13 entry-level SSDs in Ceph, of different sizes from 200 GB to 1 TB, older models. 10 Linux VMs, not I/O demanding. I had a similar problem with Elastic on HDDs, where the default options used too many threads; after lowering the number of threads from 10 to 1, all was fine. atop shows 118 threads for one OSD. Is that too many? How can I lower that number? I've tried lowering the threads on the OSD, but nothing changed.
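To cross-check the thread count outside atop, something like the sketch below could be used. It assumes a Linux host with `/proc` mounted and that the OSD daemons appear under the process name `ceph-osd`; the function names are only illustrative, not part of any Ceph tooling.

```python
import os


def parse_thread_count(status_text):
    """Return the integer from the 'Threads:' line of /proc/<pid>/status text."""
    for line in status_text.splitlines():
        if line.startswith("Threads:"):
            return int(line.split(":", 1)[1].strip())
    return None  # field not present


def osd_thread_counts(proc_root="/proc", name="ceph-osd"):
    """Map pid -> thread count for every process whose comm equals `name`.

    Assumption: the OSD processes are named 'ceph-osd'.
    """
    counts = {}
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # skip non-process entries
        try:
            with open(os.path.join(proc_root, entry, "comm")) as f:
                if f.read().strip() != name:
                    continue
            with open(os.path.join(proc_root, entry, "status")) as f:
                counts[int(entry)] = parse_thread_count(f.read())
        except OSError:
            continue  # process exited or is not readable
    return counts


if __name__ == "__main__":
    for pid, n in sorted(osd_thread_counts().items()):
        print(f"ceph-osd pid {pid}: {n} threads")
```

This only reports how many threads each OSD process has; it does not say whether that number is the cause of the latency.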

During the day, work runs without scrubbing or rebalancing.

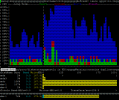

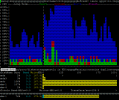

The picture shows very little I/O but a lot of waits, and the SSD is 100% busy. It does not always happen, but as soon as there is any I/O demand, everything becomes very slow for all VMs.

Please help

