Hello,

I recently ran into a problem with random read IOPS while running performance tests.

My test environment is: PVE 5.4 + Ceph 12.2.12

3 hosts, each with 1 SSD + 3 HDDs (the SSD holds the DB and WAL; the HDDs are the OSDs)

The KVM disk has cache = writeback enabled and uses the VirtIO SCSI single controller

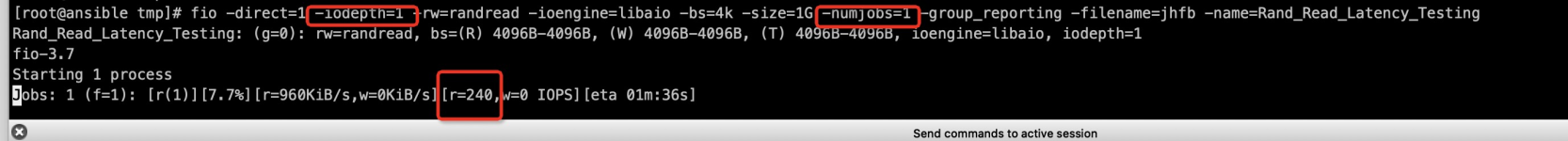

Random read latency test with iodepth = 1, numjobs = 1: the average read IOPS is 200+

As shown below:

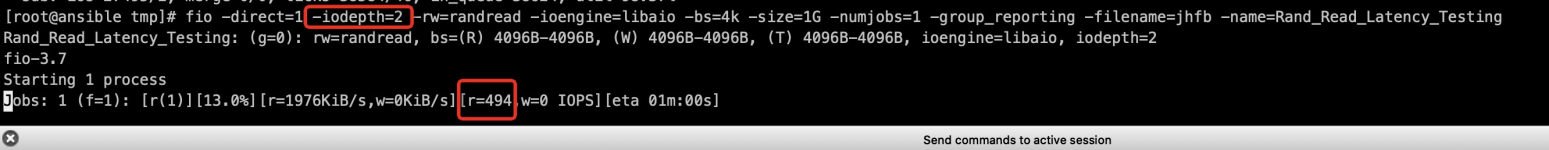

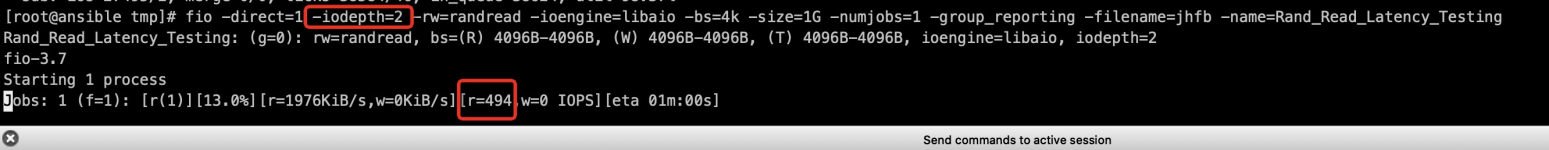

Random read latency test with iodepth = 2, numjobs = 1: the average read IOPS is 500+

As shown below:

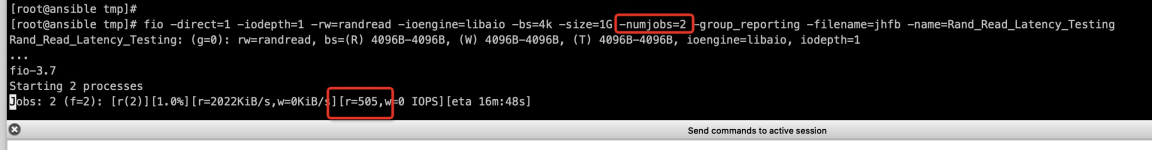

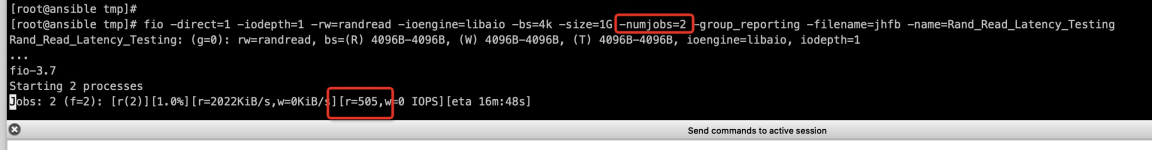

Random read latency test with iodepth = 1, numjobs = 2: the average read IOPS is 500+

As shown below:

From these tests, IOPS with a single outstanding I/O in a single thread appear to be capped at a fixed value (about 200), and they only go up when I add queue depth or jobs. Is this normal? If it is not, what can I adjust to improve it: the KVM configuration, the operating system, or Ceph itself?
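To make the question more concrete, here is a rough back-of-the-envelope check of the numbers above. It is only a sketch: it assumes fio keeps exactly iodepth × numjobs reads in flight for the whole run, and it uses the rounded figures (200 and 500) rather than the exact values from the screenshots. The function name `implied_latency_ms` is just something I made up for illustration. It applies Little's law (average latency = requests in flight / throughput) to estimate the per-read latency each result implies.

```python
def implied_latency_ms(iops: float, in_flight: int) -> float:
    """Little's law: average latency = requests in flight / throughput."""
    return in_flight / iops * 1000.0


# (label, iodepth, numjobs, approximate average read IOPS from the tests above)
runs = [
    ("iodepth=1, numjobs=1", 1, 1, 200),
    ("iodepth=2, numjobs=1", 2, 1, 500),
    ("iodepth=1, numjobs=2", 1, 2, 500),
]

for label, iodepth, numjobs, iops in runs:
    # Assumption: the queue is always full, so in-flight reads = iodepth * numjobs.
    in_flight = iodepth * numjobs
    latency = implied_latency_ms(iops, in_flight)
    print(f"{label}: ~{latency:.1f} ms per read")
```

If this estimate is right, every read takes roughly 4 to 5 ms no matter how I run the test, and the IOPS only rise because more reads are in flight at once. So my question is really whether that per-read latency is expected for this setup.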

Thanks a lot!
