Today I tried to upgrade a server (Hetzner EX101 with an Intel i9-13900) from PVE 8.2 to 8.3 via the web GUI. The server is not part of a cluster. I can provide the PVE license number if needed.

Hardware configuration: 2x 2 TB NVMe in a ZFS mirror (RAID1) for root, 2x 8 TB NVMe in a ZFS mirror (RAID1) for PBS datastores etc., 4x 32 GB RAM.

As usual, I first refreshed the package lists ("Refresh" button), then clicked the "Upgrade" button, which opened a shell. The packages were upgraded quickly and without any errors. Then, as usual, I ran `apt-get autoremove` to get rid of old packages (I think it removed some very old kernel versions). After I clicked the "Reboot" button for my node, the connection was lost. After an hour without any response (no ping, nothing), I ordered a Hetzner remote console.
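For anyone doing the same kind of upgrade: a minimal, read-only sketch of what could be checked before clicking "Reboot", i.e. which kernel is running, which kernels `proxmox-boot-tool` keeps on the boot partitions, and what the `apt-get autoremove` step would remove. It assumes a PVE 8 host whose ESPs are managed by `proxmox-boot-tool` (as on ZFS-on-root installs); the function names are my own and hypothetical.

```shell
#!/bin/sh
# Read-only pre-reboot check (a sketch; assumes a PVE 8 host whose boot
# partitions are managed by proxmox-boot-tool, as on ZFS-on-root installs).
# Nothing here installs or removes packages.
set -u

# Print the package names a simulated apt run would remove.
# Expects `apt-get -s ...` output on stdin; simulated removals start with "Remv".
removed_packages() {
    awk '$1 == "Remv" { print $2 }'
}

preflight() {
    echo "Running kernel: $(uname -r)"

    if command -v proxmox-boot-tool >/dev/null 2>&1; then
        # Kernels that proxmox-boot-tool selects for the ESPs.
        proxmox-boot-tool kernel list
    else
        echo "proxmox-boot-tool not found (not a PVE host?)" >&2
    fi

    if command -v apt-get >/dev/null 2>&1; then
        echo "Packages that 'apt-get autoremove' would remove:"
        # -s only simulates the run; nothing is actually removed.
        apt-get -s autoremove | removed_packages
    fi
}

preflight
```

If the list contains a kernel you want to keep as a fallback boot entry, `apt-mark manual <package>` stops `autoremove` from removing it.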

The remote console showed "no video".

After I triggered a reset via the Hetzner Robot web interface, the system tried to boot but showed the following error:

The system is completely stuck here: it does not react to Ctrl+Alt+Del sent via the remote KVM and only reboots when I trigger a reset via the Hetzner Robot GUI.

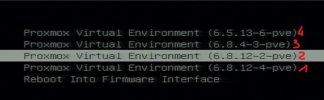

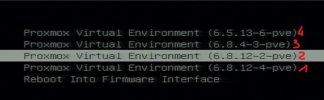

Then I tried booting the old kernel versions:

Entry no. 1 is the newest kernel, which is selected automatically if I do nothing. I've tried it several times; sometimes the system hangs at this screen:

Here is the output for no. 2:

No. 3 is stuck at this screen:

No. 4 is stuck here:

I also tried powering the system off completely and starting it again, but unfortunately this changed nothing.
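None of the four entries booted here, but for anyone whose older entry does come up: a sketch of pinning that kernel so later reboots keep using it instead of the newest one. `proxmox-boot-tool kernel pin` (with the optional `--next-boot`) is available on PVE 8; the version-string check and the helper names are hypothetical, and the pattern should be adjusted to what `proxmox-boot-tool kernel list` actually prints.

```shell
#!/bin/sh
# Sketch: pin a kernel that is known to boot. Assumes PVE 8 with
# proxmox-boot-tool; looks_like_pve_kernel and pin_kernel are hypothetical helpers.
set -u

# Succeeds if $1 looks like a PVE kernel version such as "6.8.12-4-pve".
# The exact pattern is an assumption.
looks_like_pve_kernel() {
    printf '%s\n' "$1" | grep -Eq '^[0-9]+\.[0-9]+\.[0-9]+-[0-9]+-pve$'
}

pin_kernel() {
    version=$1
    mode=${2:-}   # pass "test" to pin for the next boot only
    if ! looks_like_pve_kernel "$version"; then
        echo "unexpected kernel version string: $version" >&2
        return 1
    fi
    if ! command -v proxmox-boot-tool >/dev/null 2>&1; then
        echo "proxmox-boot-tool not found" >&2
        return 1
    fi
    if [ "$mode" = "test" ]; then
        # --next-boot applies the pin to the next boot only, a safe first try.
        proxmox-boot-tool kernel pin "$version" --next-boot
    else
        proxmox-boot-tool kernel pin "$version"
    fi
}

# Usage: pin-kernel.sh <version> [test]
if [ $# -ge 1 ]; then
    pin_kernel "$@"
fi
```

Once a fixed kernel is available, `proxmox-boot-tool kernel unpin` removes the pin again.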

Hint: I think the server was still running PVE 8.2 before this upgrade to the current version. I installed package upgrades a few times in the last months, but the last reboot before today (which is what actually loads a newly installed kernel) was 5 months ago, so today's reboot was the first to boot any of the kernels installed since then. Maybe that's the root of the problem here.
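Since several kernel updates can pile up between reboots, as described above, a small sketch that warns when the running kernel is older than the newest installed one. It assumes every installed kernel has a directory named after its version under `/lib/modules` and that GNU `sort -V` (version sort) is available; the function names are hypothetical.

```shell
#!/bin/sh
# Sketch: detect a "pending" kernel, i.e. one installed but not yet booted.
# Assumes /lib/modules/<version> exists per installed kernel and GNU sort -V.
set -u

# Print the highest version among the arguments.
newest_version() {
    printf '%s\n' "$@" | sort -V | tail -n 1
}

check_pending_reboot() {
    running=$(uname -r)
    # Word splitting on ls output is intended: kernel version names contain no spaces.
    newest=$(newest_version $(ls /lib/modules 2>/dev/null))
    if [ -z "$newest" ]; then
        echo "no kernels found under /lib/modules" >&2
        return 1
    fi
    if [ "$running" = "$newest" ]; then
        echo "Running the newest installed kernel ($running)."
    else
        echo "Running $running, newest installed is $newest:"
        echo "the next reboot will most likely switch kernels (unless one is pinned)."
    fi
}

check_pending_reboot
```

Running this after each upgrade makes it obvious when a reboot would jump to an untested kernel, so the reboot can be scheduled while a remote console is at hand.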

I tried mounting the system from the Hetzner Rescue Linux, but apparently its kernel is too new for OpenZFS to be compiled there, so I can't load the ZFS module and therefore can't mount my disks. I will notify Hetzner about that ZFS issue.
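For reference, once a rescue environment with working ZFS is available (an assumption; the Hetzner Rescue Linux did not provide one here, and a PVE installer ISO attached via the remote console might, which I have not tested), a sketch of importing the root pool read-only below a separate directory so that logs and `/boot` can be inspected. `rpool` and `rpool/ROOT/pve-1` are the PVE installer's default names and are assumptions for this host; they can be overridden via the environment.

```shell
#!/bin/sh
# Sketch: read-only import of the PVE root pool from a rescue system with ZFS.
# Pool/dataset names are the PVE installer defaults (assumption for this host).
set -u

POOL=${POOL:-rpool}
ROOT_DS=${ROOT_DS:-$POOL/ROOT/pve-1}
ALTROOT=${ALTROOT:-/mnt/pve-root}

import_readonly() {
    if ! command -v zpool >/dev/null 2>&1; then
        echo "zpool not available in this environment" >&2
        return 1
    fi
    mkdir -p "$ALTROOT"
    # -f: the pool was last imported by another system (the installed host).
    # -N: do not mount any datasets yet.
    # -R: altroot, so mountpoints land below $ALTROOT instead of on /.
    # readonly=on: nothing is written to the pool while investigating.
    zpool import -f -N -o readonly=on -R "$ALTROOT" "$POOL" || return 1
    # The root dataset's mountpoint "/" becomes $ALTROOT because of -R.
    zfs mount "$ROOT_DS"
}

case "${1:-}" in
    import) import_readonly ;;
    export) zpool export "$POOL" ;;
    *) echo "usage: $0 import|export" >&2 ;;
esac
```

After `import`, the installed system's files (e.g. logs under `/var/log`) are reachable below `$ALTROOT`; `export` releases the pool again before rebooting into the installed system.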

Fortunately this is only a backup server, so I will now try a fresh install of PVE 8.3.
