iSCSI Unknown

- Thread starter cjtg

In Proxmox when you add an iSCSI storage and the status shows as unknown, it usually means that Proxmox is not able to verify the availability of the target in the way it does for other storages like NFS or CIFS.

This is by design. The Proxmox storage plugin for iSCSI does not actively mount or check the target itself; it only provides discovery of LUNs, which can then be used as raw block devices or as a base for LVM/ZFS on top. Since there is no filesystem to probe and no real “mount”, Proxmox cannot give you a green/active status. Instead, it just marks the storage as unknown until you actually consume the LUNs.

So in short: unknown does not mean there is a problem. It just means Proxmox cannot automatically check whether the iSCSI target is healthy. To confirm the storage is working, run iscsiadm -m session on the host to verify that the session is established, and check that the LUNs appear under /dev/disk/by-id/.

If the LUNs are visible there, you can go ahead and create an LVM or ZFS volume on top of them inside Proxmox.
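The check described above can be scripted roughly as follows. This is only a sketch: count_sessions is a hypothetical helper name, and the line format in the comment is what open-iscsi typically prints, so adjust it if your output looks different.

```shell
#!/bin/sh
# Count established iSCSI sessions from `iscsiadm -m session` output on stdin.
# A session line typically looks like:
#   tcp: [1] 10.0.0.10:3260,1 iqn.2015-11.com.example:msa (non-flash)
# count_sessions is a hypothetical helper, not part of open-iscsi.
count_sessions() {
    # grep -c exits non-zero when nothing matches, so keep the function's status clean
    grep -c '^[a-z0-9]*: \[[0-9]*\] ' || true
}

# Usage on the Proxmox host (as root):
#   iscsiadm -m session | count_sessions
#   ls -l /dev/disk/by-id/
```

A result of 0 means no session is logged in, so the LUNs will not show up under /dev/disk/by-id/ either.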

Do you want me to show you how to test if your iSCSI target is actually connected and working from the Proxmox CLI?

Thanks for the response, please show me.

Yes, since you are using several IP connections to reach the same iSCSI storage you should definitely configure multipath. What happens now is that Proxmox sees each path as a separate disk, and if you don’t use multipath you risk confusion or even data corruption because the same LUN is accessible via different devices. Multipathd solves this by presenting a single device mapper path (for example /dev/mapper/mpathX) that automatically handles failover and load-balancing across all your active iSCSI sessions.
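To see whether the same LUN really does show up as several disks, you can compare the WWN of each block device. The snippet below is a sketch under that assumption: duplicate_luns is a hypothetical helper that reads "NAME WWN" pairs (as printed by lsblk -dn -o NAME,WWN) and lists every WWN that appears on more than one device.

```shell
#!/bin/sh
# Read "NAME WWN" pairs on stdin and print each WWN that is seen on more
# than one device, followed by those device names.
# duplicate_luns is a hypothetical helper name used only for illustration.
duplicate_luns() {
    awk 'NF == 2 { devs[$2] = devs[$2] " " $1; count[$2]++ }
         END { for (w in count) if (count[w] > 1) print w ":" devs[w] }' | sort
}

# Usage on the Proxmox host:
#   lsblk -dn -o NAME,WWN | duplicate_luns
```

Any WWN listed there is one LUN reachable over several paths, which is exactly the case multipath is meant to handle.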

The workflow is this: first verify your iSCSI session is established with iscsiadm -m session, then confirm the LUNs are visible under /dev/disk/by-id/. Next install and enable multipath if it’s not already present:

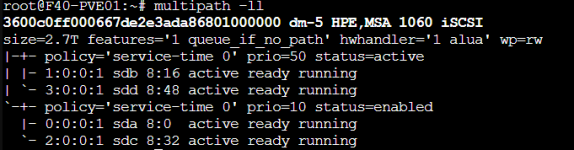

Then create a multipath configuration. Note that mpathconf --enable --with_multipathd y comes from the Red Hat multipath packaging and is normally not available on Debian-based Proxmox, where /etc/multipath.conf is usually written by hand. After that you can run multipath -ll to see if your multiple iSCSI paths are grouped into a single mapper device. That new device (for example /dev/mapper/mpatha) is the one you should use for creating the volume group instead of the raw /dev/sdX devices. So the commands would be:

apt install multipath-tools

systemctl enable multipathd --now

pvcreate /dev/mapper/mpatha

vgcreate pve-iscsi /dev/mapper/mpatha

Then in Proxmox you add an LVM storage pointing to the volume group pve-iscsi. This way you are safe against one iSCSI link going down, and you can also take advantage of throughput aggregation if your SAN supports load-balancing.
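If you prefer the CLI over the GUI for this step, something along these lines should work. Treat it as a sketch: the storage ID pve-iscsi and the content types are assumptions chosen to match the volume group name, and the command is only printed so you can review it before running it.

```shell
#!/bin/sh
# Storage ID and VG name. "pve-iscsi" matches the vgcreate step; the storage ID
# could be anything, reusing the VG name is just an assumption for this example.
STORAGE_ID="pve-iscsi"
VG_NAME="pve-iscsi"

# Build the command first so it can be reviewed (dry run, nothing is changed).
CMD="pvesm add lvm $STORAGE_ID --vgname $VG_NAME --content images,rootdir --shared 1"
echo "$CMD"

# On a Proxmox node, run the printed command as root, then check the result with:
#   pvesm status
```

The --shared 1 flag tells Proxmox that every node sees the same volume group, which only makes sense if all nodes are logged in to the same LUN.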

To answer your original doubt: seeing iSCSI as “unknown” in Proxmox is normal because it’s only a transport layer. The right approach is to put an LVM on top of the multipathed device, then add that LVM as a storage in Proxmox. That storage will show as active and usable. In your setup with multiple IP connections, multipath is strongly recommended to avoid inconsistencies and to get redundancy.

Thanks for the feedback, glad to hear you got it working. What you are experiencing with slow migration is expected on classic LVM, because Proxmox has to move the entire disk unless the storage is shared. With LVM-thin you would normally get snapshots and thin provisioning, but there is a limitation: LVM-thin cannot be shared across multiple nodes, so it’s not suitable on top of iSCSI if you want fast live migration.

Starting with Proxmox VE 9, you don’t actually need to switch to LVM-thin to solve this. The LVM plugin now supports snapshots through the snapshot-as-volume-chain feature. The right approach is to keep your iSCSI LUN with multipath, create a normal LVM volume group on top of it, and in the storage configuration mark it as shared and enable snapshot-as-volume-chain. This way Proxmox can use snapshots for backup and migration, and migrations will be much faster because the disks are on shared storage instead of being copied over the network.

So to answer your question: with iSCSI you don’t create a thin pool, you create a standard VG on the iSCSI device, then in Datacenter → Storage add it as LVM, set it to shared, and enable snapshot-as-volume-chain. That gives you the benefits you were looking for without moving to LVM-thin, which is unsupported for shared SAN setups.
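Once the storage is added, you can double-check what ended up in /etc/pve/storage.cfg. The helper below is a sketch: stanza_options is a hypothetical name, and the option spelling snapshot-as-volume-chain 1 is my assumption of how the setting appears in the file on VE 9, so verify it against your own config.

```shell
#!/bin/sh
# Print the options of one storage stanza from storage.cfg-style text on stdin.
# A stanza starts with an unindented "type: id" line, followed by indented options.
# stanza_options is a hypothetical helper, not a Proxmox tool.
stanza_options() {
    awk -v id="$1" '
        /^[a-z]+: / { inside = ($2 == id); next }  # header line selects or ends the stanza
        inside && NF { print $1, $2 }              # option name and value
    '
}

# Usage on a Proxmox node:
#   stanza_options pve-iscsi < /etc/pve/storage.cfg
# For the setup described here you would expect to see "shared 1" and,
# assuming that spelling, "snapshot-as-volume-chain 1" in the output.
```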

If you rely on Veeam, you need to stay on Proxmox VE 8 until Veeam officially supports VE 9, since at the moment it’s not compatible. As a certified Veeam instructor my advice is to avoid upgrading for now unless you are ready to move to Proxmox Backup Server, which already has full support on VE 9.

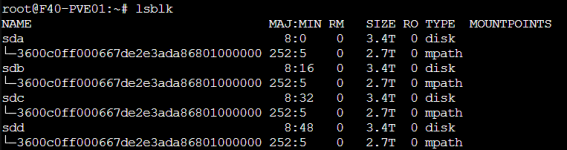

Thanks @desotech for your advice. As you may have noticed, I'm new to Proxmox. Last Friday I shut down my whole Proxmox infrastructure, which is still in testing, and since then I am not able to see the MSA LVM iSCSI volume in the Datacenter storage. I ran lsblk and saw the disk connections. Any suggestions?