Hi all

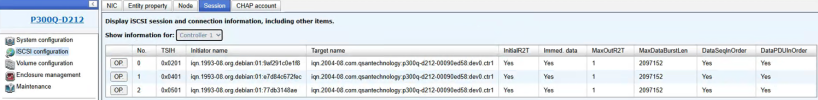

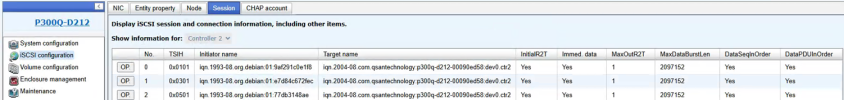

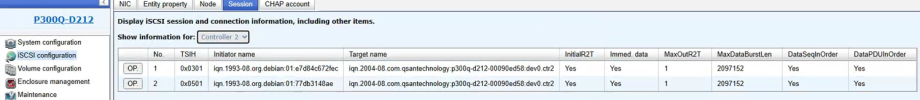

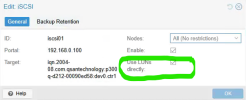

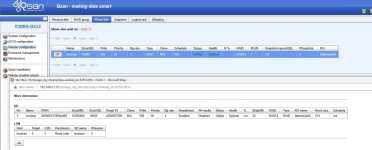

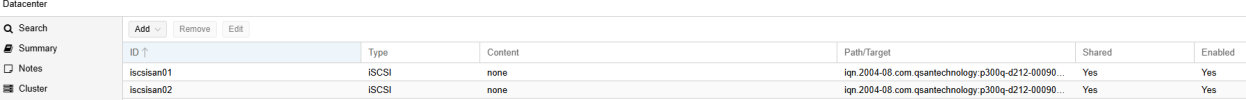

I'm trying to present the iSCSI storage to my Proxmox cluster to set up multipath, but even though the iSCSI portals of both controllers are mapped:

```
iscsiadm -m session
tcp: [1] 192.168.0.100:3260,0 iqn.2004-08.com.qsantechnology 300q-d212-00090ed58:dev0.ctr1 (non-flash)
tcp: [2] 192.168.0.200:3260,0 iqn.2004-08.com.qsantechnology 300q-d212-00090ed58:dev0.ctr2 (non-flash)
```

I get this:

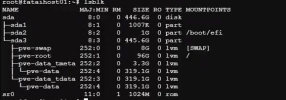

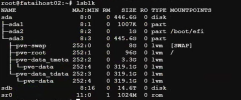

```
fdisk -l
Disk /dev/sda: 446.62 GiB, 479554568192 bytes, 936630016 sectors
Disk model: PERC H730P Adp
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: F7450559-4A48-4987-8682-481041407E12

Device      Start       End   Sectors   Size Type
/dev/sda1      34      2047      2014  1007K BIOS boot
/dev/sda2    2048   2099199   2097152     1G EFI System
/dev/sda3 2099200 936629982 934530783 445.6G Linux LVM

Partition 1 does not start on physical sector boundary.

Disk /dev/mapper/pve-swap: 8 GiB, 8589934592 bytes, 16777216 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk /dev/mapper/pve-root: 96 GiB, 103079215104 bytes, 201326592 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
```
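The `fdisk -l` output appears to list only the local PERC H730P disk and the `pve` LVM volumes, so no iSCSI LUN seems to have reached this host even though both sessions are logged in. With print level 3, `iscsiadm -m session -P 3` shows which SCSI disks are attached to each session. A minimal sketch for pulling those names out, assuming the usual `Attached scsi disk sdX  State: running` line format; the helper name `list_attached_disks` is hypothetical:

```shell
# Hypothetical helper (not part of open-iscsi): reads the output of
# `iscsiadm -m session -P 3` on stdin and prints the block device name
# from every "Attached scsi disk <name> ..." line.
list_attached_disks() {
    # Fields: $1="Attached" $2="scsi" $3="disk" $4=<device name>
    awk '/Attached scsi disk/ { print $4 }'
}
```

Usage: `iscsiadm -m session -P 3 | list_attached_disks`. If this prints nothing, the sessions are up but the array is most likely not presenting any LUN to this initiator's IQN, which is something to check in the LUN mapping on the storage side.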

gdisk gave this:

```
gdisk -l
GPT fdisk (gdisk) version 1.0.9

Problem opening -l for reading! Error is 2.
The specified file does not exist!
```
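As the message shows, gdisk ended up trying to open `-l` itself as a file (error 2 is ENOENT, "No such file or directory"): `-l` needs a device path after it, e.g. `gdisk -l /dev/sdb`. To find which disk, if any, is iSCSI-backed, `lsblk -dn -o NAME,TRAN` prints each disk with its transport, and iSCSI disks report `iscsi`. A minimal sketch of a filter for that output; the helper name `list_iscsi_disks` is hypothetical:

```shell
# Hypothetical helper: reads `lsblk -dn -o NAME,TRAN` output on stdin
# (one disk per line: name, then transport) and prints only the names
# of disks whose transport column is "iscsi".
list_iscsi_disks() {
    awk '$2 == "iscsi" { print $1 }'
}
```

Usage: `for d in $(lsblk -dn -o NAME,TRAN | list_iscsi_disks); do gdisk -l "/dev/$d"; done`. An empty result is consistent with the `fdisk -l` output above, where only the local disk shows up.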

I'm trying to follow https://pve.proxmox.com/wiki/ISCSI_Multipath, but the drive does not appear.
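Multipath only has something to merge once the same LUN shows up as one disk per path (here, one via each controller portal), and those disks report the same WWID, which can be read for example with `/lib/udev/scsi_id -g -u -d /dev/sdX`. A minimal sketch that checks this grouping, assuming "device WWID" pairs as input; the helper name `group_paths_by_wwid` is hypothetical and this is only a sanity check, not what multipathd itself runs:

```shell
# Hypothetical helper: reads "<device> <wwid>" pairs on stdin, one per line,
# and prints every WWID seen on two or more devices, followed by those
# devices. Such devices are candidate paths to the same LUN.
group_paths_by_wwid() {
    awk '
        { paths[$2] = paths[$2] " " $1; count[$2]++ }
        END { for (w in count) if (count[w] >= 2) print w paths[w] }
    '
}
```

Usage, with `sdb` and `sdc` as placeholder device names: `for d in sdb sdc; do echo "$d $(/lib/udev/scsi_id -g -u -d /dev/$d)"; done | group_paths_by_wwid`. No output means there is no LUN visible over both paths yet.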

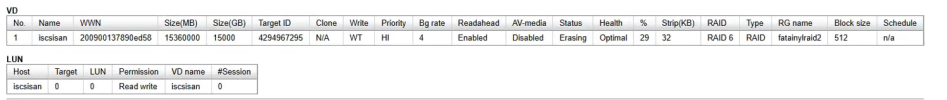

The volume is RAID-6, 512-byte blocks, 64-bit.

Any suggestions?

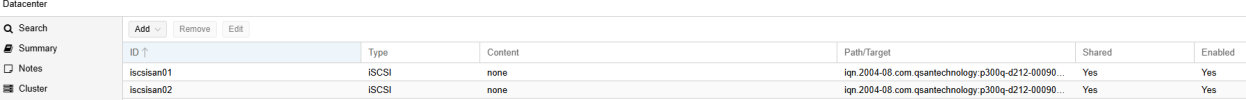
