Hello everyone. I have 3 Proxmox Nodes connected to an HP MSA 1040 storage array. These 3 Nodes are in a cluster and can see the 4 LUNs of the storage without problems. We recently purchased a new Dell PowerEdge R550 server, and I added it to the existing cluster. However, the new Node cannot access the storage LUNs, as you can see in the screenshot below:

I will show below some checks I have already performed.

The /etc/pve/storage.cfg file from the new Node that cannot access the Storage:

Code:

root@pmx:~# cat /etc/pve/storage.cfg

dir: local

path /var/lib/vz

content vztmpl,iso

prune-backups keep-last=1

shared 1

lvmthin: local-lvm

thinpool data

vgname pve

content images,rootdir

iscsi: HP_iSCSI

portal 172.16.0.101

target iqn.1986-03.com.hp:storage.msa1040.14471ea67b

content images

nodes pve2,master,pve,pmx

lvm: ST_1

vgname lvm_hp

base HP_iSCSI:0.0.3.scsi-SHP_MSA_1040_SAN_00c0ff25ba360000e611ba5c01000000

content rootdir,images

nodes pve2,master,pve,pmx

saferemove 0

shared 1

lvm: ST_2

vgname lvm_hp_2

base HP_iSCSI:0.0.1.scsi-SHP_MSA_1040_SAN_00c0ff25ba360000da3bf95502000000

content rootdir,images

nodes pmx,pve2,master,pve

saferemove 0

shared 1

lvm: ST_3

vgname lvm_hp_3

base HP_iSCSI:0.0.2.scsi-SHP_MSA_1040_SAN_00c0ff25ba360000db3bf95501000000

content rootdir,images

nodes master,pve,pve2,pmx

saferemove 0

shared 1

lvm: ST_4

vgname lvm_hp_4

base HP_iSCSI:0.0.0.scsi-SHP_MSA_1040_SAN_00c0ff25ba360000da3bf95501000000

content images,rootdir

nodes pmx,pve2,pve,master

saferemove 0

shared 1

pbs: PBS-Cloud

datastore ALJ

server proxmox.rstech.solutions

content backup

fingerprint f3:1c:7b:b1:7c:c1:2e:c4:89:a3:91:4e:61:a7:e4:9f:0e:72:ac:38:10:e9:c0:8c:ae:65:8b:bd:8c:5f:06:bc

nodes pve2,master,pve

prune-backups keep-all=1

username backup_alj@pbs

pbs: PBS-ALJ_interno

datastore backup_interno

server 192.168.0.21

content backup

fingerprint 1f:53:a4:7c:e6:3d:7e:ec:e6:ec:6a:06:9a:e5:4c:b2:42:a1:ec:2d:d7:a3:46:be:2b:25:96:8c:ea:7d:c9:d8

prune-backups keep-all=1

username backup_alj@pbs

pbs: PBS-ALJ

datastore backup3

server 192.168.0.21

content backup

fingerprint 1f:53:a4:7c:e6:3d:7e:ec:e6:ec:6a:06:9a:e5:4c:b2:42:a1:ec:2d:d7:a3:46:be:2b:25:96:8c:ea:7d:c9:d8

prune-backups keep-all=1

username backup_alj@pbs

The /etc/pve/storage.cfg file from the old Node that accesses the Storage normally:

Code:

root@master:~# cat /etc/pve/storage.cfg

dir: local

path /var/lib/vz

content vztmpl,iso

prune-backups keep-last=1

shared 1

lvmthin: local-lvm

thinpool data

vgname pve

content images,rootdir

iscsi: HP_iSCSI

portal 172.16.0.101

target iqn.1986-03.com.hp:storage.msa1040.14471ea67b

content images

nodes pve2,master,pve,pmx

lvm: ST_1

vgname lvm_hp

base HP_iSCSI:0.0.3.scsi-SHP_MSA_1040_SAN_00c0ff25ba360000e611ba5c01000000

content rootdir,images

nodes pve2,master,pve,pmx

saferemove 0

shared 1

lvm: ST_2

vgname lvm_hp_2

base HP_iSCSI:0.0.1.scsi-SHP_MSA_1040_SAN_00c0ff25ba360000da3bf95502000000

content rootdir,images

nodes pmx,pve2,master,pve

saferemove 0

shared 1

lvm: ST_3

vgname lvm_hp_3

base HP_iSCSI:0.0.2.scsi-SHP_MSA_1040_SAN_00c0ff25ba360000db3bf95501000000

content rootdir,images

nodes master,pve,pve2,pmx

saferemove 0

shared 1

lvm: ST_4

vgname lvm_hp_4

base HP_iSCSI:0.0.0.scsi-SHP_MSA_1040_SAN_00c0ff25ba360000da3bf95501000000

content images,rootdir

nodes pmx,pve2,pve,master

saferemove 0

shared 1

pbs: PBS-Cloud

datastore ALJ

server proxmox.rstech.solutions

content backup

fingerprint f3:1c:7b:b1:7c:c1:2e:c4:89:a3:91:4e:61:a7:e4:9f:0e:72:ac:38:10:e9:c0:8c:ae:65:8b:bd:8c:5f:06:bc

nodes pve2,master,pve

prune-backups keep-all=1

username backup_alj@pbs

pbs: PBS-ALJ_interno

datastore backup_interno

server 192.168.0.21

content backup

fingerprint 1f:53:a4:7c:e6:3d:7e:ec:e6:ec:6a:06:9a:e5:4c:b2:42:a1:ec:2d:d7:a3:46:be:2b:25:96:8c:ea:7d:c9:d8

prune-backups keep-all=1

username backup_alj@pbs

pbs: PBS-ALJ

datastore backup3

server 192.168.0.21

content backup

fingerprint 1f:53:a4:7c:e6:3d:7e:ec:e6:ec:6a:06:9a:e5:4c:b2:42:a1:ec:2d:d7:a3:46:be:2b:25:96:8c:ea:7d:c9:d8

prune-backups keep-all=1

username backup_alj@pbs

The pvesm status command from the new Node that cannot access the Storage:

Code:

root@pmx:~# pvesm status

Name Type Status Total Used Available %

HP_iSCSI iscsi active 0 0 0 0.00%

PBS-ALJ pbs active 7751272176 4143885528 3216668988 53.46%

PBS-ALJ_interno pbs active 1900570232 1369699328 434253432 72.07%

PBS-Cloud pbs disabled 0 0 0 N/A

ST_1 lvm inactive 0 0 0 0.00%

ST_2 lvm inactive 0 0 0 0.00%

ST_3 lvm inactive 0 0 0 0.00%

ST_4 lvm inactive 0 0 0 0.00%

local dir active 98497780 8338656 85109576 8.47%

local-lvm lvmthin active 334610432 0 334610432 0.00%

The pvesm status command from the old Node that accesses the Storage normally:

Code:

Name Type Status Total Used Available %

HP_iSCSI iscsi active 0 0 0 0.00%

PBS-ALJ pbs active 7751272176 4143885528 3216668988 53.46%

PBS-ALJ_interno pbs active 1900570232 1369699356 434253404 72.07%

PBS-Cloud pbs active 5813177436 5116112340 404022636 88.01%

ST_1 lvm active 1946771456 1625292800 321478656 83.49%

ST_2 lvm active 1953120256 1887436800 65683456 96.64%

ST_3 lvm active 1953120256 1205862400 747257856 61.74%

ST_4 lvm active 1953120256 1807745024 145375232 92.56%

local dir active 98559220 33138412 60371260 33.62%

local-lvm lvmthin active 392933376 0 392933376 0.00%

The iscsiadm -m node command from the new Node that cannot access the Storage:

Code:

root@pmx:~# iscsiadm -m node

172.16.0.101:3260,1 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

172.16.0.102:3260,3 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

172.16.0.103:3260,2 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

172.16.0.104:3260,4 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

The iscsiadm -m node command from the old Node that accesses the Storage normally:

Code:

root@master:~# iscsiadm -m node

192.168.0.33:3260,1 iqn.1992-04.com.emc:storage.vmstor.stjvl-1

192.168.0.33:3260,1 iqn.1992-04.com.emc:storage.vmstor.stjvl-3

192.168.0.33:3260,1 iqn.1992-04.com.emc:storage.vmstor.stjvl-2

192.168.0.31:3260,1 iqn.2012-07.com.lenovoemc:storage.ix4-300d.st-emc

172.16.0.31:3260,1 iqn.2012-07.com.lenovoemc:storage.ix4-300d.st-emc

192.168.1.252:3260,3 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

192.168.1.253:3260,2 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

172.16.0.102:3260,3 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

192.168.0.243:3260,2 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

192.168.0.245:3260,1 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

192.168.0.244:3260,4 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

192.168.0.232:3260,3 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

172.16.0.3:3260,2 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

192.168.1.251:3260,1 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

192.168.0.233:3260,2 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

192.168.1.254:3260,4 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

172.16.0.104:3260,4 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

192.168.0.234:3260,4 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

172.16.0.101:3260,1 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

172.16.0.4:3260,4 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

192.168.0.231:3260,1 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

172.16.0.2:3260,3 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

192.168.0.246:3260,3 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

172.16.0.103:3260,2 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

172.16.0.1:3260,1 iqn.1986-03.com.hp:storage.msa1040.14471ea67b

The iscsiadm -m session command from the new Node that cannot access the Storage:

Code:

root@pmx:~# iscsiadm -m session

tcp: [1] 172.16.0.104:3260,4 iqn.1986-03.com.hp:storage.msa1040.14471ea67b (non-flash)

tcp: [2] 172.16.0.101:3260,1 iqn.1986-03.com.hp:storage.msa1040.14471ea67b (non-flash)

tcp: [3] 172.16.0.102:3260,3 iqn.1986-03.com.hp:storage.msa1040.14471ea67b (non-flash)

tcp: [4] 172.16.0.103:3260,2 iqn.1986-03.com.hp:storage.msa1040.14471ea67b (non-flash)

The iscsiadm -m session command from the old Node that accesses the Storage normally:

Code:

root@master:~# iscsiadm -m session

tcp: [3] 172.16.0.101:3260,1 iqn.1986-03.com.hp:storage.msa1040.14471ea67b (non-flash)

The pvesm list [iscsi_storage_name] command from the new Node that cannot access the Storage:

Code:

root@pmx:~# pvesm list ST_1

Volid Format Type Size VMID

root@pmx:~# pvesm list ST_2

Volid Format Type Size VMID

root@pmx:~# pvesm list ST_3

Volid Format Type Size VMID

root@pmx:~# pvesm list ST_4

Volid Format Type Size VMID

The pvesm list [iscsi_storage_name] command from the old Node that accesses the Storage normally:

Code:

root@master:~# pvesm list ST_1

Volid Format Type Size VMID

ST_1:vm-106-disk-0 raw images 161061273600 106

ST_1:vm-106-disk-1 raw images 644245094400 106

ST_1:vm-108-disk-0 raw images 429496729600 108

ST_1:vm-110-disk-0 raw images 322122547200 110

ST_1:vm-111-disk-0 raw images 107374182400 111

root@master:~# pvesm list ST_2

Volid Format Type Size VMID

ST_2:vm-104-disk-0 raw images 214748364800 104

ST_2:vm-112-disk-0 raw images 644245094400 112

ST_2:vm-114-disk-0 raw images 107374182400 114

ST_2:vm-203-disk-0 raw images 536870912000 203

ST_2:vm-204-disk-0 raw images 214748364800 204

ST_2:vm-212-disk-0 raw images 214748364800 212

root@master:~# pvesm list ST_3

Volid Format Type Size VMID

ST_3:vm-105-disk-0 raw images 644245094400 105

ST_3:vm-105-disk-1 raw images 214748364800 105

ST_3:vm-107-disk-0 raw rootdir 53687091200 107

ST_3:vm-113-disk-0 raw images 214748364800 113

ST_3:vm-113-disk-1 raw images 107374182400 113

root@master:~# pvesm list ST_4

Volid Format Type Size VMID

ST_4:vm-100-disk-0 raw images 214748364800 100

ST_4:vm-101-disk-0 raw images 214748364800 101

ST_4:vm-109-disk-0 raw images 1099511627776 109

ST_4:vm-213-disk-0 raw images 214748364800 213

ST_4:vm-213-disk-1 raw images 107374182400 213

The pvscan command on the new Node that cannot access the Storage:

Code:

root@pmx:~# pvscan

PV /dev/sdb3 VG pve lvm2 [445.62 GiB / 16.00 GiB free]

PV /dev/sda VG local-vd1 lvm2 [14.55 TiB / 14.55 TiB free]

Total: 2 [<14.99 TiB] / in use: 2 [<14.99 TiB] / in no VG: 0 [0 ]

The pvscan command on the old Node that accesses the Storage normally:

Code:

root@master:~# pvscan

PV /dev/sdp VG lvm_hp lvm2 [1.81 TiB / <306.59 GiB free]

PV /dev/sdn VG lvm_hp_3 lvm2 [<1.82 TiB / 712.64 GiB free]

PV /dev/sdk VG lvm_hp_2 lvm2 [<1.82 TiB / 62.64 GiB free]

PV /dev/sdd VG lvm_hp_4 lvm2 [<1.82 TiB / 138.64 GiB free]

PV /dev/sda3 VG pve lvm2 [<558.38 GiB / <16.00 GiB free]

Total: 5 [<7.82 TiB] / in use: 5 [<7.82 TiB] / in no VG: 0 [0 ]

Additional information: the Storage IP addresses are:

- 172.16.0.101

- 172.16.0.102

- 172.16.0.103

- 172.16.0.104

The IP address of the old Node that accesses the Storage is 172.16.0.11.

The IP address of the new Node that cannot access the Storage is 172.16.0.17. This new Node can successfully ping all 4 Storage IP addresses:

Code:

root@pmx:~# ping 172.16.0.101

PING 172.16.0.101 (172.16.0.101) 56(84) bytes of data.

64 bytes from 172.16.0.101: icmp_seq=1 ttl=64 time=0.407 ms

64 bytes from 172.16.0.101: icmp_seq=2 ttl=64 time=0.313 ms

64 bytes from 172.16.0.101: icmp_seq=3 ttl=64 time=0.294 ms

64 bytes from 172.16.0.101: icmp_seq=4 ttl=64 time=0.295 ms

64 bytes from 172.16.0.101: icmp_seq=5 ttl=64 time=0.316 ms

^C

--- 172.16.0.101 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4088ms

rtt min/avg/max/mdev = 0.294/0.325/0.407/0.041 ms

The permissions within the HP MSA 1040 Storage are correctly granted, in the same way they were applied to the other Nodes in the Cluster:

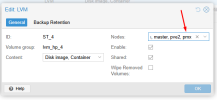

Within Proxmox, the permissions for the new Node were also granted for the 4 LUN units:

I don't know what else to try. The storage units are not recognized on the new Proxmox Node:

Can anyone help me? I would greatly appreciate it if you could help me solve this issue.
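
To summarize the pvscan comparison above in one place, here is a small sketch that checks which of the volume groups named in storage.cfg (lvm_hp, lvm_hp_2, lvm_hp_3, lvm_hp_4) appear in a node's pvscan output. The pvscan text is pasted from the new Node's output above; the `missing_vgs` function and the variable names are only illustrative helpers I made up for this post, not something Proxmox or LVM provides.

```shell
#!/bin/sh
# Volume groups that storage.cfg expects for ST_1..ST_4 (from the config above).
expected="lvm_hp lvm_hp_2 lvm_hp_3 lvm_hp_4"

# pvscan output pasted from the new Node (pmx). On a live node this could
# presumably be replaced with: pvscan_out=$(pvscan)
pvscan_out='PV /dev/sdb3 VG pve lvm2 [445.62 GiB / 16.00 GiB free]
PV /dev/sda VG local-vd1 lvm2 [14.55 TiB / 14.55 TiB free]'

# Hypothetical helper: print every expected VG that is absent from pvscan_out.
missing_vgs() {
    for vg in $expected; do
        # In pvscan lines, field 3 is the literal "VG" and field 4 is the VG name.
        if ! printf '%s\n' "$pvscan_out" |
            awk -v vg="$vg" '$3 == "VG" && $4 == vg { found = 1 } END { exit !found }'
        then
            echo "missing: $vg"
        fi
    done
}

missing_vgs
```

With the new Node's output, all four volume groups are reported as missing, which matches the "inactive" status of ST_1 to ST_4 in pvesm status even though the iSCSI sessions are logged in.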