Hello,

I have a serious problem with a VM that was installed by a customer. It boots, but whenever we run an operation that needs disk space, it crashes and goes into the "io-error" state.

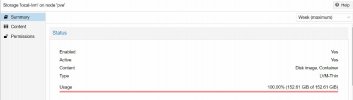

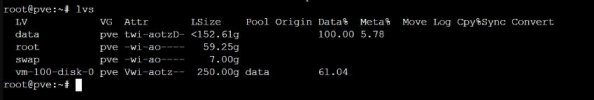

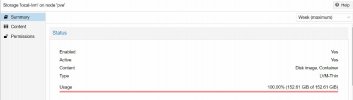

I noticed that local-lvm is full, but I can't understand why it is so small.

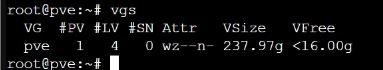

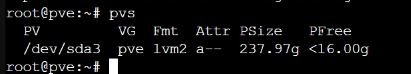

The physical machine has a 250 GB hard disk, but local-lvm is only 152 GB, and it's full. I don't remember allocating only this much space to it. How can I add more space?
