Hi,

we are running a 2 node cluster (with a qdevice to keep quorum) for our internal development environment. Both nodes are running proxmox 7.4-16, with each node having local RAID 5 SSD storage. VM's are deployed using a gitlab pipeline that will try to deploy 2 VM's simultaniously through the terraform module. These VM's make use of a template and the deployment process consists of cloning, updating and starting the VM.

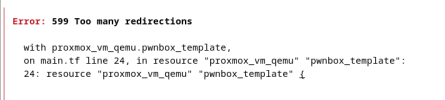

Every now and then however, the pipeline fails with a "599 Too many redirections" error:

Correspondingly in the pveproxy log:

When actually inspecting the task logs however, these seem fine and return with "TASK OK", for example:

From looking at other 599 errors in the this forum this appears to be I/O related. However, when checking the I/O latency graph of the corresponding node, it remains below 1% for the entire timeframe. CPU utilization, Server Load and Network Traffic all remain well under the maximum (below 20%) capacity during the deployment timeframe. Additionally, the disks are 4x SSD in a RAID 5 array with ~17k write IOPS during testing and higher read. The network is 1GB/s and latencies are low.

Checking the journalctl for pveproxy, pvedaemon and corosync there are no obvious errors present. I do see this error in the pveproxy log, but that is 40 minutes later and appears unrelated:

I'm starting to run out of ideas on how to remediate this issue. Any suggestions would be much appreciated!

For completeness sake - here are all the 599 errors in the log for the API/terraform user today:

we are running a 2 node cluster (with a qdevice to keep quorum) for our internal development environment. Both nodes are running proxmox 7.4-16, with each node having local RAID 5 SSD storage. VM's are deployed using a gitlab pipeline that will try to deploy 2 VM's simultaniously through the terraform module. These VM's make use of a template and the deployment process consists of cloning, updating and starting the VM.

Every now and then however, the pipeline fails with a "599 Too many redirections" error:

Code:

2023-11-09T11:26:23.294Z [INFO] provider.terraform-provider-proxmox_v2.9.14: 2023/11/09 11:26:23 599 Too many redirections: timestamp=2023-11-09T11:26:23.294ZCorrespondingly in the pveproxy log:

Code:

IP_ADDRESS - USERNAME@REALM [09/11/2023:11:26:23 +0000] "GET /api2/json/nodes/s11563090/tasks/UPID:s11563090:0027DDCA:0CDD1941:654CC1C5:qmconfig:9111919:USERNAME@REALM:/status HTTP/1.1" 599 -

IP_ADDRESS - USERNAME@REALM [09/11/2023:11:26:23 +0000] "GET /api2/json/nodes/s11563090/tasks/UPID:s11563090:0027E4D5:0CDD1D29:654CC1CF:qmclone:60001:USERNAME@REALM:/status HTTP/1.1" 599 -When actually inspecting the task logs however, these seem fine and return with "TASK OK", for example:

Code:

update VM 9111919: -agent 1 -bios seabios -ciuser cybertron -cores 2 -cpu host -description Acting as: elastic -hotplug network,disk,usb -ipconfig0 ip=dhcp -kvm 1 -memory 49152 -name elastic-node-1.DOMAIN -net0 virtio=A6:CC:47:EC:DF:33,bridge=vnet20,rate=500 -numa 0 -onboot 1 -ostype l26 -scsi0 local:10,iops_max=500,mbps=512,format=qcow2,iops=500,ssd=1 -scsi1 local:450,format=qcow2,mbps=512,iops_max=3000,iops=3000,ssd=1 -scsihw virtio-scsi-single -sockets 2 -sshkeys SSHKEYS -tablet 1 -vcpus 4

Formatting '/var/lib/vz/images/9111919/vm-9111919-disk-1.qcow2', fmt=qcow2 cluster_size=65536 extended_l2=off preallocation=metadata compression_type=zlib size=10737418240 lazy_refcounts=off refcount_bits=16

scsi0: successfully created disk 'local:9111919/vm-9111919-disk-1.qcow2,iops=500,iops_max=500,mbps=512,size=10G,ssd=1'

Formatting '/var/lib/vz/images/9111919/vm-9111919-disk-2.qcow2', fmt=qcow2 cluster_size=65536 extended_l2=off preallocation=metadata compression_type=zlib size=483183820800 lazy_refcounts=off refcount_bits=16

scsi1: successfully created disk 'local:9111919/vm-9111919-disk-2.qcow2,iops=3000,iops_max=3000,mbps=512,size=450G,ssd=1'

TASK OKFrom looking at other 599 errors in the this forum this appears to be I/O related. However, when checking the I/O latency graph of the corresponding node, it remains below 1% for the entire timeframe. CPU utilization, Server Load and Network Traffic all remain well under the maximum (below 20%) capacity during the deployment timeframe. Additionally, the disks are 4x SSD in a RAID 5 array with ~17k write IOPS during testing and higher read. The network is 1GB/s and latencies are low.

Checking the journalctl for pveproxy, pvedaemon and corosync there are no obvious errors present. I do see this error in the pveproxy log, but that is 40 minutes later and appears unrelated:

Code:

Nov 09 12:21:05 s11563090 pveproxy[2887854]: proxy detected vanished client connection

Nov 09 12:21:05 s11563090 pveproxy[2887854]: proxy detected vanished client connection

Nov 09 12:21:05 s11563090 pveproxy[2912387]: proxy detected vanished client connectionI'm starting to run out of ideas on how to remediate this issue. Any suggestions would be much appreciated!

For completeness sake - here are all the 599 errors in the log for the API/terraform user today:

Code:

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:22:41 +0000] "GET /api2/json/nodes/s11563090/tasks/UPID:s11563090:00276162:0CDCC775:654CC0F4:qmclone:60001:USER@REALM:/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:24:21 +0000] "GET /api2/json/nodes/s11563090/qemu/9111927/status/current HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:24:38 +0000] "GET /api2/json/nodes/s11563090/tasks/UPID:s11563090:0027A521:0CDCF3E2:654CC165:qmclone:60001:USER@REALM:/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:25:23 +0000] "GET /api2/json/nodes/s11563090/tasks/UPID:s11563090:0027BC26:0CDD03DD:654CC18E:qmclone:60001:USER@REALM:/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:25:39 +0000] "GET /api2/json/nodes/s11563090/storage/local/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:26:23 +0000] "GET /api2/json/nodes/s11563090/tasks/UPID:s11563090:0027DDCA:0CDD1941:654CC1C5:qmconfig:9111919:USER@REALM:/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:26:23 +0000] "GET /api2/json/nodes/s11563090/tasks/UPID:s11563090:0027E4D5:0CDD1D29:654CC1CF:qmclone:60001:USER@REALM:/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:27:13 +0000] "GET /api2/json/nodes/s11563090/tasks/UPID:s11563090:00280377:0CDD306D:654CC200:qmstart:9112223:USER@REALM:/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:27:18 +0000] "GET /api2/json/nodes/s11563090/storage/local/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:27:50 +0000] "GET /api2/json/nodes/s11563090/storage/local/content HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:28:09 +0000] "GET /api2/json/nodes/s11563090/storage/local/content HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:28:39 +0000] "GET /api2/json/nodes/s11563090/storage/local/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:29:00 +0000] "GET /api2/json/nodes/s11563090/storage/local/content HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:30:10 +0000] "GET /api2/json/nodes/s11563090/qemu/9111926/agent/network-get-interfaces HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:30:38 +0000] "GET /api2/json/nodes/s11563090/storage/local/content HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:35:58 +0000] "GET /api2/json/nodes/s11563090/storage/local/content HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:36:21 +0000] "GET /api2/json/nodes/s11563090/storage/local/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:40:36 +0000] "GET /api2/json/nodes/s11563090/tasks/UPID:s11563090:0029AC16:0CDE68C8:654CC520:qmstart:9112221:USER@REALM:/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:42:18 +0000] "GET /api2/json/nodes/s11563090/storage/local/content HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:42:40 +0000] "GET /api2/json/nodes/s11563090/storage/local/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:42:59 +0000] "GET /api2/json/nodes/s11563090/storage/local/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:43:41 +0000] "GET /api2/json/nodes/s11563090/qemu/9112222/status/current HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:47:07 +0000] "GET /api2/json/nodes/s11563090/tasks/UPID:s11563090:002A8B48:0CDEFB74:654CC697:qmclone:60001:USER@REALM:/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:49:29 +0000] "GET /api2/json/nodes/s11563090/qemu/9112224/status/current HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:50:10 +0000] "GET /api2/json/nodes/s11563090/storage/local/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:50:31 +0000] "GET /api2/json/nodes/s11563090/storage/local/content HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:11:51:02 +0000] "GET /api2/json/nodes/s11563090/storage/local/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:12:19:59 +0000] "GET /api2/json/nodes/s11563090/storage/local/content HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:12:20:21 +0000] "GET /api2/json/nodes/s11563090/storage/local/content HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:12:25:04 +0000] "GET /api2/json/nodes/s11563090/storage/local/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:12:25:18 +0000] "GET /api2/json/nodes/s11563090/storage/local/content HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:12:25:27 +0000] "GET /api2/json/nodes/s11563090/storage/local/content HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:12:25:27 +0000] "GET /api2/json/nodes/s11563090/storage/local/status HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:12:26:49 +0000] "GET /api2/json/nodes/s11563090/storage/local/content HTTP/1.1" 599 -

::ffff:IP_ADDR - USER@REALM [09/11/2023:12:27:07 +0000] "GET /api2/json/nodes/s11563090/tasks/UPID:s11563090:002D2D56:0CE2A0EA:654CCFED:qmstart:9122102:USER@REALM:/status HTTP/1.1" 599 -[CODE]