"Thank you, that is very helpful and looks promising, will try it in the lab soon."

@Kollskegg the udev link files support general globbing (I had to look up what that means).

This means you just use the DEVPATH match parameter and specify as much or as little as needed to match.

See https://www.man7.org/linux/man-pages/man7/udev.7.html, which lists all the udev parameters that can be matched on in the 'keys' section of the doc. Globbing means you can write expressions if needed, but if your setup is like that of the person you quoted, you should just need:

DEVPATH=/devices/pci0000:00/0000:00:1c.4/0000:07:00.0/0000:08:00.0/0000:09:00.0/domain0/0-0/0-3/0-1.0

DEVPATH=/devices/pci0000:00/0000:00:1c.4/0000:07:00.0/0000:08:00.0/0000:09:00.0/domain0/0-0/0-3/0-3.0

Or, to be more precise, you need the DEVPATH from the two different sections that mention thunderbolt-net:

Code:

P: /devices/pci0000:00/0000:00:1c.4/0000:07:00.0/0000:08:00.0/0000:09:00.0/domain0/0-0/0-3/0-3.0

M: 0-3.0

R: 0

U: thunderbolt

T: thunderbolt_service

V: thunderbolt-net

E: DEVPATH=/devices/pci0000:00/0000:00:1c.4/0000:07:00.0/0000:08:00.0/0000:09:00.0/domain0/0-0/0-3/0-3.0

E: SUBSYSTEM=thunderbolt

E: DEVTYPE=thunderbolt_service

E: DRIVER=thunderbolt-net

E: MODALIAS=tbsvc:knetworkp00000001v00000001r00000001
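Picking the DEVPATH out of "the sections that mention thunderbolt-net" can be scripted. The following is only a rough sketch: it assumes the text comes from udevadm info output in the `P:` / `E:` format quoted above, and the function name and the trimmed sample are made up for illustration (the 0-1.0 record is assumed to mirror the 0-3.0 one).

```python
def thunderbolt_net_devpaths(udevadm_output):
    """Return the DEVPATH of every record whose DRIVER is thunderbolt-net."""
    records = []
    for raw in udevadm_output.splitlines():
        line = raw.strip()
        if line.startswith("P: "):
            # A "P:" line starts a new device record.
            records.append({})
        elif line.startswith("E: ") and records:
            # "E:" lines carry KEY=VALUE properties of the current record.
            key, _, value = line[3:].partition("=")
            records[-1][key] = value
    return [
        r["DEVPATH"]
        for r in records
        if r.get("DRIVER") == "thunderbolt-net" and "DEVPATH" in r
    ]


# Trimmed, illustrative sample modeled on the output quoted in this thread.
BASE = "/devices/pci0000:00/0000:00:1c.4/0000:07:00.0/0000:08:00.0/0000:09:00.0/domain0/0-0/0-3"
SAMPLE = f"""\
P: {BASE}/0-1.0
E: DEVPATH={BASE}/0-1.0
E: SUBSYSTEM=thunderbolt
E: DRIVER=thunderbolt-net

P: {BASE}/0-3.0
E: DEVPATH={BASE}/0-3.0
E: SUBSYSTEM=thunderbolt
E: DRIVER=thunderbolt-net
"""

for path in thunderbolt_net_devpaths(SAMPLE):
    print(path)
```

Each printed path is a candidate value for a DEVPATH match.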

Intel Nuc 13 Pro Thunderbolt Ring Network Ceph Cluster

- Thread starter l-koeln


Hi @Kollskegg, I am having the same issue and tried a few globs. I cannot get it to work; are you having any success?

Sorry, no, I couldn't get a successful match using the full-length path. The MAC addresses per interface/port have been stable between reboots so far, so I resolved it by matching on those instead. The downside is that I've seen the MAC addresses change between re-installs and firmware upgrades. Some see the MAC addresses change on cable removal/insertion, but for me they don't.

That's why I suggest globbing: it allows you to do partial matches, expressions, etc. I suggest one of you use ChatGPT; if you carefully craft your question, I give it an 8 in 10 chance that it will give you the correct information.

I am sure I am missing something here.

I have the following two thunderbolt net devices:


Code:

P: /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1/0-1.0

M: 0-1.0

R: 0

J: +thunderbolt:0-1.0

U: thunderbolt

T: thunderbolt_service

V: thunderbolt-net

E: DEVPATH=/devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1/0-1.0

E: SUBSYSTEM=thunderbolt

E: DEVTYPE=thunderbolt_service

E: DRIVER=thunderbolt-net

E: MODALIAS=tbsvc:knetworkp00000001v00000001r00000001

P: /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-3/0-3.0

M: 0-3.0

R: 0

J: +thunderbolt:0-3.0

U: thunderbolt

T: thunderbolt_service

V: thunderbolt-net

E: DEVPATH=/devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-3/0-3.0

E: SUBSYSTEM=thunderbolt

E: DEVTYPE=thunderbolt_service

E: DRIVER=thunderbolt-net

E: MODALIAS=tbsvc:knetworkp00000001v00000001r00000001

For the systemd link file, I have tried a bunch of different routes, but should something like the following work?

Code:

[Match]

Property=DEVPATH=/devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-3/0-3.0

Driver=thunderbolt-net

[Link]

MACAddressPolicy=none

Name=ent3

I have never tested using the Property match.

Have you tried enclosing it in quotes, in case there are escaping issues with the / symbols?

You could also try using globbing (Property accepts * and ?, if ChatGPT is not hallucinating), something like:

Property=DEVPATH=*/0-3

Of course, that could still have the same issue if the / needs to be escaped in some form. I recommend this approach because it may not be the full endpoint that udevadm matches; that's why you don't see a full path match in my PCIe path reference version, to avoid issues like that.

Make sure you use udevadm's monitor function to see what happens when you remove and add the Thunderbolt cables, as that will show you what is and isn't getting matched.
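To make the glob idea concrete, here is a small sketch of how shell-style patterns behave against the two DEVPATH values posted above. It uses Python's `fnmatch` only to illustrate the `*` and `?` semantics described in udev(7); whether the Property= match in a systemd .link file applies globbing at all is not confirmed in this thread, so treat it as an assumption. The pattern list is purely illustrative.

```python
from fnmatch import fnmatchcase

# DEVPATH values taken from the udevadm output posted above.
DEVPATH_PORT1 = "/devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1/0-1.0"
DEVPATH_PORT3 = "/devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-3/0-3.0"


def glob_matches(pattern, devpath):
    """Shell-style glob test; the pattern must match the whole string."""
    return fnmatchcase(devpath, pattern)


# Illustrative patterns, not taken from any documentation.
PATTERNS = ["*/0-3", "*/0-3/*", "*/0-3.0", "*/domain0/0-0/0-?/0-?.0"]

for pattern in PATTERNS:
    print(
        f"{pattern:28} port1={glob_matches(pattern, DEVPATH_PORT1)} "
        f"port3={glob_matches(pattern, DEVPATH_PORT3)}"
    )
```

Under these semantics a pattern ending in `/0-3` matches neither path, because a glob has to cover the whole value and the path ends in `/0-3.0`. A trailing `*` or the full last component is needed to single out port 3.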


"PS: didn't read whole thread"

Evidently you didn't even read the last 10 posts. If you had, you would have realised why the way you do it doesn't work for these other people: the bus address is not unique for each port for them, so what you posted is unhelpful to them, as it won't solve their issue, and redundant, as it is no different from the base instructions.

Question for others who might be using a TB for Ceph. Is your

In the config snippets I have seen both here and online it looks as though most are running the public network from a physical link/VLAN, which makes sense if you need to share CEPH on the network with VMs, K8S for storage etc, but I assume this is going to be at a 1GB or 2.5GB network speed rather than the speed of the TB mesh?

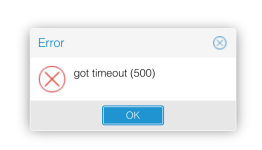

I have taken the approach of using a shared public and cluster network, but when doing so, although Ceph replication, VMs etc is all healthy, some of the Proxmox GUI (Datacenter > Ceph and ceph > osd > manage global settings) returns HTTPS 500s. While using a separate public network all is ok.

Keen to understand how others are using it and if they have experienced similar?

public_network shared with your cluster_network?In the config snippets I have seen both here and online it looks as though most are running the public network from a physical link/VLAN, which makes sense if you need to share CEPH on the network with VMs, K8S for storage etc, but I assume this is going to be at a 1GB or 2.5GB network speed rather than the speed of the TB mesh?

I have taken the approach of using a shared public and cluster network, but when doing so, although Ceph replication, VMs etc is all healthy, some of the Proxmox GUI (Datacenter > Ceph and ceph > osd > manage global settings) returns HTTPS 500s. While using a separate public network all is ok.

Keen to understand how others are using it and if they have experienced similar?

The documentation recommends following:


We recommend a network bandwidth of at least 10 Gbps, or more, to be used exclusively for Ceph traffic. A meshed network setup [https://pve.proxmox.com/wiki/Full_Mesh_Network_for_Ceph_Server ] is also an option for three to five node clusters, if there are no 10+ Gbps switches available.

The volume of traffic, especially during recovery, will interfere with other services on the same network, especially the latency sensitive Proxmox VE corosync cluster stack can be affected, resulting in possible loss of cluster quorum. Moving the Ceph traffic to dedicated and physical separated networks will avoid such interference, not only for corosync, but also for the networking services provided by any virtual guests.

For estimating your bandwidth needs, you need to take the performance of your disks into account. While a single HDD might not saturate a 1 Gb link, multiple HDD OSDs per node can already saturate 10 Gbps too. If modern NVMe-attached SSDs are used, a single one can already saturate 10 Gbps of bandwidth, or more. For such high-performance setups we recommend at least 25 Gbps, while even 40 Gbps or 100+ Gbps might be required to utilize the full performance potential of the underlying disks.

If unsure, we recommend using three (physical) separate networks for high-performance setups:

- one very high bandwidth (25+ Gbps) network for Ceph (internal) cluster traffic.

- one high bandwidth (10+ Gbps) network for Ceph (public) traffic between the ceph server and ceph client storage traffic. Depending on your needs this can also be used to host the virtual guest traffic and the VM live-migration traffic.

- one medium bandwidth (1 Gbps) exclusive for the latency sensitive corosync cluster communication.

https://pve.proxmox.com/wiki/Deploy...r#_recommendations_for_a_healthy_ceph_cluster

So for me this looks like having just a 1/2.5 Gbit public network isn't suitable.

Agree... though in my setup it's ~30 Gbit over TB. In a homelab with NUCs (which this thread is largely about), for testing, it sounds as though people are doing it and it's probably fine with understood risks.

In any case, that doesn't appear to be related to my issue.


I'm running with a shared

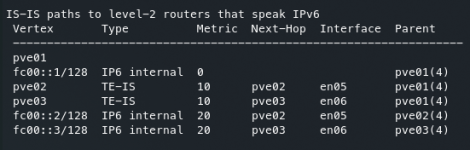

For external access i use direct host routes to the PVE hosts over IPv6.

Here's an example:

I had to disable

With this approach I also added a 4th (absolutely uncritical) Proxmox host to the cluster with no direct TB connection but access to the Ceph cluster.

cluster_network and public_network on the TB link.For external access i use direct host routes to the PVE hosts over IPv6.

Here's an example:

I had to disable

multicast_snooping on the Proxmox vmbr0 interface to have IPv6 NDP, DaD and so on IPv6 itself to work as expected.With this approach I also added a 4th (absolutely uncritical) Proxmox host to the cluster with no direct TB connection but access to the Ceph cluster.


Thanks for the info. That makes sense; I was looking at a direct route option for my k3s once I'm confident of the stability, as I want to ditch my Longhorn setup. Good to see it's working for you.

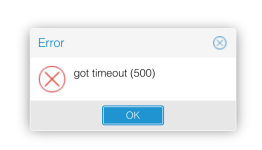

I assume your Proxmox Ceph GUI doesn’t have the issues that I’m experiencing with 500 status timeouts when clicking the datacentre > ceph option. Clicking the node > ceph option is ok. I have a similar error when I click the OSD manage globals button. If I try to click create button in the Ceph > Pools menu in the GUI, I also see a timeout before the modal even pops up, however I’ve just created several new pools via the CLI without any issue.

I might try to switch my OpenFabric to IPv6 and see if it’s any different.



"I'm using OpenFabric with IPv6:" (attachment 93016)

Thanks!! Good to know. Not sure what's going on on my side then, as it all seems to be perfect otherwise.

I'm gonna switch from IPv4 to IPv6 and see if it makes any difference.

Thanks for the confirmation, much appreciated!!

@frankyyy

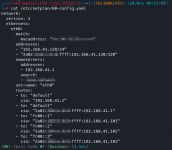

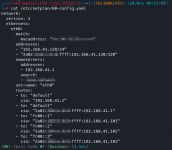

My Ceph cluster and Ceph public networks are both the TB network, and in my case they are the same IPv6 /124 subnet. Note that I found it is very critical to have the /124 if using the fc00 subnet, or weird crap can happen. I used to have a smaller mask and found it had some very odd issues when you start doing advanced FRR.

Code:

[global]

auth_client_required = cephx

auth_cluster_required = cephx

auth_service_required = cephx

cluster_network = fc00::80/124

fsid = 5e55fd50-d135-413d-bffe-9d0fae0ef5fa

mon_allow_pool_delete = true

mon_host = fc00::82 fc00::81 fc00::83

ms_bind_ipv4 = false

ms_bind_ipv6 = true

public_network = fc00::80/124

If any physical node not participating in the ring needs access to Ceph, I use FRR routing:

lan-access-to-mesh.md

If they are VMs running on the Proxmox nodes with access to the TB network, I do it this way:

how to access proxmox ceph mesh from VMs on the same proxmox nodes

These are considered guidance only, as I haven't validated them with anyone else yet to see if I made doc mistakes.
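As a quick sanity check on the /124 in the config above, Python's standard `ipaddress` module can show what that prefix covers. This is only a sketch using the addresses from the posted ceph.conf; the variable names and the fc00::90 probe address are just for illustration.

```python
import ipaddress

# Values copied from the ceph.conf posted above.
network = ipaddress.ip_network("fc00::80/124")
mon_hosts = [ipaddress.ip_address(a) for a in ("fc00::82", "fc00::81", "fc00::83")]

# A /124 leaves 4 host bits, so the subnet holds 2**4 = 16 addresses.
print("addresses in subnet:", network.num_addresses)
print("range:", network[0], "to", network[-1])

# Every monitor address has to fall inside the public/cluster network.
print("all mon_host inside:", all(host in network for host in mon_hosts))

# An address just past the end of the range is outside the subnet.
print("fc00::90 inside:", ipaddress.ip_address("fc00::90") in network)
```

So the subnet runs from fc00::80 to fc00::8f, and all three mon_host entries sit inside it.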


Great, thanks, good to know. I did see a mention of this in your doc as well.

I did switch IPv4 to IPv6 but saw the same issue... might shut VMs down, do a backup, delete, purge etc etc and rebuild Ceph and OpenFabric from scratch in case something is off.

@frankyyy what's the exact issue?

I have encountered all sorts of issues when the metadata accidentally registers OSDs, MDSs, etc. to addresses like 127.0.0.1 or 0.0.0.0. It can be super hard to fix; I got ChatGPT to write me some diagnostics to help.

Rebuilding is probably faster if you can do that safely.

Before recreating anything in Ceph, make sure everything is rebooted, routing and routes are perfect, and there are ZERO mistakes in hosts files, resolv.conf, etc., and you should be OK.
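One way to spot the 127.0.0.1 / 0.0.0.0 kind of registration is to run every address a daemon reports through a simple check. The sketch below is not the diagnostics mentioned above. It assumes you have already collected the addresses as plain strings by some other means, and the function name and sample list are hypothetical.

```python
import ipaddress


def suspicious_addresses(addresses):
    """Return the addresses that are loopback or unspecified (e.g. 127.0.0.1, 0.0.0.0)."""
    flagged = []
    for text in addresses:
        addr = ipaddress.ip_address(text)
        if addr.is_loopback or addr.is_unspecified:
            flagged.append(text)
    return flagged


# Hypothetical list of addresses that daemons registered with.
registered = ["fc00::81", "127.0.0.1", "fc00::82", "0.0.0.0", "::1"]

for bad in suspicious_addresses(registered):
    print("check this registration:", bad)
```

The check covers both IPv4 and IPv6, so `::1` and `::` are flagged as well.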
