00:00.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14e8

00:00.2 IOMMU: Advanced Micro Devices, Inc. [AMD] Device 14e9

00:01.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14ea

00:01.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Device 14ed

00:01.2 PCI bridge: Advanced Micro Devices, Inc. [AMD] Device 14ed

00:02.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14ea

00:02.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Device 14ee

00:02.2 PCI bridge: Advanced Micro Devices, Inc. [AMD] Device 14ee

00:03.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14ea

00:04.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14ea

00:08.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14ea

00:08.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Device 14eb

00:08.2 PCI bridge: Advanced Micro Devices, Inc. [AMD] Device 14eb

00:08.3 PCI bridge: Advanced Micro Devices, Inc. [AMD] Device 14eb

00:14.0 SMBus: Advanced Micro Devices, Inc. [AMD] FCH SMBus Controller (rev 71)

00:14.3 ISA bridge: Advanced Micro Devices, Inc. [AMD] FCH LPC Bridge (rev 51)

00:18.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14f0

00:18.1 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14f1

00:18.2 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14f2

00:18.3 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14f3

00:18.4 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14f4

00:18.5 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14f5

00:18.6 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14f6

00:18.7 Host bridge: Advanced Micro Devices, Inc. [AMD] Device 14f7

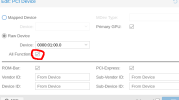

01:00.0 VGA compatible controller: NVIDIA Corporation GP107 [GeForce GTX 1050 Ti] (rev a1)

01:00.1 Audio device: NVIDIA Corporation GP107GL High Definition Audio Controller (rev a1)

02:00.0 Non-Volatile memory controller: Micron Technology Inc 7450 PRO NVMe SSD (rev 01)

03:00.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Upstream Port (rev 01)

04:00.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Downstream Port (rev 01)

04:08.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Downstream Port (rev 01)

04:09.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Downstream Port (rev 01)

04:0a.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Downstream Port (rev 01)

04:0c.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Downstream Port (rev 01)

04:0d.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset PCIe Switch Downstream Port (rev 01)

05:00.0 Ethernet controller: Solarflare Communications SFC9120 10G Ethernet Controller (rev 01)

05:00.1 Ethernet controller: Solarflare Communications SFC9120 10G Ethernet Controller (rev 01)

08:00.0 Ethernet controller: Realtek Semiconductor Co., Ltd. RTL8125 2.5GbE Controller (rev 05)

09:00.0 USB controller: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset USB 3.2 Controller (rev 01)

0a:00.0 SATA controller: Advanced Micro Devices, Inc. [AMD] 600 Series Chipset SATA Controller (rev 01)

0b:00.0 Non-Volatile memory controller: Seagate Technology PLC FireCuda 530 SSD (rev 01)

0c:00.0 VGA compatible controller: Advanced Micro Devices, Inc. [AMD/ATI] Phoenix1 (rev 05)

0c:00.1 Audio device: Advanced Micro Devices, Inc. [AMD/ATI] Rembrandt Radeon High Definition Audio Controller

0c:00.2 Encryption controller: Advanced Micro Devices, Inc. [AMD] Family 19h (Model 74h) CCP/PSP 3.0 Device

0c:00.3 USB controller: Advanced Micro Devices, Inc. [AMD] Device 15b9

0c:00.4 USB controller: Advanced Micro Devices, Inc. [AMD] Device 15ba

0c:00.6 Audio device: Advanced Micro Devices, Inc. [AMD] Family 17h/19h HD Audio Controller

0d:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Device 14ec

0d:00.1 Signal processing controller: Advanced Micro Devices, Inc. [AMD] AMD IPU Device

0e:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Device 14ec

0e:00.3 USB controller: Advanced Micro Devices, Inc. [AMD] Device 15c0

0e:00.4 USB controller: Advanced Micro Devices, Inc. [AMD] Device 15c1