I haven't had much luck myself; I've logged an issue with the ipex-llm project:

https://github.com/intel/ipex-llm/issues/12994

I was intending to go with an LXC container, but that meant using the 6.11 kernel, which wasn't going to work with the B580. So, similar to what you guys did, I installed the 6.11 Proxmox kernel and set up an Ubuntu 24.10 VM with the 6.13 kernel and raw PCI passthrough. Running update-pciids on Proxmox helped identify the B580 in the device list; otherwise I was using lspci to find its address.
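If you'd rather script the address lookup than scan lspci output by eye, here is a minimal sketch. It assumes a Linux host with sysfs mounted at `/sys`, and it matches vendor `0x8086` and device `0xe20b` (the ID the ollama log further down reports for the B580) under `/sys/bus/pci/devices`. The function name `find_pci_devices` is hypothetical.

```python
from pathlib import Path

INTEL_VENDOR = "0x8086"
B580_DEVICE = "0xe20b"  # ID shown in the ollama log as "Intel(R) Graphics [0xe20b]"


def find_pci_devices(vendor: str, device: str, root: str = "/sys/bus/pci/devices") -> list[str]:
    """Return PCI addresses (e.g. '0000:03:00.0') whose sysfs vendor/device IDs match."""
    matches = []
    base = Path(root)
    if not base.is_dir():
        return matches
    for dev in sorted(base.iterdir()):
        try:
            # sysfs exposes the IDs as hex strings such as "0x8086\n"
            v = (dev / "vendor").read_text().strip().lower()
            d = (dev / "device").read_text().strip().lower()
        except OSError:
            continue  # entry without readable IDs; skip it
        if v == vendor.lower() and d == device.lower():
            matches.append(dev.name)
    return matches


if __name__ == "__main__":
    for addr in find_pci_devices(INTEL_VENDOR, B580_DEVICE):
        print(addr)  # the address to pick in the VM's PCI passthrough settings
```

The printed address is the same one lspci shows, just with the PCI domain prefix included.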

My original plan with LXC was to mount a local directory easily so I could store the models outside a blob store; with a VM it was even more important to keep them out of the VM's file store. So I also added a 9p device:

```
args: -fsdev local,security_model=mapped,id=fsdev0,path=/mnt/rocket/llm/ -device virtio-9p-pci,id=fs0,fsdev=fsdev0,mount_tag=hostshare
```

I mounted it in the VM, added it to /etc/fstab, and then pointed the ipex-llm and open-webui containers at the mount. All my files are now read/write outside the VM, so I don't have to worry about it bloating up with cruft and old blobs I don't know how to manage properly.
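For reference, a sketch of what the guest side can look like. It assumes the commonly used virtio 9p mount options `trans=virtio,version=9p2000.L` and a hypothetical guest mount point `/mnt/llm`; only the `hostshare` tag comes from the `args:` line above. It builds the fstab entry and checks `/proc/mounts` to confirm the share is actually mounted before the containers start using it. Both function names are hypothetical.

```python
MOUNT_TAG = "hostshare"    # mount_tag from the -device virtio-9p-pci argument
GUEST_MOUNT = "/mnt/llm"   # hypothetical mount point inside the VM


def fstab_line(tag: str, mountpoint: str) -> str:
    """Build an /etc/fstab entry for a virtio 9p share (fields are whitespace-separated)."""
    return f"{tag}\t{mountpoint}\t9p\ttrans=virtio,version=9p2000.L\t0\t0"


def mounted_9p_points(mounts_text: str, tag: str) -> list[str]:
    """Return mount points in /proc/mounts-style text where `tag` is mounted as 9p."""
    points = []
    for line in mounts_text.splitlines():
        fields = line.split()
        # /proc/mounts fields: device, mount point, fs type, options, dump, pass
        if len(fields) >= 3 and fields[0] == tag and fields[2] == "9p":
            points.append(fields[1])
    return points


if __name__ == "__main__":
    print(fstab_line(MOUNT_TAG, GUEST_MOUNT))
    with open("/proc/mounts") as f:
        print(mounted_9p_points(f.read(), MOUNT_TAG) or "share not mounted")
```

Note that `/proc/mounts` escapes spaces in paths as `\040`, so a mount point containing spaces would need unescaping before comparison.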

I used the https://syslynx.net/llm-intel-b580-linux/ guide, but found that ipex-llm would fail to load the model. The logs indicated that ZES_ENABLE_SYSMAN wasn't enabled, even though it's set in the container env. Also, the B580 is reported as being used.
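One way to check whether ZES_ENABLE_SYSMAN actually reaches the running process, rather than only being declared in the container config, is to read `/proc/<pid>/environ` from inside the container. This is a sketch assuming a Linux `/proc` filesystem and that you can find the PID of the ollama server or runner process yourself; the helper names are hypothetical.

```python
import os
import sys


def parse_environ(raw: bytes) -> dict[str, str]:
    """Parse the NUL-separated KEY=VALUE pairs found in /proc/<pid>/environ."""
    env = {}
    for entry in raw.split(b"\0"):
        if b"=" in entry:
            # split on the first '=' only; values may themselves contain '='
            key, _, value = entry.partition(b"=")
            env[key.decode(errors="replace")] = value.decode(errors="replace")
    return env


def process_env(pid: int) -> dict[str, str]:
    """Read another process's environment (requires the same user or root).

    This shows the environment the process was started with; later setenv()
    calls made inside the process are not reflected here.
    """
    with open(f"/proc/{pid}/environ", "rb") as f:
        return parse_environ(f.read())


if __name__ == "__main__":
    pid = int(sys.argv[1]) if len(sys.argv) > 1 else os.getpid()
    print("ZES_ENABLE_SYSMAN =", process_env(pid).get("ZES_ENABLE_SYSMAN", "<not set>"))
```

Checking the runner process specifically matters because it is started as a child process, and a child only sees what its parent passes down.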

I tried a bunch of models just in case, since it looks like each can produce its own errors in the logs: Phi, Llama and Gemma, all the small ones.

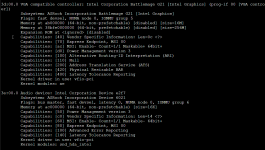

```
ggml_sycl_init: found 1 SYCL devices:
time=2025-03-23T10:51:50.559+08:00 level=INFO source=runner.go:967 msg="starting go runner"
time=2025-03-23T10:51:50.559+08:00 level=INFO source=runner.go:968 msg=system info="CPU : SSE3 = 1 | SSSE3 = 1 | AVX = 1 | AVX2 = 1 | F16C = 1 | FMA = 1 | LLAMAFILE = 1 | OPENMP = 1 | AARCH64_REPACK = 1 | CPU : SSE3 = 1 | SSSE3 = 1 | AVX = 1 | AVX2 = 1 | F16C = 1 | FMA = 1 | LLAMAFILE = 1 | OPENMP = 1 | AARCH64_REPACK = 1 | cgo(gcc)" threads=6
get_memory_info: [warning] ext_intel_free_memory is not supported (export/set ZES_ENABLE_SYSMAN=1 to support), use total memory as free memory
llama_load_model_from_file: using device SYCL0 (Intel(R) Graphics [0xe20b]) - 11605 MiB free
time=2025-03-23T10:51:50.559+08:00 level=INFO source=runner.go:1026 msg="Server listening on 127.0.0.1:45721"
time=2025-03-23T10:51:50.704+08:00 level=INFO source=server.go:605 msg="waiting for server to become available" status="llm server loading model"
llama_model_loader: loaded meta data with 34 key-value pairs and 883 tensors from /root/.ollama/models/blobs/sha256-377655e65351a68cddfbd69b7c8dc60c1890466254628c3e494661a52c2c5ada (version GGUF V3 (latest))
llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.
...
llama_model_loader: - type q4_K: 205 tensors
llama_model_loader: - type q6_K: 34 tensors
llama_model_load: error loading model: error loading model hyperparameters: key not found in model: gemma3.attention.layer_norm_rms_epsilon
llama_load_model_from_file: failed to load model
panic: unable to load model: /root/.ollama/models/blobs/sha256-377655e65351a68cddfbd69b7c8dc60c1890466254628c3e494661a52c2c5ada

goroutine 8 [running]:
ollama/llama/runner.(*Server).loadModel(0xc000119560, {0x3e7, 0x0, 0x0, 0x0, {0x0, 0x0, 0x0}, 0xc000503690, 0x0}, ...)
ollama/llama/runner/runner.go:861 +0x4ee
created by ollama/llama/runner.Execute in goroutine 1
ollama/llama/runner/runner.go:1001 +0xd0d
time=2025-03-23T10:51:50.954+08:00 level=ERROR source=sched.go:455 msg="error loading llama server" error="
llama runner process has terminated: error loading model: error loading model hyperparameters: key not found in model: gemma3.attention.layer_norm_rms_epsilon\nllama_load_model_from_file: failed to load model"
```
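Reading the log, the ZES_ENABLE_SYSMAN line is only a warning: the loader falls back to total memory and carries on using SYCL0. The load is aborted by the missing `gemma3.attention.layer_norm_rms_epsilon` key. To check whether a given blob actually carries that key, you can dump its GGUF metadata header. This is a minimal sketch, assuming the GGUF v2/v3 header layout (magic, version, 64-bit tensor and KV counts, then typed key/value pairs). It is not a full GGUF reader, and the function names are hypothetical.

```python
import struct
import sys
from typing import BinaryIO

GGUF_MAGIC = b"GGUF"
# GGUF metadata value type id -> struct format for the fixed-size types
_SCALAR = {0: "<B", 1: "<b", 2: "<H", 3: "<h", 4: "<I", 5: "<i",
           6: "<f", 7: "<?", 10: "<Q", 11: "<q", 12: "<d"}
_STRING, _ARRAY = 8, 9


def _read(f: BinaryIO, fmt: str):
    size = struct.calcsize(fmt)
    data = f.read(size)
    if len(data) != size:
        raise ValueError("truncated GGUF file")
    return struct.unpack(fmt, data)[0]


def _read_str(f: BinaryIO) -> str:
    n = _read(f, "<Q")  # strings are a uint64 length followed by UTF-8 bytes
    return f.read(n).decode("utf-8", errors="replace")


def _read_value(f: BinaryIO, vtype: int):
    if vtype in _SCALAR:
        return _read(f, _SCALAR[vtype])
    if vtype == _STRING:
        return _read_str(f)
    if vtype == _ARRAY:
        elem_type = _read(f, "<I")
        count = _read(f, "<Q")
        return [_read_value(f, elem_type) for _ in range(count)]
    raise ValueError(f"unknown GGUF value type {vtype}")


def gguf_metadata(f: BinaryIO) -> dict:
    """Read the key/value header of a GGUF v2/v3 file; tensor data is not touched."""
    if f.read(4) != GGUF_MAGIC:
        raise ValueError("not a GGUF file")
    version = _read(f, "<I")
    if version < 2:
        raise ValueError("GGUF v1 uses 32-bit counts; not handled here")
    _read(f, "<Q")  # tensor count (unused here)
    kv_count = _read(f, "<Q")
    meta = {}
    for _ in range(kv_count):
        key = _read_str(f)
        meta[key] = _read_value(f, _read(f, "<I"))
    return meta


if __name__ == "__main__":
    path, key = sys.argv[1], sys.argv[2]
    with open(path, "rb") as fh:
        meta = gguf_metadata(fh)
    print(meta.get("general.architecture"), key, "present" if key in meta else "MISSING")
```

Saved as, say, `gguf_keys.py` (a hypothetical name), it would be run against the blob path from the log with `gemma3.attention.layer_norm_rms_epsilon` as the second argument. Large tokenizer arrays are fully decoded, so expect it to take a few seconds on big vocabularies.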