Hello,

For a long time now, whenever I access my Windows 10 VM (and all my other VMs: macOS, Ubuntu, Windows 11...) through the noVNC console, I have had tremendous input lag, to the point of frustration. However, when I access the same VM via RDP, the input lag is normal or nonexistent. I have already tried changing the CPU type to host, as posts from years ago suggested, but that does not solve the problem.

The VM has 8 GB of RAM and 2 CPUs x 4 threads assigned, which I think is more than enough to run it without lag.

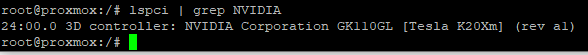

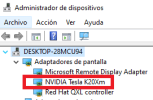

I have a graphics card, but I have never figured out how to make it work in Proxmox, for example by assigning part of its resources to a VM.

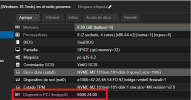

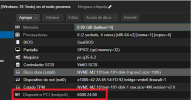

I have configured the VM like this:

The combination of CPU type x86-64-v2 and machine type q35-6.2 is the one with which I have noticed the least lag.

Any suggestions on how I can reduce or eliminate the input lag in the noVNC console?

If anyone needs the output of a command, I will be watching this thread and will respond as soon as possible.

Thank you
