Hi All,

I've made a massive screw-up: a script I was testing on a host inside a cluster deleted a lot of files from that host, and the deletions instantly replicated across to the other host in the cluster.

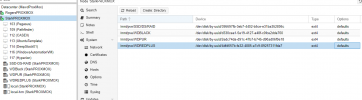

I don't know what got deleted. All I got was this output suggesting it was trying everything:

INFO: /bin/rm: cannot remove './etc/pve/.debug': Permission denied

INFO: /bin/rm: cannot remove './etc/pve/.vmlist': Permission denied

INFO: /bin/rm: cannot remove './etc/pve/.members': Permission denied

INFO: /bin/rm: cannot remove './etc/pve/.rrd': Permission denied

INFO: /bin/rm: cannot remove './etc/pve/.version': Permission denied

INFO: /bin/rm: cannot remove './etc/pve/.clusterlog': Permission denied

INFO: /bin/rm: cannot remove './run/rpc_pipefs/gssd/clntXX/info': Operation not permitted

INFO: find: '/bin/rm': No such file or directory

INFO: find: '/bin/rm': No such file or directory

In the console, which I still had connected, that host pretty much disappeared; the VMs were still up, but the host wasn't reporting. I rebooted that host and it's dead: it fails to boot. I can no longer connect to the remaining host in the GUI. I had a backup of /pve /etc and /root for this host, which I've restored, but it hasn't automatically come back online. Although I can't connect to the GUI, it's still up and is running critical items like my pfSense router, domain controllers, etc. If I reboot it and it doesn't come back up, I am absolutely up the river without a paddle.

I can still access the current host via SSH. Massively in need of help. Anything is appreciated.
