Hello everyone,

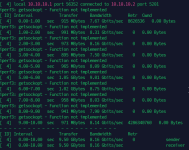

I’m currently testing migration performance between two test servers connected directly with a 10Gbps DAC SFP+ cable, but I cannot achieve anywhere near 10Gbps. My migration speed is stuck around 70 MB/s (~560 Mbps), which is far below expectations.

Both servers also have regular RJ45 NICs, but those are used only for GUI access and ISO uploads, not for migration.

I also tried installing Ubuntu on Server B and transferring a file over FTP, which averaged about 400–800 MB/s.

Hardware Setup

Server A (Proxmox VE 9.0)

- Model: Dell PowerEdge R620 (Server model may differ in the future)

- NIC: Chelsio T320 10GbE Dual-Port Adapter

- OS: Proxmox VE 9.0

- Disk: Dell Enterprise VJM47

- Network configuration:

auto vmbr1
iface vmbr1 inet static
    address 10.10.10.2/24
    mtu 9000
    bridge-ports enp5s0
    bridge-stp off
    bridge-fd 0

Server B (VMware ESXi 6.5)

- Model: HP ProLiant DL160 G6 (server model and VMware version may differ in the future)

- NIC: Intel X520 10GbE (SFP+)

- OS: ESXi 6.5

- Disk: Dell Enterprise VJM47

- Network configuration:

- Virtual Switches: Created vSwitch1 using the 10GbE NIC and set the MTU to 9000.

- Port group: PortGroup1, no VLAN, using vSwitch1.

- VMkernel NICs: Added a new VMkernel NIC on PortGroup1 with static IP 10.10.10.3/24 and MTU 9000.

- Physical NICs: Changed from auto-negotiate to 1000 Mbps, full duplex
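To double-check that the MTU of 9000 really holds end to end, the largest ping payload that fits in one unfragmented frame can be worked out as in the rough sketch below. The function name is hypothetical, and it assumes IPv4 without IP options (20-byte header) plus an 8-byte ICMP header.

```python
# Sketch: largest ICMP echo payload that fits in a single frame
# for a given MTU. Assumes IPv4 with no options and plain ICMP.
IPV4_HEADER_BYTES = 20   # assumption: no IP options
ICMP_HEADER_BYTES = 8


def max_icmp_payload(mtu: int) -> int:
    """Return the biggest ping payload that avoids fragmentation."""
    payload = mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES
    if payload < 0:
        raise ValueError("MTU is too small to carry an ICMP echo")
    return payload


if __name__ == "__main__":
    print(max_icmp_payload(9000))  # jumbo frames as configured on both sides
    print(max_icmp_payload(1500))  # standard Ethernet, for comparison
```

The jumbo-frame value is the size I would pass to a don't-fragment ping, for example `ping -M do -s 8972 10.10.10.3` from the Proxmox side or `vmkping -d -s 8972 10.10.10.2` from the ESXi side. If that ping fails while a smaller one works, jumbo frames are not passing along the whole path.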

Problem

Even though the DAC SFP+ link is 10Gbps and the MTU is set to 9000 on both sides, I cannot get more than ~70 MB/s during VM migration. I expected to reach:

- at least 500–800 MB/s, or ideally 900+ MB/s, for a direct 10GbE connection

- Note: I have also tried changing the migration options under Datacenter, but the migration speed is still low.
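To make the gap concrete, here is the unit arithmetic behind the figures above as a small sketch. The helper names are hypothetical, and it assumes decimal units (1 Gbps = 1000 Mbps, 8 bits per byte) and ignores protocol overhead.

```python
# Sketch: convert between MB/s and Mbps and compare the observed
# migration speed with the raw 10 Gbps line rate (overhead ignored).

def mbytes_to_mbits(mb_per_s: float) -> float:
    """MB/s -> Mbps (8 bits per byte)."""
    return mb_per_s * 8


def gbits_to_mbytes(gbit_per_s: float) -> float:
    """Gbps -> MB/s, using decimal units."""
    return gbit_per_s * 1000 / 8


if __name__ == "__main__":
    observed_mb_s = 70                          # migration speed I am seeing
    line_rate_mb_s = gbits_to_mbytes(10)        # raw ceiling of the DAC link
    print(mbytes_to_mbits(observed_mb_s))       # observed speed in Mbps
    print(line_rate_mb_s)                       # ceiling in MB/s
    print(f"{observed_mb_s / line_rate_mb_s:.1%} of line rate")
```

So 70 MB/s is about 560 Mbps, while the raw ceiling of a 10 Gbps link is 1250 MB/s, which is why I consider 900+ MB/s a realistic target once overhead is taken into account.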
