Hello.

This morning I was doing some stability testing on our staging cluster after reconfiguring it to use a dedicated corosync network (before that, we had "spontaneous" reboots during live migrations). The cluster has been stable so far, with no more reboots, which is nice.

One thing I didn't expect: when live migrating all VMs from one host to another (all hosts have the same amount of RAM), with the source at about 60% RAM usage and the destination at 1% (no VMs running at all), it started swapping to the point where the load went up to 45.

All my servers have `vm.swappiness = 0` in their `sysctl.conf` to prevent any swap usage unless absolutely necessary, so I really don't know why it decided to swap. I had noticed this happen before, but never to the point where the VMs running on that host became unresponsive for a few minutes.
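A value in `sysctl.conf` only takes effect once it is applied (at boot or with `sysctl -p`), so it may be worth confirming that the running kernel really uses `vm.swappiness = 0`. A minimal Python sketch, assuming the standard `/proc/sys/vm/swappiness` interface; both helper names are hypothetical:

```python
def swappiness_from_conf(text):
    """Return the last vm.swappiness set in sysctl.conf-style text, or None if unset."""
    value = None
    for raw in text.splitlines():
        line = raw.strip()
        # sysctl.conf treats lines starting with '#' or ';' as comments
        if not line or line[0] in "#;":
            continue
        key, sep, val = line.partition("=")
        # sysctl accepts both "vm.swappiness" and "vm/swappiness" as the key
        if sep and key.strip().replace("/", ".") == "vm.swappiness":
            value = int(val.strip())  # later assignments override earlier ones
    return value


def live_swappiness(path="/proc/sys/vm/swappiness"):
    """Read the swappiness value the running kernel is actually using."""
    with open(path) as f:
        return int(f.read().strip())
```

Comparing `swappiness_from_conf(open("/etc/sysctl.conf").read())` with `live_swappiness()` on each node would show whether the configured value was ever applied.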

After a few minutes I finally managed to get a working SSH prompt on that host and ran `vmstat 1`:

| procs: r | b | memory: swpd | free | buff | cache | swap: si | so | io: bi | bo | system: in | cs | cpu: us | sy | id | wa | st |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 5 | 5 | 3259756 | 29066428 | 73292 | 247168 | 0 | 1 | 1 | 2 | 1 | 5 | 3 | 1 | 96 | 0 | 0 |
| 3 | 4 | 3258648 | 29064704 | 73292 | 247248 | 1404 | 0 | 1404 | 0 | 9682 | 18232 | 11 | 1 | 77 | 12 | 0 |
| 2 | 4 | 3257200 | 29059968 | 73296 | 247228 | 1592 | 0 | 1673 | 44 | 12906 | 22503 | 12 | 1 | 77 | 10 | 0 |
| 2 | 4 | 3255564 | 29058876 | 73296 | 247276 | 1948 | 0 | 1948 | 0 | 9749 | 16625 | 11 | 1 | 78 | 10 | 0 |
| 1 | 5 | 3253336 | 29056668 | 73296 | 247200 | 2260 | 0 | 2260 | 0 | 6643 | 12255 | 7 | 0 | 81 | 12 | 0 |

...

Lots and lots of swap-in activity (the `si` column), as you can see.
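To put a number on that activity, the `vmstat` rows can be averaged. A small Python sketch, assuming `vmstat`'s default units (1024 bytes, changeable with `-S`) and its default column layout; the function names are made up for illustration:

```python
# Column names as printed by `vmstat` in its default mode
VMSTAT_COLUMNS = ["r", "b", "swpd", "free", "buff", "cache", "si", "so",
                  "bi", "bo", "in", "cs", "us", "sy", "id", "wa", "st"]


def parse_vmstat(text):
    """Turn `vmstat` text output into a list of {column: int} dicts."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        # Keep only data rows: skips the two header lines and any "..." lines
        if len(fields) == len(VMSTAT_COLUMNS) and all(f.isdigit() for f in fields):
            rows.append(dict(zip(VMSTAT_COLUMNS, map(int, fields))))
    return rows


def swap_rates(rows):
    """Average swap-in/swap-out rates (KiB/s by default) over the interval samples."""
    samples = rows[1:]  # the first vmstat line is an average since boot, not a live sample
    if not samples:
        return 0.0, 0.0
    n = len(samples)
    si = sum(r["si"] for r in samples) / n
    so = sum(r["so"] for r in samples) / n
    return si, so
```

For the five rows above, this gives an average of 1801 KiB/s swapped in and nothing swapped out, which matches `swpd` slowly shrinking: at that moment the host was reading pages back from swap rather than pushing new ones out.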

And yet `free` showed plenty of free memory a few minutes after that `vmstat` output:

| | total | used | free | shared | buffers | cached |
|---|---|---|---|---|---|---|
| Mem: | 65960840 | 36858404 | 29102436 | 69200 | 73268 | 247232 |
| -/+ buffers/cache: | | 36537904 | 29422936 | | | |
| Swap: | 8388604 | 3292632 | 5095972 | | | |
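The `-/+ buffers/cache` row is derived from the `Mem` row (used minus buffers and cache, free plus buffers and cache), so the figures can be cross-checked and the swap share computed. A small Python sketch, assuming the older procps `free` layout shown here with values in KiB; the function names are hypothetical:

```python
def parse_free(text):
    """Parse old-style procps `free` output (KiB by default) into {label: [ints]}."""
    rows = {}
    for line in text.splitlines():
        label, sep, rest = line.partition(":")
        if sep:  # the column-header line has no colon and is skipped
            rows[label.strip()] = [int(v) for v in rest.split()]
    return rows


def summarize(rows):
    """Recompute the -/+ buffers/cache row and the share of swap in use."""
    _total, used, free, _shared, buffers, cached = rows["Mem"]
    swap_total, swap_used, _swap_free = rows["Swap"]
    return {
        # Memory used/free when buffers and page cache are not counted as "used"
        "used_minus_cache": used - buffers - cached,
        "free_plus_cache": free + buffers + cached,
        "swap_used_pct": round(100 * swap_used / swap_total, 2),
    }
```

For the output above, this reproduces 36537904 / 29422936 and shows about 39.25% of swap in use while 29102436 KiB (roughly 27.75 GiB) of RAM was still free.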

All my servers are running this kernel: `4.4.40-1-pve #1 SMP PVE 4.4.40-82 (Thu, 23 Feb 2017 15:14:06 +0100) x86_64 GNU/Linux`, which I thought was the very latest (edit: nope, not the latest one, but the one before it). I have not yet updated to the pve packages released a few days ago on the pve-enterprise apt repository, but I don't think this is the issue.

All the other servers I've migrated VMs to also show heavy swap usage, but at least they did not become unresponsive. Here is another one, for example, almost 2 hours after the migration:

| | total | used | free | shared | buffers | cached |
|---|---|---|---|---|---|---|
| Mem: | 65960844 | 50532992 | 15427852 | 67364 | 67168 | 185576 |
| -/+ buffers/cache: | | 50280248 | 15680596 | | | |
| Swap: | 8388604 | 8314972 | 73632 | | | |
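To see which processes actually hold the swapped-out pages, the per-process `VmSwap` field in `/proc/<pid>/status` (provided by 4.4 kernels) can be collected and sorted. A rough Python sketch; the helper names are hypothetical, and on Proxmox VE the VM processes would presumably show up under the name `kvm`:

```python
import os


def status_fields(status_text):
    """Split /proc/<pid>/status text into {field: value-string}."""
    fields = {}
    for line in status_text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key] = value.strip()
    return fields


def vmswap_kib(status_text):
    """Swap used by one process in KiB, or 0 when VmSwap is absent (e.g. kernel threads)."""
    value = status_fields(status_text).get("VmSwap")
    return int(value.split()[0]) if value else 0


def top_swap_users(proc="/proc", limit=10):
    """Return (kib, pid, name) tuples for the processes holding the most swap."""
    results = []
    if not os.path.isdir(proc):
        return results  # not a Linux /proc layout
    for pid in os.listdir(proc):
        if not pid.isdigit():
            continue
        try:
            with open(os.path.join(proc, pid, "status")) as f:
                text = f.read()
        except OSError:
            continue  # process exited or status not readable
        name = status_fields(text).get("Name", "?")
        results.append((vmswap_kib(text), int(pid), name))
    results.sort(reverse=True)
    return results[:limit]
```

Running `top_swap_users()` as root on one of the affected nodes would list the largest swap holders first.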

Am I supposed to simply disable swap altogether, or am I missing something? This is quite a problem, as I live migrate VMs from host to host every time I do an upgrade.

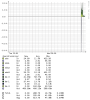

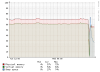

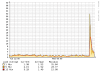

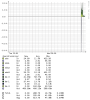

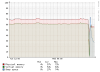

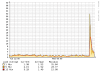

I've attached graphs showing the IO activity and load when it started swapping...
