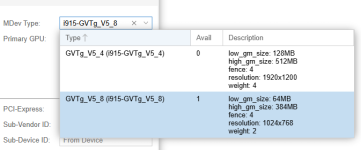

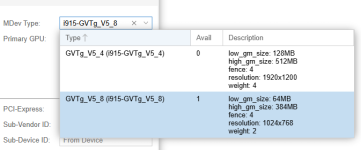

Have a Proxmox 8.3.3 up to date cluster with 4 nodes running i5-8500T (6 cores), mediated GPU detected and properly configured:

The VM runs Windows 11, which detects the GPU correctly and works very well.

I've set up a nightly backup job that writes to a mounted storage, using ZSTD compression (fast and good) in Snapshot mode. In most cases this results in a complete hang of the VM: it appears to be running, but it isn't responsive. Force stopping has no effect.

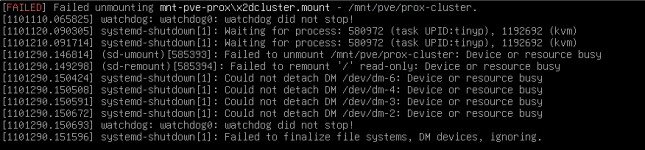

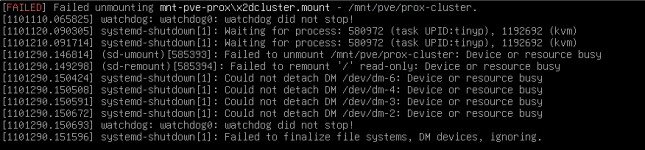

In a desperate attempt I tried to reboot the node, but it hangs at the console with:

The only way to recover is to pull the plug.

I tried changing the backup mode to Suspend, and the same thing happens in most cases.

It's not consistent: sometimes the backup succeeds, sometimes it doesn't, but mostly it doesn't. This is very annoying because by the time we notice the lockup we're usually off-site, so pulling the plug isn't immediately feasible. I was expecting the watchdog to reboot the node, but it doesn't.
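For reference, the nightly job described above corresponds roughly to a manual `vzdump` run with `--mode snapshot --compress zstd`, and the Suspend test to the same call with `--mode suspend`. The snippet below is only a minimal sketch: the VMID `100` and the storage ID `backup-nas` are hypothetical placeholders, and it merely assembles the argument lists so the two modes can be compared or run by hand. It does not execute anything.

```python
from typing import List

# Hypothetical values for illustration only; substitute the real VMID and storage ID.
VMID = 100
STORAGE = "backup-nas"

VALID_MODES = ("snapshot", "suspend", "stop")  # vzdump backup modes


def vzdump_command(vmid: int, storage: str, mode: str) -> List[str]:
    """Build (but do not execute) a vzdump command line for one VM."""
    if mode not in VALID_MODES:
        raise ValueError(f"unsupported backup mode: {mode}")
    return [
        "vzdump", str(vmid),
        "--mode", mode,          # snapshot or suspend, as tried in the post
        "--compress", "zstd",    # same compression as the scheduled job
        "--storage", storage,    # hypothetical target storage ID
    ]


if __name__ == "__main__":
    # Print both variants so they can be copied into a shell on the node.
    for m in ("snapshot", "suspend"):
        print(" ".join(vzdump_command(VMID, STORAGE, m)))
```

Running the printed command manually on the node while watching the VM console can make it easier to see at which point the guest stops responding.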

My other issue is that I can't migrate the VM between nodes unless it's completely shut down. I'm aware that live migration isn't possible (even with mapped devices), but I was hoping that at least a suspend or restart mode would be offered as an option in the Migrate dialog.
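A "restart mode" migration, as described above, would essentially be a clean shutdown, an offline migration, and a start on the target node. The sketch below only plans that sequence with the standard `qm shutdown`, `qm migrate` and `qm start` commands; the VMID `100` and the node names `pve1`/`pve2` are hypothetical placeholders. Note that `qm start` has to be run on the target node, since after migration the VM belongs to that node.

```python
from typing import List, Tuple

# Hypothetical values for illustration only.
VMID = 100
SOURCE_NODE = "pve1"
TARGET_NODE = "pve2"

Step = Tuple[str, List[str]]  # (node the command runs on, argv)


def restart_migration_plan(vmid: int, source: str, target: str) -> List[Step]:
    """Return the ordered shutdown -> offline migrate -> start steps.

    This only plans the commands; it does not execute them.
    """
    if source == target:
        raise ValueError("source and target node must differ")
    vm = str(vmid)
    return [
        (source, ["qm", "shutdown", vm]),          # clean guest shutdown on the source node
        (source, ["qm", "migrate", vm, target]),   # offline migration while the VM is stopped
        (target, ["qm", "start", vm]),             # start the VM again on its new node
    ]


if __name__ == "__main__":
    for node, argv in restart_migration_plan(VMID, SOURCE_NODE, TARGET_NODE):
        print(f"[{node}] {' '.join(argv)}")
```

Keeping the plan separate from execution makes it easy to inspect; a script that actually ran these steps would also need to check that the VM really stopped before migrating, which this sketch leaves out.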