I'm testing PBS at the moment to find out whether it will be the solution for my backup needs.

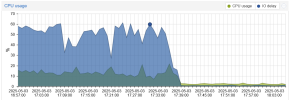

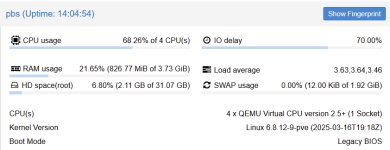

- PBS is running in a VM on PVE.

- The VMs are stored on local NVMe drives.

- An external USB 3.0 hard disk is passed through to PBS. The filesystem is ext4.

- Backups run in snapshot mode.

- Backups are encrypted.

In the logs of the running backup job on PVE, I can see the read/write rates rising and falling in waves, with write rates swinging between > 300 MiB/s and < 30 MiB/s.
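To put numbers on the waves instead of eyeballing them, the rate lines from the job log can be collected and summarized. This is a minimal sketch that assumes the progress lines contain `read: <n> MiB/s, write: <n> MiB/s`; the exact wording may differ between PVE versions, so the regex may need adjusting. The sample lines are made-up placeholders, not real output from this job.

```python
import re
from statistics import mean

# Assumption: progress lines look like "... read: 410.0 MiB/s, write: 395.0 MiB/s".
# Adjust the pattern if your PVE version formats them differently.
RATE_RE = re.compile(r"read: ([\d.]+) MiB/s, write: ([\d.]+) MiB/s")


def parse_rates(log_text):
    """Return (read, write) pairs in MiB/s for every progress line found."""
    return [(float(r), float(w)) for r, w in RATE_RE.findall(log_text)]


def summarize(rates, low_mark=30.0):
    """Min/mean/max write rate and how many samples fell below low_mark MiB/s."""
    writes = [w for _, w in rates]
    return {
        "samples": len(writes),
        "write_min": min(writes),
        "write_mean": round(mean(writes), 1),
        "write_max": max(writes),
        "below_low_mark": sum(1 for w in writes if w < low_mark),
    }


# Made-up placeholder lines, only to show the expected shape of the input.
sample = """\
INFO:   1% (0.3 GiB of 32.0 GiB) in 1s, read: 410.0 MiB/s, write: 395.0 MiB/s
INFO:   3% (1.0 GiB of 32.0 GiB) in 4s, read: 200.0 MiB/s, write: 28.0 MiB/s
INFO:   5% (1.6 GiB of 32.0 GiB) in 7s, read: 210.0 MiB/s, write: 310.0 MiB/s
"""

print(summarize(parse_rates(sample)))
```

Feeding it the full log of one job gives a quick count of how often the write rate dips below ~30 MiB/s.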

I'm trying to understand where the problem comes from. My conclusions so far:

- Encryption needs CPU power. The CPU never comes near 100%, so it doesn't seem to be the bottleneck.

- However, the iowait indicates the CPU is waiting for I/O.

- So the question is, is it waiting to read more data or is it waiting to write data?

- Since read rates are consistently higher than write rates, I conclude that the CPU reads at the highest possible speed, processes whatever it gets and then tries to write it to the target drive. This write transfer does not seem to be fast enough, which is why we see the iowait.

- USB 3.0 (SuperSpeed) signals at 5 Gbit/s, which should allow roughly 400 MB/s net. At the very beginning of each transfer, write rates start somewhere near 400 MB/s. I take this as "proof" that the USB drive is indeed operating at SuperSpeed. However, rates quickly drop to a fraction of the initial rate and move in waves after that, never coming near 400 MB/s again.

- To me, these waves look like a fast cache in front of the actual hard disk being filled faster than the disk itself can write. Each time the cache is full, the CPU goes into iowait while the disk flushes the cache. I'm wondering whether this is a cache on the disk, in the USB controller, or ...
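To answer whether the CPU is waiting on the source or on the target, the two devices can be measured directly instead of inferring it from the job log. That matters because the read/write gap in the log is not conclusive on its own: PBS deduplicates chunks, so data that is already in the datastore is generally not written again. The sketch below samples `/proc/diskstats` twice and computes per-device MiB/s and a busy percentage, similar in spirit to `iostat -x`. It assumes Linux; the device names are not known from the post, and the busy percentage is mainly meaningful for a single spinning disk, less so for NVMe drives that serve many requests in parallel.

```python
import time

SECTOR_BYTES = 512  # /proc/diskstats counts sectors in 512-byte units
MIB = 2**20


def parse_diskstats(text):
    """Map device name -> (sectors_read, sectors_written, ms_doing_io)."""
    stats = {}
    for line in text.splitlines():
        f = line.split()
        if len(f) < 14:
            continue
        # f[2]=device, f[5]=sectors read, f[9]=sectors written, f[12]=ms spent doing I/O
        stats[f[2]] = (int(f[5]), int(f[9]), int(f[12]))
    return stats


def rates(before, after, interval_s):
    """Per-device read/write MiB/s and busy % between two snapshots."""
    out = {}
    for dev, (r0, w0, t0) in before.items():
        if dev not in after:
            continue
        r1, w1, t1 = after[dev]
        out[dev] = {
            "read_mib_s": (r1 - r0) * SECTOR_BYTES / MIB / interval_s,
            "write_mib_s": (w1 - w0) * SECTOR_BYTES / MIB / interval_s,
            # share of wall-clock time with at least one request in flight
            "util_pct": (t1 - t0) * 100 / (interval_s * 1000),
        }
    return out


def sample(interval_s=1.0, path="/proc/diskstats"):
    """Take two snapshots interval_s apart and return rates() for all devices."""
    with open(path) as fh:
        before = parse_diskstats(fh.read())
    time.sleep(interval_s)
    with open(path) as fh:
        after = parse_diskstats(fh.read())
    return rates(before, after, interval_s)
```

For example, `sample(5.0)["sdb"]` inside the PBS VM during a backup, where `sdb` is a hypothetical name for the passed-through USB disk. A USB disk sitting near 100% busy while its write rate swings would point at the target drive rather than at the NVMe source.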
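The USB figures in the bullets above are easy to mix up: USB 3.0 SuperSpeed is 5 Gbit/s (gigabits, not gigabytes), and the job log reports MiB/s while the estimate is in MB/s. A quick calculation, assuming the 8b/10b line coding that USB 3.0 uses and ignoring protocol overhead:

```python
# USB 3.0 SuperSpeed signals at 5 Gbit/s; with 8b/10b line coding only
# 8 of every 10 transmitted bits carry payload.
SIGNALING_BPS = 5_000_000_000
ENCODING_EFFICIENCY = 8 / 10


def usb3_payload_ceiling_bytes_per_s():
    """Theoretical payload ceiling in bytes/s, before USB protocol overhead."""
    return SIGNALING_BPS * ENCODING_EFFICIENCY / 8


def mb_to_mib(mb):
    """Convert decimal megabytes (10**6 bytes) to mebibytes (2**20 bytes)."""
    return mb * 10**6 / 2**20


def mib_to_mb(mib):
    """Convert mebibytes (2**20 bytes) to decimal megabytes (10**6 bytes)."""
    return mib * 2**20 / 10**6


ceiling_mb = usb3_payload_ceiling_bytes_per_s() / 1e6
print(f"ceiling: {ceiling_mb:.0f} MB/s = {mb_to_mib(ceiling_mb):.1f} MiB/s")
print(f"400 MB/s = {mb_to_mib(400):.1f} MiB/s, 300 MiB/s = {mib_to_mb(300):.1f} MB/s")
```

The gap between the ~500 MB/s coding ceiling and the ~400 MB/s net figure is usually attributed to protocol overhead. Either number is a bus limit; the sustained write speed of the disk behind the USB bridge may be considerably lower.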

Is there anything I can improve in this case? Are there any BIOS settings that might improve the transfer/cache handling? Anything other than getting a faster hard disk (I'm aware these external USB drives are rather low-end performance-wise, so maybe this is just how it is)? A different filesystem?
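On the cache question: besides caches on the disk or in the USB bridge, the Linux page cache inside the PBS VM also buffers writes. The kernel lets dirty pages accumulate and throttles writers once they exceed `vm.dirty_ratio` (or `vm.dirty_bytes`), which can look like a fast burst followed by iowait. A minimal sketch for checking this, assuming it runs inside the PBS VM while a backup is active, watches the `Dirty` and `Writeback` counters in `/proc/meminfo`:

```python
import time


def parse_meminfo(text):
    """Return Dirty and Writeback from /proc/meminfo text, converted to MiB."""
    vals = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        if key in ("Dirty", "Writeback"):
            vals[key] = int(rest.split()[0]) / 1024  # meminfo values are in kB (KiB)
    return vals


def read_vm_sysctl(name):
    """Read an integer knob such as "dirty_ratio" from /proc/sys/vm."""
    with open(f"/proc/sys/vm/{name}") as fh:
        return int(fh.read().strip())


def watch(seconds=30, path="/proc/meminfo"):
    """Print the dirty thresholds, then Dirty/Writeback once per second."""
    # Only one of each pair is in effect: if dirty_bytes is 0, dirty_ratio applies
    # (likewise for the background pair).
    for knob in ("dirty_ratio", "dirty_bytes", "dirty_background_ratio", "dirty_background_bytes"):
        print(f"vm.{knob} = {read_vm_sysctl(knob)}")
    for _ in range(seconds):
        with open(path) as fh:
            v = parse_meminfo(fh.read())
        print(f"Dirty {v['Dirty']:8.1f} MiB   Writeback {v['Writeback']:8.1f} MiB")
        time.sleep(1)
```

If `Dirty` repeatedly climbs to a plateau and the job's write rate collapses while `Writeback` drains, the page cache is at least part of the picture. Lowering `vm.dirty_bytes` is sometimes used to smooth writes to slow disks at the cost of the initial burst; whether it helps in this setup would need testing.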

For comparison, I had previously been doing backups to a second internal NVMe disk, where I got much faster rates and didn't see these waves. I don't remember whether there was iowait, as I wasn't focusing on it back then, but I guess there wasn't, or I might have noticed it.
