Hello

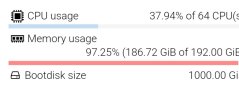

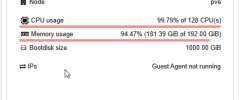

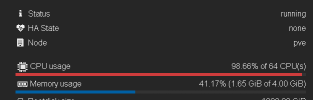

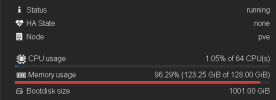

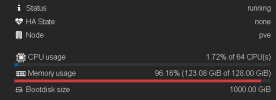

I have an odd issue that started after my server crashed. My current setup is as follows:

- 256-core EPYC Milan
- 512 GB of memory

I have this split into three VMs (Windows Server), and the host is running the latest Proxmox version.

My server crashed last week. I'm not sure what happened, but I had to force it to shut down. Since it came back online, one of my VMs runs terribly. Every time I start it, CPU usage immediately jumps to 50-60% and stays there, and when I remote in, it feels very slow. Any tips or tricks for tracking down what could be causing this one VM's poor performance after the crash?