Hello,

I am relatively new to Proxmox, so bear with me as I am still learning the right terms, but so far Proxmox is amazing.

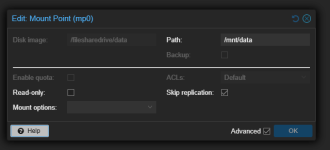

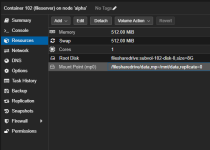

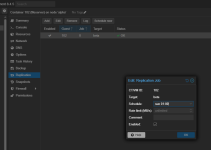

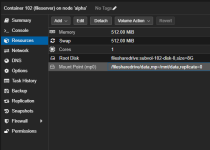

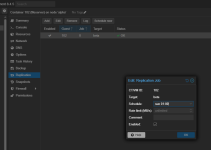

I just managed to get 3 PCs into a cluster. Each of them has an extra drive set up as ZFS storage, so I can move containers between them without an issue. I have a TurnKey LXC running a Samba server, with a mountpoint for the share data. When I try to replicate it, I get an error that mountpoints cannot be replicated. I went into the container and chose skip replication for the mountpoint, but then only the LXC itself gets replicated, not the data in the mountpoint.

Is there a way to replicate the mountpoint data between the nodes in the cluster from the web interface? The most I can think of is a cron job or something similar that just copies the data.
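
To make the cron job idea concrete, here is a rough sketch of what I have in mind: a small Python script that uses rsync over SSH to push the mountpoint data to another node. The path `/tank/share` and the host `root@pve2` are placeholders I made up for illustration, and this assumes rsync and key-based SSH are already working between the nodes. I have not confirmed this is the recommended approach.

```python
import shlex
import subprocess


def build_rsync_cmd(src_dir, dest_host, dest_dir, dry_run=False):
    """Build the rsync command that mirrors src_dir to dest_host:dest_dir."""
    # A trailing slash makes rsync copy the directory's contents
    # rather than the directory itself.
    src = src_dir.rstrip("/") + "/"
    # -a preserves permissions, ownership and timestamps;
    # --delete removes files on the target that no longer exist locally.
    cmd = ["rsync", "-a", "--delete"]
    if dry_run:
        # Show what would change without copying anything.
        cmd.append("--dry-run")
    cmd += ["-e", "ssh", src, f"{dest_host}:{dest_dir}"]
    return cmd


def sync(src_dir, dest_host, dest_dir, dry_run=False):
    """Run the rsync command and raise if it exits with an error."""
    cmd = build_rsync_cmd(src_dir, dest_host, dest_dir, dry_run=dry_run)
    print("running:", shlex.join(cmd))
    subprocess.run(cmd, check=True)
```

I would call something like `sync("/tank/share", "root@pve2", "/tank/share", dry_run=True)` first to see what would be copied, then schedule the real run from cron. Since `--delete` removes files on the target, this would be a mirror rather than a backup with history.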

Any suggestions on the best way to back up or replicate the data to the other drives? Any advice is appreciated.
