Hello,

I am new to Proxmox and googled a lot before opening this thread. I am stuck in an endless loop of io-errors and I'm desperately seeking help. Here is a brief description of the installation process and my troubleshooting so far, plus some more data that I hope will help solve the problem.

I am using Proxmox because I want to add another VM with Home Assistant OS (currently I'm running it on a Raspberry Pi) with Frigate (that's what the graphics card is for), and because when I installed TrueNAS Scale directly as the OS, it didn't recognize the graphics card or the iGPU if nothing was connected to the HDMI ports on the motherboard and on the graphics card.

some logs:

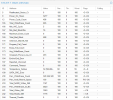


- Installed Proxmox on the SSD and created a VM (ID 100) with TrueNAS Scale in it (following a YouTube video)

- From the Proxmox shell, attached the 3 HDDs (4TB each) to the VM with `qm set 100 -scsi1 /dev/disk/by-id/XXX`, repeated for scsi2 and scsi3 (scsi0 is the SSD the VM is installed on) (YouTube)

- In TrueNAS, created a pool from the 3 HDDs with ZFS RAIDZ1, then created a dataset named "prox-share", shared it via SMB, created a user and gave it permissions on the SMB dataset (via the ACL Editor) (YouTube, from minute 7:23)

- Installed only the Jellyfin app, version 10.9.2 (the official TrueNAS app, not the TrueCharts one), and gave it the SMB folder as additional storage

- Started playing with Jellyfin only

- After 20 minutes of transferring some Doctor Who episodes, I got the io-error

- Restarted the VM and logged in to the TrueNAS CLI and the Linux CLI (via PuTTY SSH). No error showed up there when the problem occurred, RAM was only at 13GB out of 20GB, and there was no error in the task history or the cluster log

- Removed the 3 HDDs, reinstalled Proxmox on the SSD, replaced the HDDs with a single 128GB SSD, and got exactly the same error after repeating the same process (but without the ZFS pool)

- Now I'm back to the 3 HDD setup and still get the same error: when I restart and do nothing, the error occurs again after 20 minutes. I even tried starting the TrueNAS VM with the Jellyfin app stopped, and the error still occurs after 20 minutes without my doing anything
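For anyone reproducing the disk step above: each passed-through disk needs its own `-scsiN` option, so "-scsi1-3" is shorthand for three separate options. Below is a minimal sketch, assuming bash, that only prints (does not run) one `qm set` command per disk. `print_passthrough_cmds` is a hypothetical helper name, and the disk IDs are copied from the `qm config 100` output further down.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints (does NOT run) one qm set command per disk,
# attaching each whole disk by its stable /dev/disk/by-id name.
# Assumption: scsi0 holds the VM's boot disk, so data disks start at scsi1.
print_passthrough_cmds() {
  local vmid="$1"
  shift
  local slot=1
  local id
  for id in "$@"; do
    printf 'qm set %s -scsi%d /dev/disk/by-id/%s\n' "$vmid" "$slot" "$id"
    slot=$((slot + 1))
  done
}

# Disk IDs copied from the qm config 100 output in this post.
print_passthrough_cmds 100 \
  ata-WDC_WD40EFPX-68C6CN0_WD-WX12D93PDT62 \
  ata-WDC_WD40EFPX-68C6CN0_WD-WX42D835CYA0 \
  ata-WDC_WD40EFPX-68C6CN0_WD-WX52D934X53L
```

Review the printed lines before pasting them into the Proxmox shell. Using /dev/disk/by-id paths rather than /dev/sdX keeps the mapping stable even if the kernel orders the drives differently after a reboot.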

Code:

Motherboard - Gigabyte GA-Z77MD3H

CPU - i7-3770 (only 4 cores to the VM)

Graphics Card - Radeon HD 7770 (not passed through to the VM)

Memory (RAM) - 8GB*2 + 4GB*2 = 24GB (only 20GB to the VM)

SSD - SanDisk 240GB (only 42GB to the VM)

HDD - WD Red Plus 3*4TB (all to the VM)

PSU - 450W

Proxmox Version - 8.1.4

TrueNAS Scale Version - 23.10.2

pveversion -v results

Code:

root@MyServer:~# pveversion -v

proxmox-ve: 8.1.0 (running kernel: 6.5.11-8-pve)

pve-manager: 8.1.4 (running version: 8.1.4/ec5affc9e41f1d79)

proxmox-kernel-helper: 8.1.0

proxmox-kernel-6.5: 6.5.11-8

proxmox-kernel-6.5.11-8-pve-signed: 6.5.11-8

ceph-fuse: 17.2.7-pve2

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx8

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-4

libknet1: 1.28-pve1

libproxmox-acme-perl: 1.5.0

libproxmox-backup-qemu0: 1.4.1

libproxmox-rs-perl: 0.3.3

libpve-access-control: 8.0.7

libpve-apiclient-perl: 3.3.1

libpve-common-perl: 8.1.0

libpve-guest-common-perl: 5.0.6

libpve-http-server-perl: 5.0.5

libpve-network-perl: 0.9.5

libpve-rs-perl: 0.8.8

libpve-storage-perl: 8.0.5

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 5.0.2-4

lxcfs: 5.0.3-pve4

novnc-pve: 1.4.0-3

proxmox-backup-client: 3.1.4-1

proxmox-backup-file-restore: 3.1.4-1

proxmox-kernel-helper: 8.1.0

proxmox-mail-forward: 0.2.3

proxmox-mini-journalreader: 1.4.0

proxmox-offline-mirror-helper: 0.6.4

proxmox-widget-toolkit: 4.1.3

pve-cluster: 8.0.5

pve-container: 5.0.8

pve-docs: 8.1.3

pve-edk2-firmware: 4.2023.08-3

pve-firewall: 5.0.3

pve-firmware: 3.9-1

pve-ha-manager: 4.0.3

pve-i18n: 3.2.0

pve-qemu-kvm: 8.1.5-2

pve-xtermjs: 5.3.0-3

qemu-server: 8.0.10

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.2.2-pve1

qm config 100 result:

Code:

root@MyServer:~# qm config 100

balloon: 0

boot: order=scsi0;net0

cores: 4

cpu: x86-64-v2-AES

machine: q35

memory: 20480

meta: creation-qemu=8.1.5,ctime=1711715877

name: TrueNAS

net0: virtio=BC:24:11:38:96:29,bridge=vmbr0,firewall=1

numa: 0

onboot: 1

ostype: l26

scsi0: local-lvm:vm-100-disk-0,iothread=1,size=42G

scsi1: /dev/disk/by-id/ata-WDC_WD40EFPX-68C6CN0_WD-WX12D93PDT62,size=3907018584K

scsi2: /dev/disk/by-id/ata-WDC_WD40EFPX-68C6CN0_WD-WX42D835CYA0,size=3907018584K

scsi3: /dev/disk/by-id/ata-WDC_WD40EFPX-68C6CN0_WD-WX52D934X53L,size=3907018584K

scsihw: virtio-scsi-single

smbios1: uuid=f8b3d36f-a7a7-478e-a315-f5d50e07b7dc

sockets: 1

unused0: local-lvm:vm-100-disk-1

unused1: local-lvm:vm-100-disk-2

vmgenid: f25088f8-13f6-4b93-961c-8957a62f18e3

syslog from Proxmox (the error occurred at 21:37):

Code:

May 24 21:17:14 MyServer pvedaemon[22128]: <root@pam> starting task UPID:MyServer:00006A55:000E6431:6650D9AA:qmstart:100:root@pam:

May 24 21:17:14 MyServer pvedaemon[27221]: start VM 100: UPID:MyServer:00006A55:000E6431:6650D9AA:qmstart:100:root@pam:

May 24 21:17:14 MyServer kernel: sdd: sdd1 sdd2

May 24 21:17:14 MyServer kernel: sdb: sdb1 sdb2

May 24 21:17:14 MyServer kernel: sdc: sdc1 sdc2

May 24 21:17:14 MyServer systemd[1]: Started 100.scope.

May 24 21:17:15 MyServer kernel: tap100i0: entered promiscuous mode

May 24 21:17:15 MyServer kernel: vmbr0: port 2(fwpr100p0) entered blocking state

May 24 21:17:15 MyServer kernel: vmbr0: port 2(fwpr100p0) entered disabled state

May 24 21:17:15 MyServer kernel: fwpr100p0: entered allmulticast mode

May 24 21:17:15 MyServer kernel: fwpr100p0: entered promiscuous mode

May 24 21:17:15 MyServer kernel: vmbr0: port 2(fwpr100p0) entered blocking state

May 24 21:17:15 MyServer kernel: vmbr0: port 2(fwpr100p0) entered forwarding state

May 24 21:17:15 MyServer kernel: fwbr100i0: port 1(fwln100i0) entered blocking state

May 24 21:17:15 MyServer kernel: fwbr100i0: port 1(fwln100i0) entered disabled state

May 24 21:17:15 MyServer kernel: fwln100i0: entered allmulticast mode

May 24 21:17:15 MyServer kernel: fwln100i0: entered promiscuous mode

May 24 21:17:15 MyServer kernel: fwbr100i0: port 1(fwln100i0) entered blocking state

May 24 21:17:15 MyServer kernel: fwbr100i0: port 1(fwln100i0) entered forwarding state

May 24 21:17:15 MyServer kernel: fwbr100i0: port 2(tap100i0) entered blocking state

May 24 21:17:15 MyServer kernel: fwbr100i0: port 2(tap100i0) entered disabled state

May 24 21:17:15 MyServer kernel: tap100i0: entered allmulticast mode

May 24 21:17:15 MyServer kernel: fwbr100i0: port 2(tap100i0) entered blocking state

May 24 21:17:15 MyServer kernel: fwbr100i0: port 2(tap100i0) entered forwarding state

May 24 21:17:15 MyServer pvedaemon[22128]: <root@pam> end task UPID:MyServer:00006A55:000E6431:6650D9AA:qmstart:100:root@pam: OK

May 24 21:17:15 MyServer pvedaemon[22128]: <root@pam> starting task UPID:MyServer:00006AB5:000E64A3:6650D9AB:vncproxy:100:root@pam:

May 24 21:17:15 MyServer pvedaemon[27317]: starting vnc proxy UPID:MyServer:00006AB5:000E64A3:6650D9AB:vncproxy:100:root@pam:

May 24 21:17:16 MyServer pvedaemon[22128]: <root@pam> end task UPID:MyServer:00006AB5:000E64A3:6650D9AB:vncproxy:100:root@pam: OK

May 24 21:17:22 MyServer pvedaemon[27342]: starting vnc proxy UPID:MyServer:00006ACE:000E673B:6650D9B2:vncproxy:100:root@pam:

May 24 21:17:22 MyServer pvedaemon[25363]: <root@pam> starting task UPID:MyServer:00006ACE:000E673B:6650D9B2:vncproxy:100:root@pam:

May 24 21:17:43 MyServer pveproxy[24716]: worker exit

May 24 21:17:43 MyServer pveproxy[1183]: worker 24716 finished

May 24 21:17:43 MyServer pveproxy[1183]: starting 1 worker(s)

May 24 21:17:43 MyServer pveproxy[1183]: worker 27444 started

May 24 21:18:32 MyServer pveproxy[24175]: worker exit

May 24 21:18:32 MyServer pveproxy[1183]: worker 24175 finished

May 24 21:18:32 MyServer pveproxy[1183]: starting 1 worker(s)

May 24 21:18:32 MyServer pveproxy[1183]: worker 27634 started

May 24 21:23:30 MyServer pveproxy[1183]: worker 25432 finished

May 24 21:23:30 MyServer pveproxy[1183]: starting 1 worker(s)

May 24 21:23:30 MyServer pveproxy[1183]: worker 28631 started

May 24 21:23:31 MyServer pveproxy[28630]: got inotify poll request in wrong process - disabling inotify

May 24 21:26:39 MyServer pvedaemon[24851]: <root@pam> successful auth for user 'root@pam'

May 24 21:33:07 MyServer pveproxy[27444]: worker exit

May 24 21:33:07 MyServer pveproxy[1183]: worker 27444 finished

May 24 21:33:07 MyServer pveproxy[1183]: starting 1 worker(s)

May 24 21:33:07 MyServer pveproxy[1183]: worker 30268 started

May 24 21:38:27 MyServer pveproxy[27634]: worker exit

May 24 21:38:27 MyServer pveproxy[1183]: worker 27634 finished

May 24 21:38:27 MyServer pveproxy[1183]: starting 1 worker(s)

May 24 21:38:27 MyServer pveproxy[1183]: worker 31143 started

May 24 21:40:25 MyServer smartd[805]: Device: /dev/sda [SAT], SMART Usage Attribute: 194 Temperature_Celsius changed from 67 to 65

May 24 21:40:25 MyServer smartd[805]: Device: /dev/sdb [SAT], SMART Usage Attribute: 194 Temperature_Celsius changed from 114 to 110

May 24 21:40:25 MyServer smartd[805]: Device: /dev/sdc [SAT], SMART Usage Attribute: 194 Temperature_Celsius changed from 112 to 109

May 24 21:40:25 MyServer smartd[805]: Device: /dev/sdd [SAT], SMART Usage Attribute: 194 Temperature_Celsius changed from 112 to 109

May 24 21:41:19 MyServer pvedaemon[22128]: <root@pam> starting task UPID:MyServer:00007B3C:001098AB:6650DF4F:vncshell::root@pam:

May 24 21:41:19 MyServer pvedaemon[31548]: starting termproxy UPID:MyServer:00007B3C:001098AB:6650DF4F:vncshell::root@pam:

May 24 21:41:19 MyServer pvedaemon[25363]: <root@pam> successful auth for user 'root@pam'

May 24 21:41:19 MyServer login[31551]: pam_unix(login:session): session opened for user root(uid=0) by root(uid=0)

May 24 21:41:19 MyServer systemd[1]: Created slice user-0.slice - User Slice of UID 0.

May 24 21:41:19 MyServer systemd[1]: Starting user-runtime-dir@0.service - User Runtime Directory /run/user/0...

May 24 21:41:19 MyServer systemd-logind[807]: New session 16 of user root.

May 24 21:41:19 MyServer systemd[1]: Finished user-runtime-dir@0.service - User Runtime Directory /run/user/0.

May 24 21:41:19 MyServer systemd[1]: Starting user@0.service - User Manager for UID 0...

May 24 21:41:19 MyServer (systemd)[31557]: pam_unix(systemd-user:session): session opened for user root(uid=0) by (uid=0)

May 24 21:41:19 MyServer systemd[31557]: Queued start job for default target default.target.

May 24 21:41:19 MyServer systemd[31557]: Created slice app.slice - User Application Slice.

May 24 21:41:19 MyServer systemd[31557]: Reached target paths.target - Paths.

May 24 21:41:19 MyServer systemd[31557]: Reached target timers.target - Timers.

May 24 21:41:19 MyServer systemd[31557]: Listening on dirmngr.socket - GnuPG network certificate management daemon.

May 24 21:41:19 MyServer systemd[31557]: Listening on gpg-agent-browser.socket - GnuPG cryptographic agent and passphrase cache (access for web browsers).

May 24 21:41:19 MyServer systemd[31557]: Listening on gpg-agent-extra.socket - GnuPG cryptographic agent and passphrase cache (restricted).

May 24 21:41:19 MyServer systemd[31557]: Listening on gpg-agent-ssh.socket - GnuPG cryptographic agent (ssh-agent emulation).

May 24 21:41:19 MyServer systemd[31557]: Listening on gpg-agent.socket - GnuPG cryptographic agent and passphrase cache.

May 24 21:41:19 MyServer systemd[31557]: Reached target sockets.target - Sockets.

May 24 21:41:19 MyServer systemd[31557]: Reached target basic.target - Basic System.

May 24 21:41:19 MyServer systemd[31557]: Reached target default.target - Main User Target.

May 24 21:41:19 MyServer systemd[31557]: Startup finished in 146ms.

May 24 21:41:19 MyServer systemd[1]: Started user@0.service - User Manager for UID 0.

May 24 21:41:19 MyServer systemd[1]: Started session-16.scope - Session 16 of User root.

May 24 21:41:19 MyServer login[31572]: ROOT LOGIN on '/dev/pts/0'

May 24 21:41:39 MyServer pvedaemon[22128]: <root@pam> successful auth for user 'root@pam'

May 24 21:43:58 MyServer pveproxy[1183]: worker 30268 finished

May 24 21:43:58 MyServer pveproxy[1183]: starting 1 worker(s)

May 24 21:43:58 MyServer pveproxy[1183]: worker 31944 started

May 24 21:44:02 MyServer pveproxy[31943]: got inotify poll request in wrong process - disabling inotify

May 24 21:46:06 MyServer pveproxy[28631]: worker exit

May 24 21:46:06 MyServer pveproxy[1183]: worker 28631 finished

May 24 21:46:06 MyServer pveproxy[1183]: starting 1 worker(s)

May 24 21:46:06 MyServer pveproxy[1183]: worker 32276 started

May 24 21:46:45 MyServer systemd-logind[807]: Session 16 logged out. Waiting for processes to exit.

May 24 21:46:45 MyServer systemd[1]: session-16.scope: Deactivated successfully.

May 24 21:46:45 MyServer systemd[1]: session-16.scope: Consumed 2min 25.232s CPU time.

May 24 21:46:45 MyServer systemd-logind[807]: Removed session 16.

May 24 21:46:45 MyServer pvedaemon[22128]: <root@pam> end task UPID:MyServer:00007B3C:001098AB:6650DF4F:vncshell::root@pam: OK

May 24 21:46:45 MyServer pveproxy[31943]: worker exit

May 24 21:46:48 MyServer pvedaemon[25363]: <root@pam> end task UPID:MyServer:00006ACE:000E673B:6650D9B2:vncproxy:100:root@pam: OK

May 24 21:46:48 MyServer pveproxy[28630]: worker exit

May 24 21:46:54 MyServer pveproxy[31143]: worker exit

May 24 21:46:54 MyServer pveproxy[1183]: worker 31143 finished

May 24 21:46:54 MyServer pveproxy[1183]: starting 1 worker(s)

May 24 21:46:54 MyServer pveproxy[1183]: worker 33001 started

May 24 21:46:55 MyServer systemd[1]: Stopping user@0.service - User Manager for UID 0...

May 24 21:46:55 MyServer systemd[31557]: Activating special unit exit.target...

May 24 21:46:55 MyServer systemd[31557]: Stopped target default.target - Main User Target.

May 24 21:46:55 MyServer systemd[31557]: Stopped target basic.target - Basic System.

May 24 21:46:55 MyServer systemd[31557]: Stopped target paths.target - Paths.

May 24 21:46:55 MyServer systemd[31557]: Stopped target sockets.target - Sockets.

May 24 21:46:55 MyServer systemd[31557]: Stopped target timers.target - Timers.

May 24 21:46:55 MyServer systemd[31557]: Closed dirmngr.socket - GnuPG network certificate management daemon.

May 24 21:46:55 MyServer systemd[31557]: Closed gpg-agent-browser.socket - GnuPG cryptographic agent and passphrase cache (access for web browsers).

May 24 21:46:55 MyServer systemd[31557]: Closed gpg-agent-extra.socket - GnuPG cryptographic agent and passphrase cache (restricted).

May 24 21:46:55 MyServer systemd[31557]: Closed gpg-agent-ssh.socket - GnuPG cryptographic agent (ssh-agent emulation).

May 24 21:46:55 MyServer systemd[31557]: Closed gpg-agent.socket - GnuPG cryptographic agent and passphrase cache.

May 24 21:46:55 MyServer systemd[31557]: Removed slice app.slice - User Application Slice.

May 24 21:46:55 MyServer systemd[31557]: Reached target shutdown.target - Shutdown.

May 24 21:46:55 MyServer systemd[31557]: Finished systemd-exit.service - Exit the Session.

May 24 21:46:55 MyServer systemd[31557]: Reached target exit.target - Exit the Session.

May 24 21:46:55 MyServer systemd[1]: user@0.service: Deactivated successfully.

May 24 21:46:55 MyServer systemd[1]: Stopped user@0.service - User Manager for UID 0.

May 24 21:46:55 MyServer systemd[1]: Stopping user-runtime-dir@0.service - User Runtime Directory /run/user/0...

May 24 21:46:55 MyServer systemd[1]: run-user-0.mount: Deactivated successfully.

May 24 21:46:55 MyServer systemd[1]: user-runtime-dir@0.service: Deactivated successfully.

May 24 21:46:55 MyServer systemd[1]: Stopped user-runtime-dir@0.service - User Runtime Directory /run/user/0.

May 24 21:46:55 MyServer systemd[1]: Removed slice user-0.slice - User Slice of UID 0.

May 24 21:46:55 MyServer systemd[1]: user-0.slice: Consumed 2min 25.383s CPU time.

May 24 21:56:40 MyServer pvedaemon[25363]: <root@pam> successful auth for user 'root@pam'
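To double-check that the three passthrough paths in the `qm config 100` output still point at real host disks (the syslog shows partition rescans of sdb, sdc and sdd when the VM starts, presumably the three HDDs), here is a small read-only sketch, assuming bash and GNU `readlink`. `check_passthrough_disks` is a hypothetical name, not a Proxmox command.

```shell
#!/usr/bin/env bash
# Hypothetical, read-only helper: reads `qm config` output on stdin and, for
# every scsiN disk given as a /dev/disk/by-id path, prints the device it
# resolves to on the host, or MISSING if the path does not exist.
# Blank lines and all other config keys are skipped.
check_passthrough_disks() {
  local line slot rest path
  while IFS= read -r line || [ -n "$line" ]; do
    case "$line" in
      scsi[0-9]*': /dev/disk/by-id/'*)
        slot="${line%%:*}"      # e.g. scsi1
        rest="${line#*: }"      # path plus options such as ,size=...
        path="${rest%%,*}"      # drop the options after the first comma
        if [ -e "$path" ]; then
          printf '%s %s -> %s\n' "$slot" "$path" "$(readlink -f "$path")"
        else
          printf '%s %s -> MISSING\n' "$slot" "$path"
        fi
        ;;
    esac
  done
}
```

On the Proxmox host this would be run as `qm config 100 | check_passthrough_disks`. It only reads the config and the /dev tree; it does not change the VM.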