Daz,

I'm also building a pbs server with the same drives, and I'd like to add a third option to your query. But first, a description of my main objective: I want restores to the pve hosts to saturate the 10Gbps network links (100% utilization). Since a single SATA interface has an effective throughput of approx. 5Gbps (for SSDs such as the DC600M), you need to combine the bandwidth of at least 2 drives to reach 10Gbps. How you organize those drives (parity raid, mirror, stripe...) is the next logical question. Contrary to common wisdom, I chose a stripe (raid0). This is a homelab and my resources are limited. Backups are very important to me, but they are not required to run the "production" load.

To mitigate the eventual failure of the stripe, after each backup window the pbs datastore residing on the stripe is synced to a removable datastore (an external SATA HDD). The pbs server is powered on only a few hours every week, and most of that time is spent syncing the just-finished backup to the external drive. I have 2 external drives, which are rotated offsite.
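The drive-count reasoning above can be written out as a quick calculation. This is only a sketch: it assumes throughput adds up linearly across striped drives, which real hardware will not quite deliver, and the function name `drives_needed` is just an illustrative helper.

```python
import math


def drives_needed(link_gbps: float, per_drive_gbps: float) -> int:
    """Smallest number of striped drives whose summed throughput meets the link.

    Assumes ideal linear scaling across the stripe (an optimistic assumption).
    """
    return math.ceil(link_gbps / per_drive_gbps)


LINK_GBPS = 10.0     # network link speed quoted in the post
SATA_SSD_GBPS = 5.0  # approximate effective SATA throughput quoted in the post

print(drives_needed(LINK_GBPS, SATA_SSD_GBPS))
```

With any per-drive figure slightly below 5Gbps, the same formula already asks for a third drive, which leaves some headroom over the bare minimum of two.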

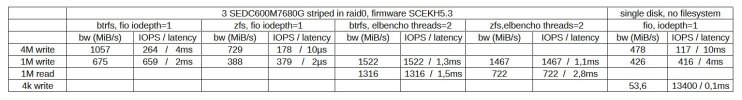

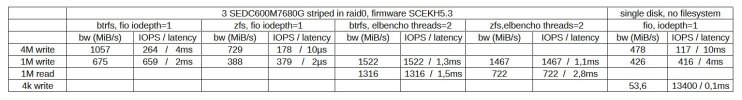

Now, back to the original question. Hardware raid has been out of scope for me from the start: the pbs "server" (a desktop tower, i7-10700 with a dual-port 10Gbps nic) has no PCI slot available. As for software raid options with pbs, I benchmarked zfs and btrfs. btrfs consumes less cpu and much less RAM than zfs. It also came out on top for effective throughput. I tried to tweak the benchmark to account for the very specific load of pbs: a continuous stream of files, with sizes between 1MB and 4MB. Here are the results for a stripe of three DC600M 7.6TB:

I can provide the detailed configuration of the setup and also the exact commands used for the benchmark. My hypothesis: zfs is hindered by its cache and all the associated overhead when handling a continuous stream of files that are referenced only once during a job.

I copied a .chunks directory over the network to confirm the benchmark results. To rule out the impact of encryption, I used rsync. The pbs server was configured with the rsync daemon, and the pve host pulled the .chunks directory from pbs, writing it to the VM datastore (a pair of mirrored PCIe 4.0 NVMe drives). This flow mimics a restore from the network and storage points of view. With a single rsync task, the observed throughput was:

tlsx3008f-1#show system-info int te 1/0/2

Port RX Utilization - 0.18%

Port TX Utilization - 77.39%

tlsx3008f-1#show system-info int te 1/0/2

Port RX Utilization - 0.18%

Port TX Utilization - 75.87%

tlsx3008f-1#show system-info int te 1/0/2

Port RX Utilization - 0.15%

Port TX Utilization - 74.68%

tlsx3008f-1#show system-info int te 1/0/2

Port RX Utilization - 0.19%

Port TX Utilization - 77.43%

tlsx3008f-1#show system-info int te 1/0/2

Port RX Utilization - 0.18%

Port TX Utilization - 76.52%
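For anyone who wants the utilization samples above as throughput figures, here is a small sketch that converts them. It assumes the percentages are relative to the nominal 10Gbps line rate of the port; the `tx_gbps` helper is just an illustrative name.

```python
import re

LINK_GBPS = 10.0  # assumption: utilization is a percentage of the 10Gbps line rate

# TX lines copied from the switch output above
SAMPLES = """\
Port TX Utilization - 77.39%
Port TX Utilization - 75.87%
Port TX Utilization - 74.68%
Port TX Utilization - 77.43%
Port TX Utilization - 76.52%
"""


def tx_gbps(text: str, link_gbps: float = LINK_GBPS) -> list[float]:
    """Extract every TX utilization percentage and convert it to Gbps."""
    percents = [float(p) for p in re.findall(r"Port TX Utilization - ([\d.]+)%", text)]
    return [round(p / 100 * link_gbps, 2) for p in percents]


rates = tx_gbps(SAMPLES)
print(f"min {min(rates):.2f} Gbps, max {max(rates):.2f} Gbps")
```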

Note that the throughput isn't perfectly steady in this sample, varying between 7.47Gbps and 7.74Gbps. I then started two simultaneous instances of rsync, the first copying chunks from 0000 to 7fff and the second copying chunks from 8000 to ffff, which saturated the link:

tlsx3008f-1#show system-info int te 1/0/2

Port RX Utilization - 0.77%

Port TX Utilization - 99.94%

tlsx3008f-1#show system-info int te 1/0/2

Port RX Utilization - 1.02%

Port TX Utilization - 99.92%

tlsx3008f-1#show system-info int te 1/0/2

Port RX Utilization - 0.73%

Port TX Utilization - 99.97%

tlsx3008f-1#show system-info int te 1/0/2

Port RX Utilization - 0.89%

Port TX Utilization - 99.94%

tlsx3008f-1#show system-info int te 1/0/2

Port RX Utilization - 0.80%

Port TX Utilization - 100.00%
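The two-way split of the chunk directories (0000 to 7fff, and 8000 to ffff) generalizes to any number of parallel copy tasks. A minimal sketch, assuming the .chunks subdirectories are named with the 4-hex-digit prefixes 0000 through ffff; `split_prefix_ranges` is a hypothetical helper, and how each range is then handed to a copy tool is left out on purpose:

```python
def split_prefix_ranges(parts: int, total: int = 0x10000) -> list[tuple[str, str]]:
    """Split the prefix space 0000..ffff into `parts` contiguous (first, last) ranges."""
    if not 1 <= parts <= total:
        raise ValueError("parts must be between 1 and the number of prefixes")
    step, remainder = divmod(total, parts)
    ranges = []
    start = 0
    for i in range(parts):
        # Spread any remainder over the first ranges so sizes differ by at most one
        size = step + (1 if i < remainder else 0)
        end = start + size - 1
        ranges.append((f"{start:04x}", f"{end:04x}"))
        start = end + 1
    return ranges


for first, last in split_prefix_ranges(2):
    print(f"task copies chunk directories {first} to {last}")
```

Calling `split_prefix_ranges(2)` reproduces the two ranges used in the test above; a larger `parts` value would give one range per additional parallel task.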

Next steps: finish customizing the pbs server, then rebuild the two pve hosts, upgrading them from 8.1 to 8.3. Again, if you have any questions about my setup, please don't hesitate to ask.

François