Can you provide us with the output of the following commands?

cat /etc/pve/storage.cfg

qm config <VMID>

pveversion -v
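
If it is easier, the three outputs can be gathered into a single file to attach. The snippet below is only a sketch: the function name `collect_pve_info` and the default file name `pve-info.txt` are made up for illustration, and it assumes a Bourne-style shell (bash or sh) run as root or via sudo on the node that hosts the VM.

```shell
# Hypothetical helper: runs the three requested commands and writes each
# command's output under its own "### <command>" header in one file.
# Usage: collect_pve_info <VMID> [output-file]
collect_pve_info() {
    vmid="$1"
    out="${2:-pve-info.txt}"

    # Start with an empty output file.
    : > "$out"

    for cmd in "cat /etc/pve/storage.cfg" "qm config $vmid" "pveversion -v"; do
        printf '### %s\n' "$cmd" >> "$out"
        # $cmd is left unquoted on purpose so the shell splits it into
        # the command name and its arguments.
        $cmd >> "$out" 2>&1 || printf '(command failed)\n' >> "$out"
        printf '\n' >> "$out"
    done
}
```

For example, `collect_pve_info 274` would write everything to `pve-info.txt` in the current directory. A command that is missing or fails is noted in the file instead of stopping the run.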

Done

sudo cat /etc/pve/storage.cfg

dir: local-ssd

path /mnt/pve/local-ssd

content iso,backup,rootdir,snippets,vztmpl,images

is_mountpoint 1

nodes pve-88240,pve-88239,pve-88247

shared 0

sudo qm config 274

agent: 1

boot: order=scsi0;net0;ide1

cores: 2

ide1: local-ssd:274/vm-274-cloudinit.raw,media=cdrom,size=4M

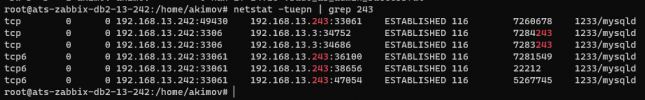

ipconfig0: ip=192.168.13.243/23,gw=192.168.12.1

memory: 12288

meta: creation-qemu=6.2.0,ctime=1652695615

name: ats-zabbix-db3-13-243-local

net0: virtio=32:1D:23:55:76:55,bridge=vmbr0,rate=50

numa: 0

ostype: l26

parent: autodaily220818001941

scsi0: local-ssd:274/vm-274-disk-0.qcow2,cache=directsync,size=10G

scsi1: local-ssd:274/vm-274-disk-1.qcow2,cache=directsync,size=250G

scsihw: virtio-scsi-pci

smbios1: uuid=af5a8aac-552f-45bf-a367-1e18f767435e

sockets: 2

vmgenid: d4b73ae0-4a72-4d90-88ab-6fcfeeb0885d

sudo pveversion -v

proxmox-ve: 7.2-1 (running kernel: 5.15.35-2-pve)

pve-manager: 7.2-4 (running version: 7.2-4/ca9d43cc)

pve-kernel-5.15: 7.2-4

pve-kernel-helper: 7.2-4

pve-kernel-5.13: 7.1-9

pve-kernel-5.11: 7.0-10

pve-kernel-5.15.35-2-pve: 5.15.35-5

pve-kernel-5.13.19-6-pve: 5.13.19-15

pve-kernel-5.13.19-1-pve: 5.13.19-3

pve-kernel-5.11.22-7-pve: 5.11.22-12

pve-kernel-5.11.22-4-pve: 5.11.22-9

ceph-fuse: 15.2.16-pve1

corosync: 3.1.5-pve2

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve1

libproxmox-acme-perl: 1.4.2

libproxmox-backup-qemu0: 1.3.1-1

libpve-access-control: 7.2-2

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.2-2

libpve-guest-common-perl: 4.1-2

libpve-http-server-perl: 4.1-2

libpve-storage-perl: 7.2-4

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 4.0.12-1

lxcfs: 4.0.12-pve1

novnc-pve: 1.3.0-3

proxmox-backup-client: 2.2.3-1

proxmox-backup-file-restore: 2.2.3-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.5.1

pve-cluster: 7.2-1

pve-container: 4.2-1

pve-docs: 7.2-2

pve-edk2-firmware: 3.20210831-2

pve-firewall: 4.2-5

pve-firmware: 3.4-2

pve-ha-manager: 3.3-4

pve-i18n: 2.7-2

pve-qemu-kvm: 6.2.0-10

pve-xtermjs: 4.16.0-1

qemu-server: 7.2-3

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.7.1~bpo11+1

vncterm: 1.7-1

zfsutils-linux: 2.1.4-pve1