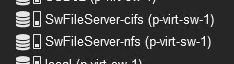

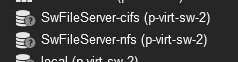

Hi, I am using a cluster of four PVE 8.2.4 machines. One of the VMs serves a CIFS share that is mounted as an "SMB/CIFS" storage.

That normally works well, but now the storage VM has failed, and this has led to unstable behavior in the PVE UI.

I see the typical "grey question mark" problem and the VM names are not resolved.

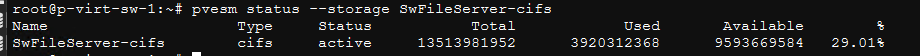

I also can't access any of the PVE storages; I just receive a "Connection timed out (596)" error.

I tried to disable and re-enable the CIFS mount under "Datacenter" -> "Storage". The network drive disappears and reappears under the different nodes, but access is still not possible.

If possible, I really don't want to reboot my nodes. Hopefully something just needs to be restarted to get it up and running again.

Here is an overview of the services on one of the nodes:

```bash
root@p-virt-sw-3:~# systemctl status
● p-virt-sw-3
    State: running
    Units: 596 loaded (incl. loaded aliases)
     Jobs: 0 queued
   Failed: 0 units
    Since: Tue 2024-09-10 15:49:09 CEST; 4 weeks 1 day ago
  systemd: 252.30-1~deb12u2
   CGroup: /
           ├─init.scope
           │ └─1 /sbin/init
           ├─qemu.slice
           │ ├─1027.scope
           │ │ └─811359 /usr/bin/kvm -id 1027 -name SwarmIomProc-Win7,debug-threads=on -no-shutdown -chardev socket,id=qmp,path=/var/run/qemu-server/1027.qmp,server=on,wait=off -mon chardev=qmp,mode=control -chardev socket,id=qmp-event,path=/var/run/qmev>
           │ ├─2003.scope
           │ │ ├─6327 swtpm socket --tpmstate backend-uri=file:///dev/zvol/ssd2-nvme-zfs/vm-2003-disk-1,mode=0600 --ctrl type=unixio,path=/var/run/qemu-server/2003.swtpm,mode=0600 --pid file=/var/run/qemu-server/2003.swtpm.pid --terminate --daemon --log >
           │ │ └─6334 /usr/bin/kvm -id 2003 -name SwDevZes-Win10,debug-threads=on -no-shutdown -chardev socket,id=qmp,path=/var/run/qemu-server/2003.qmp,server=on,wait=off -mon chardev=qmp,mode=control -chardev socket,id=qmp-event,path=/var/run/qmeventd.>
           │ ├─2008.scope
           │ │ ├─6571 swtpm socket --tpmstate backend-uri=file:///dev/zvol/rpool/vm-2008-disk-1,mode=0600 --ctrl type=unixio,path=/var/run/qemu-server/2008.swtpm,mode=0600 --pid file=/var/run/qemu-server/2008.swtpm.pid --terminate --daemon --log "file=/r>
           │ │ └─6578 /usr/bin/kvm -id 2008 -name SwDevJel-Win10,debug-threads=on -no-shutdown -chardev socket,id=qmp,path=/var/run/qemu-server/2008.qmp,server=on,wait=off -mon chardev=qmp,mode=control -chardev socket,id=qmp-event,path=/var/run/qmeventd.>
           │ ├─2010.scope
           │ │ ├─6980 swtpm socket --tpmstate backend-uri=file:///dev/zvol/rpool/vm-2010-disk-1,mode=0600 --ctrl type=unixio,path=/var/run/qemu-server/2010.swtpm,mode=0600 --pid file=/var/run/qemu-server/2010.swtpm.pid --terminate --daemon --log "file=/r>
           │ │ └─6986 /usr/bin/kvm -id 2010 -name SwDevBoc-Win10,debug-threads=on -no-shutdown -chardev socket,id=qmp,path=/var/run/qemu-server/2010.qmp,server=on,wait=off -mon chardev=qmp,mode=control -chardev socket,id=qmp-event,path=/var/run/qmeventd.>
           │ └─2027.scope
           │   └─37935 /usr/bin/kvm -id 2027 -name SwarmIomLive-Win7,debug-threads=on -no-shutdown -chardev socket,id=qmp,path=/var/run/qemu-server/2027.qmp,server=on,wait=off -mon chardev=qmp,mode=control -chardev socket,id=qmp-event,path=/var/run/qmeve>
           ├─system.slice
           │ ├─chrony.service
           │ │ ├─3225 /usr/sbin/chronyd -F 1
           │ │ └─3234 /usr/sbin/chronyd -F 1
           │ ├─corosync.service
           │ │ └─3377 /usr/sbin/corosync -f
           │ ├─cron.service
           │ │ └─3379 /usr/sbin/cron -f
           │ ├─dbus.service
           │ │ └─2941 /usr/bin/dbus-daemon --system --address=systemd: --nofork --nopidfile --systemd-activation --syslog-only
           │ ├─ksmtuned.service
           │ │ ├─   2950 /bin/bash /usr/sbin/ksmtuned
           │ │ └─1430211 sleep 60
           │ ├─lxc-monitord.service
           │ │ └─3171 /usr/libexec/lxc/lxc-monitord --daemon
           │ ├─lxcfs.service
           │ │ └─2966 /usr/bin/lxcfs /var/lib/lxcfs
           │ ├─proxmox-firewall.service
           │ │ └─3380 /usr/libexec/proxmox/proxmox-firewall
           │ ├─pve-cluster.service
           │ │ └─3294 /usr/bin/pmxcfs
           │ ├─pve-firewall.service
           │ │ └─3479 pve-firewall
           │ ├─pve-ha-crm.service
           │ │ └─3519 pve-ha-crm
           │ ├─pve-ha-lrm.service
           │ │ └─3538 pve-ha-lrm
           │ ├─pve-lxc-syscalld.service
           │ │ └─2947 /usr/lib/x86_64-linux-gnu/pve-lxc-syscalld/pve-lxc-syscalld --system /run/pve/lxc-syscalld.sock
           │ ├─pvedaemon.service
           │ │ ├─   3507 pvedaemon
           │ │ ├─ 463060 "pvedaemon worker"
           │ │ ├─ 470732 "pvedaemon worker"
           │ │ ├─ 488150 "pvedaemon worker"
           │ │ ├─1399185 "pvedaemon worker"
           │ │ ├─1399248 "pvedaemon worker"
           │ │ └─1399262 "pvedaemon worker"
           │ ├─pvefw-logger.service
           │ │ └─1059595 /usr/sbin/pvefw-logger
           │ ├─pveproxy.service
           │ │ ├─   3530 pveproxy
           │ │ ├─1059599 "pveproxy worker"
           │ │ ├─1059600 "pveproxy worker"
           │ │ └─1059601 "pveproxy worker"
           │ ├─pvescheduler.service
           │ │ └─7496 pvescheduler
           │ ├─pvestatd.service
           │ │ ├─   3491 pvestatd
           │ │ └─1394818 pvestatd
           │ ├─qmeventd.service
           │ │ └─2953 /usr/sbin/qmeventd /var/run/qmeventd.sock
           │ ├─rpc-statd.service
           │ │ └─3712 /sbin/rpc.statd
           │ ├─rpcbind.service
           │ │ └─2926 /sbin/rpcbind -f -w
           │ ├─rrdcached.service
           │ │ └─3268 /usr/bin/rrdcached -B -b /var/lib/rrdcached/db/ -j /var/lib/rrdcached/journal/ -p /var/run/rrdcached.pid -l unix:/var/run/rrdcached.sock
           │ ├─smartmontools.service
           │ │ └─2952 /usr/sbin/smartd -n -q never
           │ ├─spiceproxy.service
           │ │ ├─   3536 spiceproxy
           │ │ └─1059593 "spiceproxy worker"
           │ ├─ssh.service
           │ │ └─3192 "sshd: /usr/sbin/sshd -D [listener] 0 of 10-100 startups"
           │ ├─system-getty.slice
           │ │ └─getty@tty1.service
           │ │   └─3188 /sbin/agetty -o "-p -- \\u" --noclear - linux
           │ ├─system-postfix.slice
           │ │ └─postfix@-.service
           │ │   ├─   3370 /usr/lib/postfix/sbin/master -w
           │ │   ├─   3372 qmgr -l -t unix -u
           │ │   └─1401054 pickup -l -t unix -u -c
           │ ├─systemd-journald.service
           │ │ └─1470 /lib/systemd/systemd-journald
           │ ├─systemd-logind.service
           │ │ └─2956 /lib/systemd/systemd-logind
           │ ├─systemd-udevd.service
           │ │ └─udev
           │ │   └─1491 /lib/systemd/systemd-udevd
           │ ├─watchdog-mux.service
           │ │ └─2957 /usr/sbin/watchdog-mux
           │ └─zfs-zed.service
           │   └─2962 /usr/sbin/zed -F
           └─user.slice
             └─user-0.slice
               ├─session-16.scope
               │ └─23349 /usr/bin/kvm -id 1036 -name FpgaLibero-Ubuntu,debug-threads=on -no-shutdown -chardev socket,id=qmp,path=/var/run/qemu-server/1036.qmp,server=on,wait=off -mon chardev=qmp,mode=control -chardev socket,id=qmp-event,path=/var/run/qme>
               ├─session-829.scope
               │ ├─1408393 "sshd: root@pts/0"
               │ ├─1408400 /bin/login -f
               │ ├─1408405 -bash
               │ ├─1430303 systemctl status
               │ └─1430304 pager
               └─user@0.service
                 └─init.scope
                   ├─22358 /lib/systemd/systemd --user
                   └─22359 "(sd-pam)"
```

I'm not sure how to look through the logs; the "System Log" tab is empty in the UI.

I hope someone can help without the need for a reboot.

Thanks.