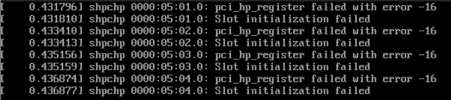

TL;DR: I added "intel_iommu=on" to the GRUB config on an AMD server, ran update-grub, and rebooted the host. Then I realized that was wrong, so I edited the GRUB config again to use "amd_iommu=on", ran update-grub, and rebooted again. I completed the rest of the steps, but now I get a black screen: I can tell the monitor is on (backlit), but nothing is displayed.

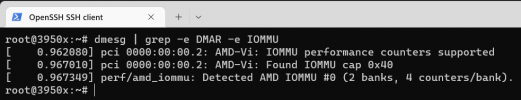

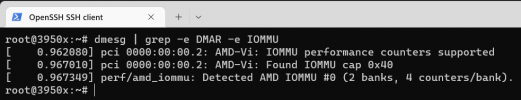

Output from dmesg | grep -e DMAR -e IOMMU

(I don't see anything like "DMAR: IOMMU enabled" as mentioned in the wiki, and I'm worried the wrong intel_iommu setting caused some damage here, although I fixed it later.)
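To rule out a leftover "intel_iommu=on", one option is to check which IOMMU-related parameters the running kernel actually booted with. The following is only a rough sketch: the helper name `iommu_params` is made up for illustration, and it assumes the kernel command line is readable at /proc/cmdline, as on a typical Linux host.

```shell
#!/bin/sh
# Hypothetical helper (not part of any Proxmox tooling): list the
# IOMMU-related parameters found on a kernel command line.
# With no argument, it reads the running kernel's command line.
iommu_params() {
    cmdline="${1:-$(cat /proc/cmdline)}"
    found=""
    # Split the command line on whitespace and keep matching words.
    for word in $cmdline; do
        case "$word" in
            intel_iommu=*|amd_iommu=*|iommu=*) found="$found $word" ;;
        esac
    done
    # Drop the leading space; print "none" if nothing matched.
    found="${found# }"
    echo "${found:-none}"
}
```

Running `iommu_params` with no argument after a reboot should show whether the old parameter is still present. Also, on AMD hosts the relevant kernel messages are usually prefixed "AMD-Vi" rather than "DMAR", so `dmesg | grep -e DMAR -e IOMMU -e AMD-Vi` may be a more useful filter here.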

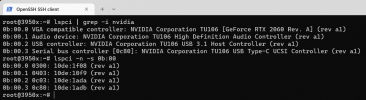

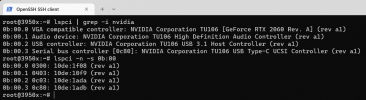

PCI information

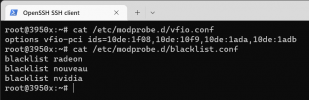

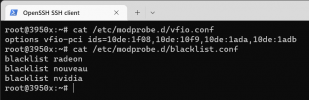

vfio and blocked drivers

VM configuration

Is there anything obviously wrong? How can I troubleshoot further? Thanks!!

