Is there a way to force a specific PCIe port or device down to a lower link speed at boot? (I can't seem to change it for this port in the BIOS.)

Or is there some other way to solve these PCIe device errors?

I have a machine with an M.2 2242 slot that supports PCIe 3.0 x4, and a riser adapter with a 25 cm cable that converts the M.2 slot to a PCIe x4 slot. I have used this riser in another PC (based on a Xeon D-1518, running Proxmox 8.4) with great results, with an Intel 10 Gb adapter (X540-AT2, using only one port).

The new system, an ASRock Rack workstation motherboard based on the C246 chipset with a fresh installation of Proxmox 9, is giving me trouble.
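Since the slot, the riser and each card may support different PCIe generations and widths, one way to see what the link actually trained to on the new board is to compare the capability (`LnkCap`) and status (`LnkSta`) lines printed by `lspci -vv`. The sketch below is a small filter over that output; it assumes the usual pciutils line format and that `lspci` is run as root (otherwise the capability registers are not shown). The function name `link_summary` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: summarize link capability vs. negotiated link status from
# `lspci -vv` output read on stdin. Assumes pciutils' usual
# "LnkCap: ... Speed X, Width xN" / "LnkSta: Speed X, Width xN" lines.
link_summary() {
    awk '
        # Return the text after the first `skip` characters of a regex match,
        # or "?" when the line has no such field.
        function field(re, skip) {
            if (match($0, re)) return substr($0, RSTART + skip, RLENGTH - skip)
            return "?"
        }
        # "LnkCap:" does not match "LnkCap2:", so only the main lines are used.
        /LnkCap:/ { cs = field("Speed [^,]+", 6); cw = field("Width x[0-9]+", 6) }
        /LnkSta:/ { ss = field("Speed [^,]+", 6); sw = field("Width x[0-9]+", 6) }
        END { printf "cap=%s,%s sta=%s,%s\n", cs, cw, ss, sw }
    '
}
```

For example, `lspci -vv -s 05:00.0 | link_summary` for the NIC and `lspci -vv -s 00:1d.0 | link_summary` for the root port that reports the AER messages. Newer pciutils versions may append a note such as `(downgraded)` to the status speed; the filter passes it through unchanged.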

With the NIC in the M.2 riser adapter, AER reports correctable errors from it (most of them reported as "Multiple Correctable" errors). While the X540 is idle, there are only a few errors. But when I run a network test (iperf3) from a VM to another machine through the Proxmox bridge (vmbr1) attached to this NIC, the console and dmesg log fill with these errors, even while throughput is good. Short tests seem to run fine, but longer runs break the interface completely: after 40 seconds of iperf3 (TCP, sending at 9+ Gbps), the NIC enters a reset loop.
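Counting errors from dmesg is imprecise, since a single "Multiple Correctable" message can cover more than one error. Recent kernels also keep cumulative per-device AER counters in sysfs (the `aer_dev_correctable` file, which ends with a `TOTAL_ERR_COR` line), so an error rate can be sampled directly, for example idle versus during an iperf3 run. A minimal sketch, assuming that sysfs file is present on the Proxmox 9 kernel; the function names and the `SYSFS_PCI` override are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: sample the cumulative AER correctable-error counter that the kernel
# keeps per PCI function in sysfs. SYSFS_PCI is a hypothetical override that
# lets the functions be pointed at a test directory instead of the real sysfs.
SYSFS_PCI="${SYSFS_PCI:-/sys/bus/pci/devices}"

# Print the TOTAL_ERR_COR value for one function, e.g. 0000:05:00.0.
# Fails if the file is missing or has no TOTAL_ERR_COR line.
aer_total_cor() {
    awk '$1 == "TOTAL_ERR_COR" { print $2; found = 1 } END { exit !found }' \
        "$SYSFS_PCI/$1/aer_dev_correctable"
}

# Sample the counter twice, $2 seconds apart; print whole errors per second.
aer_cor_rate() {
    local before after
    before=$(aer_total_cor "$1") || return 1
    sleep "$2"
    after=$(aer_total_cor "$1") || return 1
    echo $(( (after - before) / $2 ))
}
```

For example, `aer_cor_rate 0000:05:00.0 10` prints the average number of correctable errors per second over ten seconds (rounded down by integer division).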

ASPM shows as disabled for this port even without any kernel command-line changes, and adding `pci=nommconf pcie_aspm=off` to the kernel command line made no difference.
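As for forcing a lower speed without a BIOS option: one approach sometimes used on Linux is to lower the Target Link Speed field (bits 3:0 of the PCI Express Link Control 2 register) on the downstream port, here the root port 00:1d.0 that reports the AER messages, and then set the Retrain Link bit (bit 5) in its Link Control register. The sketch below does this with pciutils' `setpci`; it is untested on this board, requires root, and the function name `pcie_set_target_speed` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch (untested on this board): ask a PCIe downstream port to retrain its
# link at a lower speed. Requires root and pciutils' setpci. In the PCI Express
# capability (CAP_EXP), offset 0x30 is Link Control 2 and offset 0x10 is
# Link Control.

# $1 = downstream port address (e.g. 00:1d.0)
# $2 = 1 (2.5 GT/s), 2 (5 GT/s) or 3 (8 GT/s)
pcie_set_target_speed() {
    local dev="$1" gen="$2"
    case "$gen" in
        1|2|3) ;;
        *) echo "generation must be 1, 2 or 3" >&2; return 1 ;;
    esac
    # Target Link Speed is bits [3:0] of Link Control 2; the value:mask form
    # makes setpci rewrite only the bits set in the mask.
    setpci -s "$dev" CAP_EXP+30.w="$gen":000f || return 1
    # Retrain Link is bit 5 of Link Control (meaningful on downstream ports).
    setpci -s "$dev" CAP_EXP+10.w=0020:0020 || return 1
}
```

For example, `pcie_set_target_speed 00:1d.0 2` followed by `lspci -vv -s 05:00.0 | grep LnkSta:` to check the new speed. The setting is not saved anywhere, so applying it "at boot" would mean re-running it on every startup (for example from a oneshot systemd service), ideally before the bridge comes up.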

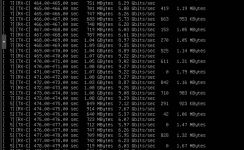


Errors during the iperf3 transfer:

```text
[ 1600.726157] pcieport 0000:00:1d.0: AER: Multiple Correctable error message received from 0000:05:00.1
[ 1600.726832] pcieport 0000:00:1d.0: PCIe Bus Error: severity=Correctable, type=Data Link Layer, (Transmitter ID)
[ 1600.727491] pcieport 0000:00:1d.0: device [8086:a330] error status/mask=00001000/00002000
[ 1600.728160] pcieport 0000:00:1d.0: [12] Timeout
[ 1600.728819] ixgbe 0000:05:00.0: PCIe Bus Error: severity=Correctable, type=Physical Layer, (Transmitter ID)
[ 1600.729476] ixgbe 0000:05:00.0: device [8086:1528] error status/mask=000010c1/00002000
[ 1600.730134] ixgbe 0000:05:00.0: [ 0] RxErr (First)
[ 1600.730788] ixgbe 0000:05:00.0: [ 6] BadTLP
[ 1600.731432] ixgbe 0000:05:00.0: [ 7] BadDLLP
[ 1600.732074] ixgbe 0000:05:00.0: [12] Timeout
[ 1600.732717] ixgbe 0000:05:00.1: PCIe Bus Error: severity=Correctable, type=Physical Layer, (Transmitter ID)
[ 1600.733370] ixgbe 0000:05:00.1: device [8086:1528] error status/mask=000010c1/00002000
[ 1600.734012] ixgbe 0000:05:00.1: [ 0] RxErr (First)
[ 1600.734652] ixgbe 0000:05:00.1: [ 6] BadTLP
[ 1600.735295] ixgbe 0000:05:00.1: [ 7] BadDLLP
[ 1600.735933] ixgbe 0000:05:00.1: [12] Timeout
[ 1600.736577] ixgbe 0000:05:00.1: AER: Error of this Agent is reported first
```

After a 40-second continuous run of iperf3, the NIC resets and enters a loop of resets. No further network activity is possible: my VM can no longer run iperf3 or ping my remote server.

Here is the first reset; after that, the resets repeat every 5 to 20 seconds. Connectivity never comes back, even when the driver reports the link as up.

```text
[ 1604.914955] pcieport 0000:00:1d.0: AER: Multiple Correctable error message received from 0000:05:00.1
[ 1604.915623] pcieport 0000:00:1d.0: PCIe Bus Error: severity=Correctable, type=Data Link Layer, (Transmitter ID)
[ 1604.916267] pcieport 0000:00:1d.0: device [8086:a330] error status/mask=00001000/00002000
[ 1604.916914] pcieport 0000:00:1d.0: [12] Timeout
[ 1604.917554] ixgbe 0000:05:00.0: PCIe Bus Error: severity=Correctable, type=Physical Layer, (Transmitter ID)
[ 1604.918196] ixgbe 0000:05:00.0: device [8086:1528] error status/mask=000030c1/00002000
[ 1604.918838] ixgbe 0000:05:00.0: [ 0] RxErr (First)
[ 1604.919477] ixgbe 0000:05:00.0: [ 6] BadTLP
[ 1604.920109] ixgbe 0000:05:00.0: [ 7] BadDLLP
[ 1604.920733] ixgbe 0000:05:00.0: [12] Timeout
[ 1604.921375] ixgbe 0000:05:00.1: PCIe Bus Error: severity=Correctable, type=Physical Layer, (Transmitter ID)
[ 1604.922008] ixgbe 0000:05:00.1: device [8086:1528] error status/mask=000010c1/00002000
[ 1604.922643] ixgbe 0000:05:00.1: [ 0] RxErr (First)
[ 1604.923275] ixgbe 0000:05:00.1: [ 6] BadTLP
[ 1604.923899] ixgbe 0000:05:00.1: [ 7] BadDLLP
[ 1604.924523] ixgbe 0000:05:00.1: [12] Timeout
[ 1604.925145] ixgbe 0000:05:00.1: AER: Error of this Agent is reported first
[ 1610.702615] ixgbe 0000:05:00.0 enp5s0f0: NETDEV WATCHDOG: CPU: 1: transmit queue 11 timed out 5788 ms
[ 1610.703255] ixgbe 0000:05:00.0 enp5s0f0: initiating reset due to tx timeout
[ 1610.703922] ixgbe 0000:05:00.0 enp5s0f0: Reset adapter
[ 1610.738230] ixgbe 0000:05:00.0 enp5s0f0: TXDCTL.ENABLE for one or more queues not cleared within the polling period
[ 1610.915903] ixgbe 0000:05:00.0: primary disable timed out
[ 1611.117242] vmbr1: port 1(enp5s0f0) entered disabled state
[ 1615.727657] ixgbe 0000:05:00.0 enp5s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[ 1615.728353] vmbr1: port 1(enp5s0f0) entered blocking state
[ 1615.728994] vmbr1: port 1(enp5s0f0) entered forwarding state
[ 1621.390419] ixgbe 0000:05:00.0 enp5s0f0: Detected Tx Unit Hang
  Tx Queue <11>
  TDH, TDT <0>, <2>
  next_to_use <2>
  next_to_clean <0>
tx_buffer_info[next_to_clean]
  time_stamp <100141bac>
  jiffies <1001429c0>
[ 1621.395268] ixgbe 0000:05:00.0 enp5s0f0: tx hang 2 detected on queue 11, resetting adapter
[ 1621.395876] ixgbe 0000:05:00.0 enp5s0f0: initiating reset due to tx timeout
[ 1621.396531] ixgbe 0000:05:00.0 enp5s0f0: Reset adapter
[ 1621.430844] ixgbe 0000:05:00.0 enp5s0f0: RXDCTL.ENABLE for one or more queues not cleared within the polling period
[ 1621.464912] ixgbe 0000:05:00.0 enp5s0f0: TXDCTL.ENABLE for one or more queues not cleared within the polling period
[ 1621.642822] ixgbe 0000:05:00.0: primary disable timed out
[ 1621.848719] vmbr1: port 1(enp5s0f0) entered disabled state
[ 1626.457105] ixgbe 0000:05:00.0 enp5s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[ 1626.457772] vmbr1: port 1(enp5s0f0) entered blocking state
[ 1626.458396] vmbr1: port 1(enp5s0f0) entered forwarding state
[ 1630.094255] ixgbe 0000:05:00.0 enp5s0f0: Detected Tx Unit Hang
  Tx Queue <5>
  TDH, TDT <0>, <2>
  next_to_use <2>
  next_to_clean <0>
tx_buffer_info[next_to_clean]
  time_stamp <1001440a3>
  jiffies <100144bc0>
[ 1630.094255] ixgbe 0000:05:00.0 enp5s0f0: Detected Tx Unit Hang
  Tx Queue <11>
  TDH, TDT <0>, <2>
  next_to_use <2>
  next_to_clean <0>
tx_buffer_info[next_to_clean]
  time_stamp <100143e3d>
  jiffies <100144bc0>
[ 1630.094265] ixgbe 0000:05:00.0 enp5s0f0: tx hang 3 detected on queue 11, resetting adapter
[ 1630.099224] ixgbe 0000:05:00.0 enp5s0f0: tx hang 3 detected on queue 5, resetting adapter
[ 1630.103892] ixgbe 0000:05:00.0 enp5s0f0: initiating reset due to tx timeout
[ 1630.104472] ixgbe 0000:05:00.0 enp5s0f0: initiating reset due to tx timeout
[ 1630.105056] ixgbe 0000:05:00.0 enp5s0f0: Reset adapter
```

For comparison, I tried the other PCIe cards I have on hand in this riser on the new system. Some work without issue, while others behave even worse (especially the one that runs at PCIe 3.0 speeds).

- Intel PRO/1000 PT (PCIe 1.0 x1): No issues and no AER messages, tested with continuous bidirectional iperf3 traffic.
- Mellanox MNPA19-XTR ConnectX-2 EN (PCIe 2.0 x4): No AER messages while idle. I currently can't test it with a network link connected.
- LSI 2008 (RAID firmware), no disks attached (PCIe 2.0 x4): A steady stream of AER messages while idle (6-8 "correctable" errors per second, very few "multiple correctable" errors).
- LSI 3008 (RAID firmware), no disks attached (PCIe 3.0 x4): A flood of AER messages while idle (20 "correctable" and 400 "multiple correctable" errors per second). The system can't even reboot or shut down cleanly and has to be powered off forcibly.
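The `error status/mask=` words in the logs above are the raw AER Correctable Error Status and Mask registers. The helper below (hypothetical name `aer_decode_cor`) decodes a status word into the short names the Linux AER driver prints, using the bit positions defined for that register. It shows, for example, that `000030c1` on 05:00.0 additionally has bit 13 (Advisory Non-Fatal, `NonFatalErr`) set; since the mask `00002000` covers that bit, the kernel presumably leaves it out of the printed list.

```shell
#!/usr/bin/env bash
# Sketch: decode a PCIe AER Correctable Error Status word (hex, as printed in
# "error status/mask=") into the Linux AER driver's short bit names.
# This decodes the raw status; it does not apply the mask.
aer_decode_cor() {
    local status=$(( $1 )) names="" bit name
    for bit in 0 6 7 8 12 13 14 15; do
        case "$bit" in
            0)  name=RxErr ;;        # Receiver Error
            6)  name=BadTLP ;;       # Bad TLP
            7)  name=BadDLLP ;;      # Bad DLLP
            8)  name=Rollover ;;     # REPLAY_NUM Rollover
            12) name=Timeout ;;      # Replay Timer Timeout
            13) name=NonFatalErr ;;  # Advisory Non-Fatal Error
            14) name=CorrIntErr ;;   # Corrected Internal Error
            15) name=HeaderOF ;;     # Header Log Overflow
        esac
        if [ $(( (status >> bit) & 1 )) -eq 1 ]; then
            names="$names $name"
        fi
    done
    echo "${names# }"
}
```

For example, `aer_decode_cor 0x000010c1` gives the same four bits the kernel prints for 05:00.1 (RxErr, BadTLP, BadDLLP, Timeout).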