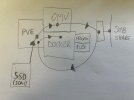

Hello, if this is off topic, could someone please point me to the right place? I run PVE 8 on my machine, with a 2TB SATA SSD that has 2 partitions I use for media storage, shared on the network with OMV. The other day one of the partitions suddenly disappeared, and in the journal log I found that it is no longer mounted.

After a bit of research (I am pretty new to Linux), I found that the EXT4 filesystem is not recognised anymore. I am looking for a way (if there is one) to repair it and avoid a lot of data loss.

Is there some other tool I can use to try to repair it? If necessary, I can detach the drive and connect it to a Windows 11 system for some attempts.

Thanks in advance for your help!

Code:

    root@pve:~# fsck /dev/sda1
    fsck from util-linux 2.38.1
    e2fsck 1.47.0 (5-Feb-2023)
    ext2fs_open2: Bad magic number in super-block
    fsck.ext4: Superblock invalid, trying backup blocks...
    fsck.ext4: Bad magic number in super-block while trying to open /dev/sda1
    The superblock could not be read or does not describe a valid ext2/ext3/ext4
    filesystem. If the device is valid and it really contains an ext2/ext3/ext4
    filesystem (and not swap or ufs or something else), then the superblock
    is corrupt, and you might try running e2fsck with an alternate superblock:
    e2fsck -b 8193 <device>
    or
    e2fsck -b 32768 <device>
    Found a gpt partition table in /dev/sda1
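For reference, the two block numbers in the e2fsck hint (8193 and 32768) are only the first backup superblock for 1 KiB and 4 KiB block sizes; further copies normally exist deeper in the filesystem. Below is a rough sketch that lists the candidate locations. It assumes the filesystem was created with mke2fs defaults (the `sparse_super` feature enabled and blocks per group equal to 8 times the block size), which may not hold for this drive. The function name `backup_superblocks` and its two arguments are made up for illustration, and the script only does arithmetic; it never touches a disk.

```shell
#!/usr/bin/env bash
# Sketch: list candidate backup superblock locations for ext2/ext3/ext4.
# ASSUMPTIONS (not confirmed for this drive): mke2fs defaults, i.e.
# sparse_super is enabled and blocks-per-group = 8 * block size.
# backup_superblocks is a hypothetical helper name, not part of e2fsprogs.

backup_superblocks() {
    local block_size=$1      # filesystem block size in bytes (1024, 2048 or 4096)
    local total_blocks=$2    # filesystem size, counted in blocks of that size
    local blocks_per_group=$(( block_size * 8 ))
    local first_data_block=0
    local total_groups group base

    # With 1 KiB blocks the first data block is 1, which shifts every copy by one.
    if (( block_size == 1024 )); then
        first_data_block=1
    fi

    # Number of block groups, rounded up.
    total_groups=$(( (total_blocks - first_data_block + blocks_per_group - 1) / blocks_per_group ))

    {
        # sparse_super keeps backups in group 1 and in groups that are
        # powers of 3, 5 and 7.
        if (( total_groups > 1 )); then
            echo 1
        fi
        for base in 3 5 7; do
            group=$base
            while (( group < total_groups )); do
                echo "$group"
                group=$(( group * base ))
            done
        done
    } | sort -n | while read -r group; do
        # Convert the group number into the block number e2fsck -b expects.
        echo $(( group * blocks_per_group + first_data_block ))
    done
}

# Optional command-line use: ./script.sh <block_size> <total_blocks>
if (( $# == 2 )); then
    backup_superblocks "$1" "$2"
fi
```

Each printed number can then be tried read-only with something like `e2fsck -n -b 98304 -B 4096 /dev/sda1`, where `-n` opens the filesystem without writing and `-B` states the assumed block size. If none of the candidates carries a valid superblock, the filesystem probably does not start where the partition does, and trying further block numbers is unlikely to help.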

Code:

    root@pve:~# e2fsck -b 32768 /dev/sda1
    e2fsck 1.47.0 (5-Feb-2023)
    e2fsck: Bad magic number in super-block while trying to open /dev/sda1
    The superblock could not be read or does not describe a valid ext2/ext3/ext4
    filesystem. If the device is valid and it really contains an ext2/ext3/ext4
    filesystem (and not swap or ufs or something else), then the superblock
    is corrupt, and you might try running e2fsck with an alternate superblock:
    e2fsck -b 8193 <device>
    or
    e2fsck -b 32768 <device>
    Found a gpt partition table in /dev/sda1