https://blogs.oracle.com/linux/post/virtioblk-using-iothread-vq-mapping

@bund69 proxmox tests:

Code:

args: -object iothread,id=iothread0 -object iothread,id=iothread1 -object iothread,id=iothread2 -object iothread,id=iothread3 -object iothread,id=iothread4 -object iothread,id=iothread5 -object iothread,id=iothread6 -object iothread,id=iothread7 -object iothread,id=iothread8 -object iothread,id=iothread9 -object iothread,id=iothread10 -object iothread,id=iothread11 -object iothread,id=iothread12 -object iothread,id=iothread13 -object iothread,id=iothread14 -object iothread,id=iothread15

-drive file=/mnt/pmem0fs/images/103/vm-103-disk-1.raw,if=none,id=drive-virtio1,aio=io_uring,format=raw,cache=none

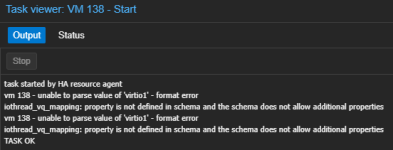

--device '{"driver":"virtio-blk-pci","iothread-vq-mapping":[{"iothread":"iothread0"},{"iothread":"iothread1"},{"iothread":"iothread2"},{"iothread":"iothread3"},{"iothread":"iothread4"},{"iothread":"iothread5"},{"iothread":"iothread6"},{"iothread":"iothread7"},{"iothread":"iothread8"},{"iothread":"iothread9"},{"iothread":"iothread10"},{"iothread":"iothread11"},{"iothread":"iothread12"},{"iothread":"iothread13"},{"iothread":"iothread14"},{"iothread":"iothread15"}],"drive":"drive-virtio1","queue-size":1024,"config-wce":false}'
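Writing out 16 `-object` entries and the matching `iothread-vq-mapping` list by hand is error-prone, so here is a minimal sketch that generates both strings for any thread count. The helper name `build_iothread_args` is hypothetical. The drive id, queue size and `config-wce` value are copied from the config above. The `-drive` line is not generated and still has to be added separately.

```python
import json


def build_iothread_args(n_threads, drive_id="drive-virtio1", queue_size=1024):
    """Return (objects, device) argument strings for n_threads iothreads.

    Hypothetical helper: it only mirrors the layout of the config quoted
    in this post and does not validate anything against QEMU.
    """
    if n_threads < 1:
        raise ValueError("need at least one iothread")

    ids = [f"iothread{i}" for i in range(n_threads)]

    # One "-object iothread,id=..." per thread, space separated.
    objects = " ".join(f"-object iothread,id={tid}" for tid in ids)

    # JSON device description with one mapping entry per iothread.
    device = {
        "driver": "virtio-blk-pci",
        "iothread-vq-mapping": [{"iothread": tid} for tid in ids],
        "drive": drive_id,
        "queue-size": queue_size,
        "config-wce": False,
    }
    device_json = json.dumps(device, separators=(",", ":"))
    return objects, f"--device '{device_json}'"


if __name__ == "__main__":
    objects, device = build_iothread_args(16)
    print("args:", objects)
    print(device)
```

With `n_threads=16` this should reproduce the `-object` list and the `--device` JSON shown above. Smaller counts (for example 4 or 8) can be tried the same way.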

Code:

~150K IOPS, 600 MB/s with 1 iothread, Fedora VM

~1000K IOPS, 4200 MB/s with 16 iothreads and iothread-vq-mapping, Fedora VM

~3300K IOPS, 12,800 MB/s on the Proxmox host

fio: bs=4k, iodepth=128, numjobs=30; 40 vCPUs

DL380 Gen10 with 2x 6230 and 2x 256GB Optane DIMMs

My own iothread-vq-mapping tests with Proxmox are pretty similar to this.

5 TIMES IOPS improvement on NVMe! Please add iothread-vq-mapping to the new Proxmox 9.0.
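As a rough sanity check on the quoted @bund69 figures, the sketch below converts IOPS to throughput and computes the ratios between the three runs. It assumes fio's `bs=4k` means 4096 bytes and that MB/s means 10^6 bytes per second. The function and variable names are mine and are not taken from any tool.

```python
BLOCK_SIZE_BYTES = 4 * 1024  # assumption: fio bs=4k is 4096 bytes

# Approximate IOPS figures as quoted in the post.
quoted_iops = {
    "vm_1_iothread": 150_000,
    "vm_16_iothreads": 1_000_000,
    "host": 3_300_000,
}


def throughput_mb_s(iops, block_size=BLOCK_SIZE_BYTES):
    """Throughput in MB/s (10^6 bytes per second) for a given IOPS figure."""
    return iops * block_size / 1e6


def ratio(a, b):
    """How many times larger a is than b."""
    return a / b


if __name__ == "__main__":
    for name, iops in quoted_iops.items():
        print(f"{name}: {iops} IOPS is about {throughput_mb_s(iops):.0f} MB/s")

    speedup = ratio(quoted_iops["vm_16_iothreads"], quoted_iops["vm_1_iothread"])
    share = ratio(quoted_iops["vm_16_iothreads"], quoted_iops["host"])
    print(f"16 iothreads vs 1 iothread: {speedup:.1f}x")
    print(f"VM with 16 iothreads reaches {share:.0%} of host IOPS")
```

The computed throughput lands close to the quoted MB/s values, which is about what one would expect from rounded IOPS numbers. On these figures the jump from 1 to 16 iothreads is well over 5x, while the VM still sits far below what the host itself reaches.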