Hi all,

I recently needed to reboot my Windows 10 VM for updates, and now I can no longer boot it. The first boot ends in a Windows blue screen ("video memory management error"). After that, on every reboot the VM hangs on the Proxmox boot splash screen.

I thought I might need to amend some lines in my QemuServer.pm script. However, from this thread I noticed that the amendments are already included in my QemuServer.pm (I am running Proxmox 8.1.4). So I am wondering what else could be the problem, or the fix.

I've included my logs of the blue screen event and of the reboot and hang. Please let me know if you see a simple fix or have any suggestions. Thanks for your time and expertise.

Best Regards,

-Jo

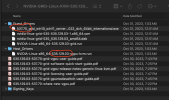

I've included the syslog inline, since the .txt version seems to butcher the readability:

```
Mar 12 16:04:26 systemd[1]: Started 103.scope.
Mar 12 16:04:27 kernel: tap103i0: entered promiscuous mode
Mar 12 16:04:27 kernel: vmbr0: port 4(fwpr103p0) entered blocking state
Mar 12 16:04:27 kernel: vmbr0: port 4(fwpr103p0) entered disabled state
Mar 12 16:04:27 kernel: fwpr103p0: entered allmulticast mode
Mar 12 16:04:27 kernel: fwpr103p0: entered promiscuous mode
Mar 12 16:04:27 kernel: vmbr0: port 4(fwpr103p0) entered blocking state
Mar 12 16:04:27 kernel: vmbr0: port 4(fwpr103p0) entered forwarding state
Mar 12 16:04:27 kernel: fwbr103i0: port 1(fwln103i0) entered blocking state
Mar 12 16:04:27 kernel: fwbr103i0: port 1(fwln103i0) entered disabled state
Mar 12 16:04:27 kernel: fwln103i0: entered allmulticast mode
Mar 12 16:04:27 kernel: fwln103i0: entered promiscuous mode
Mar 12 16:04:27 kernel: fwbr103i0: port 1(fwln103i0) entered blocking state
Mar 12 16:04:27 kernel: fwbr103i0: port 1(fwln103i0) entered forwarding state
Mar 12 16:04:27 kernel: fwbr103i0: port 2(tap103i0) entered blocking state
Mar 12 16:04:27 kernel: fwbr103i0: port 2(tap103i0) entered disabled state
Mar 12 16:04:27 kernel: tap103i0: entered allmulticast mode
Mar 12 16:04:27 kernel: fwbr103i0: port 2(tap103i0) entered blocking state
Mar 12 16:04:27 kernel: fwbr103i0: port 2(tap103i0) entered forwarding state
Mar 12 16:04:27 nvidia-vgpu-mgr[1220]: Nv0000CtrlVgpuGetStartDataParams {
    mdev_uuid: {00000000-0000-0000-0000-000000000103},
    config_params: "vgpu_type_id=65",
    qemu_pid: 10156,
    gpu_pci_id: 0x300,
    vgpu_id: 1,
    gpu_pci_bdf: 768,
}
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_env_log: vmiop-env: guest_max_gpfn:0x0
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_env_log: (0x0): Received start call from nvidia-vgpu-vfio module: mdev uuid 00000000-0000-0000-0000-000000000103 GPU PCI id 00:03:00.0 config params vgpu_type_id=65
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_env_log: (0x0): pluginconfig: vgpu_type_id=65
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_env_log: Successfully updated env symbols!
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: NvA081CtrlVgpuConfigGetVgpuTypeInfoParams {
    vgpu_type: 65,
    vgpu_type_info: NvA081CtrlVgpuInfo {
        vgpu_type: 65,
        vgpu_name: "GRID P4-4Q",
        vgpu_class: "Quadro",
        vgpu_signature: [],
        license: "Quadro-Virtual-DWS,5.0;GRID-Virtual-WS,2.0;GRID-Virtual-WS-Ext,2.0",
        max_instance: 2,
        num_heads: 4,
        max_resolution_x: 7680,
        max_resolution_y: 4320,
        max_pixels: 58982400,
        frl_config: 60,
        cuda_enabled: 1,
        ecc_supported: 1,
        gpu_instance_size: 0,
        multi_vgpu_supported: 0,
        vdev_id: 0x1bb31206,
        pdev_id: 0x1bb3,
        profile_size: 0x100000000,
        fb_length: 0xec000000,
        gsp_heap_size: 0x0,
        fb_reservation: 0x14000000,
        mappable_video_size: 0x400000,
        encoder_capacity: 0x64,
        bar1_length: 0x100,
        frl_enable: 1,
        adapter_name: "GRID P4-4Q",
        adapter_name_unicode: "GRID P4-4Q",
        short_gpu_name_string: "GP104GL-A",
        licensed_product_name: "NVIDIA RTX Virtual Workstation",
        vgpu_extra_params: "",
        ftrace_enable: 0,
        gpu_direct_supported: 0,
        nvlink_p2p_supported: 0,
        multi_vgpu_exclusive: 0,
        exclusive_type: 0,
        exclusive_size: 1,
        gpu_instance_profile_id: 4294967295,
    },
}
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: Applying profile nvidia-65 overrides
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: Patching nvidia-65/num_heads: 4 -> 1
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: Patching nvidia-65/max_resolution_x: 7680 -> 1920
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: Patching nvidia-65/max_resolution_y: 4320 -> 1080
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: Patching nvidia-65/max_pixels: 58982400 -> 2073600
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: Patching nvidia-65/cuda_enabled: 1 -> 1
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: Patching nvidia-65/fb_length: 3959422976 -> 3959422976
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: Patching nvidia-65/fb_reservation: 335544320 -> 335544320
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: Patching nvidia-65/frl_enable: 1 -> 1
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: cmd: 0xa0810115 failed.
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_log: (0x0): gpu-pci-id : 0x300
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_log: (0x0): vgpu_type : Quadro
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_log: (0x0): Framebuffer: 0xec000000
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_log: (0x0): Virtual Device Id: 0x1bb3:0x1206
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_log: (0x0): FRL Value: 60 FPS
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_log: ######## vGPU Manager Information: ########
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_log: Driver Version: 535.129.03
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_log: (0x0): Detected ECC enabled on physical GPU.
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_log: (0x0): Guest usable FB size is reduced due to ECC.
Mar 12 16:04:27 kernel: NVRM: Software scheduler timeslice set to 2083uS.
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_log: (0x0): vGPU supported range: (0x70001, 0x120001)
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_log: (0x0): Init frame copy engine: syncing...
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_log: (0x0): vGPU migration enabled
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_log: (0x0): vGPU manager is running in non-SRIOV mode.
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: notice: vmiop_log: display_init inst: 0 successful
Mar 12 16:04:27 kernel: [nvidia-vgpu-vfio] 00000000-0000-0000-0000-000000000103: vGPU migration enabled with upstream V2 migration protocol
Mar 12 16:04:27 pvedaemon[1571]: <root@pam> end task UPID:murphy:00002786:0003B495:65F0C353:qmstart:103:root@pam: OK
Mar 12 16:04:27 pvedaemon[1570]: <root@pam> starting task UPID:murphy:00002822:0003B80E:65F0C35B:vncproxy:103:root@pam:
Mar 12 16:04:27 pvedaemon[10274]: starting vnc proxy UPID:murphy:00002822:0003B80E:65F0C35B:vncproxy:103:root@pam:
Mar 12 16:04:39 nvidia-vgpu-mgr[10268]: error: vmiop_log: (0x0): RPC RINGs are not valid
Mar 12 16:04:46 pvedaemon[1570]: <root@pam> end task UPID:murphy:00002822:0003B80E:65F0C35B:vncproxy:103:root@pam: OK
Mar 12 16:06:03 nvidia-vgpu-mgr[10268]: notice: vmiop_log: (0x0): Detected ECC enabled by guest.
Mar 12 16:06:03 nvidia-vgpu-mgr[10268]: notice: vmiop_log: ######## Guest NVIDIA Driver Information: ########
Mar 12 16:06:03 nvidia-vgpu-mgr[10268]: notice: vmiop_log: Driver Version: 537.70
Mar 12 16:06:03 nvidia-vgpu-mgr[10268]: notice: vmiop_log: vGPU version: 0x120001
Mar 12 16:06:03 nvidia-vgpu-mgr[10268]: notice: vmiop_log: (0x0): vGPU license state: Unlicensed (Unrestricted)
Mar 12 16:06:33 pvedaemon[10795]: starting vnc proxy UPID:murphy:00002A2B:0003E905:65F0C3D9:vncproxy:103:root@pam:
Mar 12 16:06:33 pvedaemon[1571]: <root@pam> starting task UPID:murphy:00002A2B:0003E905:65F0C3D9:vncproxy:103:root@pam:
Mar 12 16:06:37 pvedaemon[1571]: <root@pam> end task UPID:murphy:00002A2B:0003E905:65F0C3D9:vncproxy:103:root@pam: OK
```
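To make the syslog excerpt above easier to scan, here is a small Python sketch (a hypothetical helper, not part of Proxmox or the NVIDIA tooling) that pulls out the `Patching <profile>/<field>: old -> new` lines and keeps only the overrides that actually change a value. It also collects the `error:` and `failed` lines. The regexes are written against the exact wording in this one log and may not match other driver versions. As a side check, the hex sizes in the vGPU type info agree with the decimal values being patched: `fb_length` 0xec000000 is 3959422976, and `fb_length` + `fb_reservation` (0x14000000, i.e. 335544320) equals `profile_size` 0x100000000 (4 GiB). So the framebuffer "patches" are no-ops, and the real changes are the display ones (heads, resolution, max_pixels).

```python
import re

# Matches override lines such as "Patching nvidia-65/num_heads: 4 -> 1".
# Format taken from the syslog excerpt above; other versions may differ.
PATCH_RE = re.compile(r"Patching (\S+)/(\w+): (\d+) -> (\d+)")
# Lines worth a closer look: explicit "error:" entries and failed commands.
PROBLEM_RE = re.compile(r"error:|failed")


def summarize_vgpu_log(text):
    """Return (changed_overrides, problem_lines) for a vgpu-mgr syslog excerpt.

    changed_overrides maps field name -> (old, new) and omits no-op patches
    such as "cuda_enabled: 1 -> 1".
    """
    changed = {}
    problems = []
    for line in text.splitlines():
        match = PATCH_RE.search(line)
        if match:
            _profile, field, old, new = match.groups()
            if old != new:
                changed[field] = (int(old), int(new))
        elif PROBLEM_RE.search(line):
            problems.append(line.strip())
    return changed, problems


# A few lines copied verbatim from the syslog excerpt.
SAMPLE = """\
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: Patching nvidia-65/num_heads: 4 -> 1
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: Patching nvidia-65/max_resolution_x: 7680 -> 1920
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: Patching nvidia-65/max_resolution_y: 4320 -> 1080
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: Patching nvidia-65/max_pixels: 58982400 -> 2073600
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: Patching nvidia-65/cuda_enabled: 1 -> 1
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: Patching nvidia-65/fb_length: 3959422976 -> 3959422976
Mar 12 16:04:27 nvidia-vgpu-mgr[10268]: cmd: 0xa0810115 failed.
Mar 12 16:04:39 nvidia-vgpu-mgr[10268]: error: vmiop_log: (0x0): RPC RINGs are not valid
"""

if __name__ == "__main__":
    changed, problems = summarize_vgpu_log(SAMPLE)
    for field, (old, new) in changed.items():
        print(f"{field}: {old} -> {new}")
    for line in problems:
        print("PROBLEM:", line)
    # Hex sizes from the type info match the decimal values in the patch lines.
    assert 0xEC000000 == 3959422976
    assert 0xEC000000 + 0x14000000 == 0x100000000  # fb_length + fb_reservation == profile_size
    # The patched max_pixels is exactly one 1920x1080 head.
    assert 1920 * 1080 == 2073600
```

Pasting the full excerpt into `summarize_vgpu_log` should give the same four display overrides plus the `cmd: 0xa0810115 failed.` and `RPC RINGs are not valid` lines, which are the entries most likely worth asking about.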