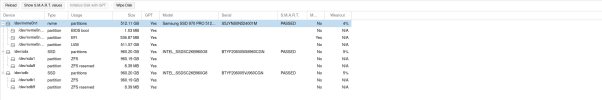

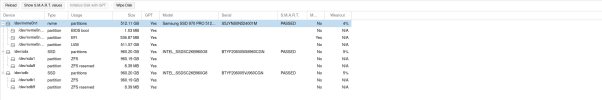

PVE packages are below.


```
proxmox-ve: 8.4.0 (running kernel: 6.8.12-9-pve)
pve-manager: 8.4.1 (running version: 8.4.1/2a5fa54a8503f96d)
proxmox-kernel-helper: 8.1.1
proxmox-kernel-6.8: 6.8.12-9
proxmox-kernel-6.8.12-9-pve-signed: 6.8.12-9
proxmox-kernel-6.8.12-8-pve-signed: 6.8.12-8
proxmox-kernel-6.5.13-6-pve-signed: 6.5.13-6
proxmox-kernel-6.5: 6.5.13-6
pve-kernel-5.4: 6.4-4
pve-kernel-5.13.19-2-pve: 5.13.19-4
pve-kernel-5.4.124-1-pve: 5.4.124-1
pve-kernel-5.4.106-1-pve: 5.4.106-1
ceph-fuse: 16.2.15+ds-0+deb12u1
corosync: 3.1.9-pve1
criu: 3.17.1-2+deb12u1
glusterfs-client: 10.3-5
ifupdown: not correctly installed
ifupdown2: 3.2.0-1+pmx11
intel-microcode: 3.20230214.1~deb11u1
ksm-control-daemon: 1.5-1
libjs-extjs: 7.0.0-5
libknet1: 1.30-pve2
libproxmox-acme-perl: 1.6.0
libproxmox-backup-qemu0: 1.5.1
libproxmox-rs-perl: 0.3.5
libpve-access-control: 8.2.2
libpve-apiclient-perl: 3.3.2
libpve-cluster-api-perl: 8.1.0
libpve-cluster-perl: 8.1.0
libpve-common-perl: 8.3.1
libpve-guest-common-perl: 5.2.2
libpve-http-server-perl: 5.2.2
libpve-network-perl: 0.11.2
libpve-rs-perl: 0.9.4
libpve-storage-perl: 8.3.6
libqb0: 1.0.5-1
libspice-server1: 0.15.1-1
lvm2: 2.03.16-2
lxc-pve: 6.0.0-1
lxcfs: 6.0.0-pve2
novnc-pve: 1.6.0-2
proxmox-backup-client: 3.4.0-1
proxmox-backup-file-restore: 3.4.0-1
proxmox-kernel-helper: 8.1.1
proxmox-mail-forward: 0.3.2
proxmox-mini-journalreader: 1.4.0
proxmox-widget-toolkit: 4.3.10
pve-cluster: 8.1.0
pve-container: 5.2.6
pve-docs: 8.4.0
pve-edk2-firmware: 4.2025.02-3
pve-firewall: 5.1.1
pve-firmware: 3.15-3
pve-ha-manager: 4.0.7
pve-i18n: 3.4.2
pve-qemu-kvm: 9.2.0-5
pve-xtermjs: 5.5.0-2
qemu-server: 8.3.12
smartmontools: 7.3-pve1
spiceterm: 3.3.0
swtpm: 0.8.0+pve1
vncterm: 1.8.0
zfsutils-linux: 2.2.7-pve2
```

I have the current backup settings configured in PVE. Note that I'm not using PBS; I'm backing up the entire VMs/LXCs.
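The package list above is a single snapshot. One way to check whether anything on the PVE host was upgraded between the fast March 31st run and the slow April 14th run is apt's transaction log. A minimal sketch, assuming the standard Debian `/var/log/apt/history.log` layout (blank-line-separated blocks with `Start-Date:` and `Upgrade:` fields); `parse_history` and `upgrades_between` are hypothetical helper names, and older entries may already have been rotated into compressed `history.log.N.gz` files.

```python
import re
from datetime import date

# One "package:arch (old, new)" entry from an Upgrade: line.
ENTRY_RE = re.compile(r"(\S+) \(([^)]*)\)")


def parse_history(text):
    """Split apt history text into one {field: value} dict per transaction."""
    transactions = []
    for block in re.split(r"\n\s*\n", text.strip()):
        fields = {}
        for line in block.splitlines():
            # Split on the first ": " only, so "pkg:amd64" stays intact.
            key, sep, value = line.partition(": ")
            if sep:
                fields[key.strip()] = value.strip()
        if "Start-Date" in fields:
            transactions.append(fields)
    return transactions


def upgrades_between(text, start, end):
    """Return (date, package, old, new) for every upgrade whose Start-Date
    falls within [start, end], inclusive."""
    changes = []
    for tx in parse_history(text):
        # Start-Date looks like "2025-04-10  09:00:00"; keep only the date part.
        tx_date = date.fromisoformat(tx["Start-Date"].split()[0])
        if not start <= tx_date <= end:
            continue
        for package, versions in ENTRY_RE.findall(tx.get("Upgrade", "")):
            old, _, new = versions.partition(", ")
            changes.append((tx_date, package, old, new))
    return changes
```

On the host, `upgrades_between(open("/var/log/apt/history.log").read(), date(2025, 3, 31), date(2025, 4, 14))` would list any upgrades in that window, including kernel or QEMU packages.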

My Proxmox VMs live on 2x Intel SSD D3-S4510 960GB datacenter SSDs in a RAID1 mirror. The backup target is a Synology with 2x WD Red Pro 22TB 7200 RPM NAS HDDs. The HDDs are CMR, not SMR, and the Synology share is mounted in Proxmox via SMB.
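To separate the SMB target from the source SSDs, a rough sketch that times a sequential write into a directory and reports MiB/s. `measure_write_throughput` and its default sizes are hypothetical choices for illustration; it uses only the Python standard library, and the mount path `/mnt/pve/backup_proxmox` is taken from the dump filenames in the logs below.

```python
import os
import tempfile
import time


def measure_write_throughput(directory, size_mib=1024, block_kib=1024):
    """Write roughly size_mib MiB into a temporary file in `directory`, fsync it,
    delete it, and return the observed write rate in MiB/s."""
    block = os.urandom(block_kib * 1024)  # one random block, written repeatedly
    blocks = (size_mib * 1024) // block_kib
    written_mib = blocks * block_kib / 1024
    fd, path = tempfile.mkstemp(dir=directory, prefix="throughput-test-")
    try:
        start = time.monotonic()
        with os.fdopen(fd, "wb") as fh:
            for _ in range(blocks):
                fh.write(block)
            fh.flush()
            # Ask the OS to push the data out before stopping the clock.
            os.fsync(fh.fileno())
        elapsed = max(time.monotonic() - start, 1e-9)
    finally:
        os.remove(path)  # always clean up the test file
    return written_mib / elapsed
```

Running `measure_write_throughput("/mnt/pve/backup_proxmox")` and comparing it with the same call against a local directory on the host gives a rough idea of whether writes to the share alone have slowed down. Treat the numbers as approximate: page cache, SMB client caching and the test size all affect the result.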

This is the backup from March 24th. Note the running time and size: only 41 minutes.

```
Details
=======
VMID Name Status Time Size Filename
102 db06 ok 25s 938.31 MiB /mnt/pve/backup_proxmox/dump/vzdump-lxc-102-2025_03_24-06_00_07.tar.zst
103 jump01 ok 18s 368.614 MiB /mnt/pve/backup_proxmox/dump/vzdump-lxc-103-2025_03_24-06_00_32.tar.zst
104 vpn01 ok 54s 3.515 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-104-2025_03_24-06_00_50.vma.zst
105 kube01 ok 7m 4s 31.751 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-105-2025_03_24-06_01_44.vma.zst
106 docker05 ok 16m 38s 105.104 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-106-2025_03_24-06_08_48.vma.zst
107 nut01 ok 47s 3.382 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-107-2025_03_24-06_25_26.vma.zst
108 backup06 ok 42s 2.484 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-108-2025_03_24-06_26_13.vma.zst
109 iac06 ok 51s 1.526 GiB /mnt/pve/backup_proxmox/dump/vzdump-lxc-109-2025_03_24-06_26_55.tar.zst
201 hass02 ok 1m 3s 3.86 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-201-2025_03_24-06_27_46.vma.zst
300 W10-LoganVM ok 9m 6s 56.977 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-300-2025_03_24-06_28_49.vma.zst
301 W11-LoganVM ok 3m 38s 20.735 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-301-2025_03_24-06_37_55.vma.zst
Total running time: 41m 26s
Total size: 230.611 GiB
```

Here's a backup from March 31st. It was also very fast, at 43 minutes.

```
Details
=======
VMID Name Status Time Size Filename
102 db06 ok 24s 937.49 MiB /mnt/pve/backup_proxmox/dump/vzdump-lxc-102-2025_03_31-06_00_00.tar.zst
103 jump01 ok 19s 369.153 MiB /mnt/pve/backup_proxmox/dump/vzdump-lxc-103-2025_03_31-06_00_24.tar.zst
104 vpn01 ok 56s 3.523 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-104-2025_03_31-06_00_43.vma.zst
105 kube01 ok 6m 52s 31.239 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-105-2025_03_31-06_01_39.vma.zst
106 docker05 ok 16m 31s 104.813 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-106-2025_03_31-06_08_31.vma.zst
107 nut01 ok 48s 3.424 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-107-2025_03_31-06_25_02.vma.zst
108 backup06 ok 3m 18s 2.512 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-108-2025_03_31-06_25_50.vma.zst
109 iac06 ok 52s 1.58 GiB /mnt/pve/backup_proxmox/dump/vzdump-lxc-109-2025_03_31-06_29_08.tar.zst
201 hass02 ok 1m 2s 3.432 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-201-2025_03_31-06_30_00.vma.zst
300 W10-LoganVM ok 9m 9s 56.923 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-300-2025_03_31-06_31_02.vma.zst
301 W11-LoganVM ok 2m 50s 17.174 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-301-2025_03_31-06_40_11.vma.zst
Total running time: 43m 1s
Total size: 225.897 GiB
```

April 7th took a little longer, at just over an hour (1h 10m 53s), and the W11-LoganVM (301) backup failed with an error after 28m 18s.

```
Details
=======
VMID Name Status Time Size Filename
102 db06 ok 25s 941.316 MiB /mnt/pve/backup_proxmox/dump/vzdump-lxc-102-2025_04_07-06_00_00.tar.zst
103 jump01 ok 20s 370.332 MiB /mnt/pve/backup_proxmox/dump/vzdump-lxc-103-2025_04_07-06_00_25.tar.zst
104 vpn01 ok 56s 3.511 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-104-2025_04_07-06_00_45.vma.zst
105 kube01 ok 6m 56s 31.425 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-105-2025_04_07-06_01_41.vma.zst
106 docker05 ok 17m 35s 104.631 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-106-2025_04_07-06_08_37.vma.zst
107 nut01 ok 49s 3.418 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-107-2025_04_07-06_26_12.vma.zst
108 backup06 ok 3m 36s 2.529 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-108-2025_04_07-06_27_01.vma.zst
109 iac06 ok 51s 1.557 GiB /mnt/pve/backup_proxmox/dump/vzdump-lxc-109-2025_04_07-06_30_37.tar.zst
201 hass02 ok 1m 1s 3.471 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-201-2025_04_07-06_31_28.vma.zst
300 W10-LoganVM ok 10m 6s 56.868 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-300-2025_04_07-06_32_29.vma.zst
301 W11-LoganVM err 28m 18s 0 B null
Total running time: 1h 10m 53s
Total size: 208.69 GiB
```

However, April 14th was very slow, at almost 6 hours.

```
Details
=======
VMID Name Status Time Size Filename
102 db06 ok 1m 41s 947.146 MiB /mnt/pve/backup_proxmox/dump/vzdump-lxc-102-2025_04_14-06_00_01.tar.zst
103 jump01 ok 51s 378.599 MiB /mnt/pve/backup_proxmox/dump/vzdump-lxc-103-2025_04_14-06_01_42.tar.zst
104 vpn01 ok 5m 35s 3.534 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-104-2025_04_14-06_02_34.vma.zst
105 kube01 ok 49m 38s 31.265 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-105-2025_04_14-06_08_09.vma.zst
106 docker05 ok 2h 39m 51s 104.259 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-106-2025_04_14-06_57_47.vma.zst
107 nut01 ok 5m 23s 3.413 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-107-2025_04_14-09_37_38.vma.zst
108 backup06 ok 4m 1s 2.529 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-108-2025_04_14-09_43_01.vma.zst
109 iac06 ok 3m 14s 1.78 GiB /mnt/pve/backup_proxmox/dump/vzdump-lxc-109-2025_04_14-09_47_02.tar.zst
201 hass02 ok 5m 42s 3.492 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-201-2025_04_14-09_50_16.vma.zst
300 W10-LoganVM ok 1h 28m 50s 57.803 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-300-2025_04_14-09_55_58.vma.zst
301 W11-LoganVM ok 26m 23s 17.174 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-301-2025_04_14-11_24_48.vma.zst
Total running time: 5h 51m 10s
Total size: 226.542 GiB
```

Last night's backup on April 21st also took almost 6 hours.

```
Details
=======
VMID Name Status Time Size Filename
102 db06 ok 1m 37s 955.047 MiB /mnt/pve/backup_proxmox/dump/vzdump-lxc-102-2025_04_21-06_00_01.tar.zst
103 jump01 ok 48s 370.447 MiB /mnt/pve/backup_proxmox/dump/vzdump-lxc-103-2025_04_21-06_01_38.tar.zst
104 vpn01 ok 5m 29s 3.487 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-104-2025_04_21-06_02_26.vma.zst
105 kube01 ok 49m 52s 31.547 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-105-2025_04_21-06_07_55.vma.zst
106 docker05 ok 2h 42m 15s 105.837 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-106-2025_04_21-06_57_47.vma.zst
107 nut01 ok 5m 27s 3.473 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-107-2025_04_21-09_40_02.vma.zst
108 backup06 ok 4m 16s 2.703 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-108-2025_04_21-09_45_29.vma.zst
109 iac06 ok 2m 58s 1.657 GiB /mnt/pve/backup_proxmox/dump/vzdump-lxc-109-2025_04_21-09_49_45.tar.zst
201 hass02 ok 5m 41s 3.523 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-201-2025_04_21-09_52_43.vma.zst
300 W10-LoganVM ok 1h 27m 51s 57.25 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-300-2025_04_21-09_58_24.vma.zst
301 W11-LoganVM ok 26m 25s 17.236 GiB /mnt/pve/backup_proxmox/dump/vzdump-qemu-301-2025_04_21-11_26_15.vma.zst
Total running time: 5h 52m 39s
Total size: 228.008 GiB
```

Nothing changed on the Synology side: I haven't updated it or changed any settings. I'm not sure where to start troubleshooting this. I don't think it's a disk issue: both SSDs show no SMART problems and only 5% wearout.
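To put numbers on the slowdown, the summaries above can be compared guest by guest. The sketch below uses hypothetical helpers (`parse_rows` and `compare_runs` are illustrative names, not part of Proxmox) and assumes the plain-text layout shown in these logs: one guest per line, whitespace-separated fields, guest names without spaces. Its "MiB/s" is the compressed archive size divided by wall-clock time, so it is an effective rate, not the raw read speed from the SSDs.

```python
# Conversion factors to MiB for the size units seen in the vzdump summaries.
UNIT_MIB = {"B": 1 / 1024**2, "KiB": 1 / 1024, "MiB": 1.0, "GiB": 1024.0, "TiB": 1024.0**2}
# Seconds per suffix in duration tokens such as "2h", "39m", "51s".
SECONDS = {"h": 3600, "m": 60, "s": 1}


def parse_rows(log_text):
    """Map guest name -> (seconds, archive size in MiB, status) for each guest row."""
    rows = {}
    for line in log_text.splitlines():
        tokens = line.split()
        # Guest rows start with a numeric VMID and have at least 7 fields;
        # the header, the "=======" rule and the "Total ..." lines are skipped.
        if len(tokens) < 7 or not tokens[0].isdigit():
            continue
        name, status = tokens[1], tokens[2]
        # Tokens between the status and the last three fields (size, unit,
        # filename) form the duration, e.g. ["2h", "39m", "51s"].
        seconds = sum(int(t[:-1]) * SECONDS[t[-1]] for t in tokens[3:-3])
        size_mib = float(tokens[-3]) * UNIT_MIB[tokens[-2]]
        rows[name] = (seconds, size_mib, status)
    return rows


def compare_runs(baseline_text, slow_text):
    """Return (name, baseline MiB/s, slow MiB/s, slowdown factor) for guests
    that finished with status "ok" in both runs."""
    base, slow = parse_rows(baseline_text), parse_rows(slow_text)
    results = []
    for name, (b_sec, b_mib, b_status) in base.items():
        if name not in slow:
            continue
        s_sec, s_mib, s_status = slow[name]
        if b_status != "ok" or s_status != "ok" or b_sec == 0 or s_sec == 0:
            continue
        # Slowdown factor is the ratio of wall-clock times.
        results.append((name, b_mib / b_sec, s_mib / s_sec, s_sec / b_sec))
    return results
```

Fed the docker05 rows from March 24th and April 14th, this gives roughly 108 MiB/s versus 11 MiB/s, a time ratio of about 9.6x. Running it over every guest shows whether the factor is similar across the board; a similar factor everywhere would suggest something shared (the SMB target, the network path or the host) rather than a single misbehaving VM.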
