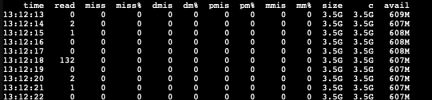

I recently set up a few LXCs on my PVE node and am getting terrible performance due to ridiculously high I/O delay (a minimum of 5-10% under little to no load, spiking to 40-70% when doing pretty much anything). My containers run on a mirrored ZFS pool of two 2 TB HDDs, and I've capped the ARC at a maximum of 6 GB of RAM. Not sure what the culprit is, but any help is appreciated.
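For reference, here is a rough sketch of how a 6 GB ARC cap translates into the `zfs_arc_max` module option. It assumes "6 GB" means 6 GiB and that the cap is applied through a modprobe options line (commonly placed in `/etc/modprobe.d/zfs.conf`). Neither detail is stated above, and the helper names are purely illustrative.

```python
# Sketch: convert an ARC cap in GiB to the byte value zfs_arc_max expects.
# Assumption: the 6 GB cap means 6 GiB (binary units).

GIB = 1024 ** 3  # bytes per GiB


def arc_max_bytes(gib: int) -> int:
    """Return the ARC cap in bytes for a size given in GiB."""
    return gib * GIB


def modprobe_line(gib: int) -> str:
    """Build the modprobe options line for the given cap (hypothetical helper)."""
    return f"options zfs zfs_arc_max={arc_max_bytes(gib)}"


if __name__ == "__main__":
    # Line one would expect to find in the modprobe config for a 6 GiB cap.
    print(modprobe_line(6))
```

Comparing that value against what the running system reports would confirm whether the cap actually took effect.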