External PBS copy

- Thread starter wernii



Hi,

Currently, this can be achieved by doing a local sync job.

- Add the path where the external hard drive will be mounted as a datastore.

- Add the PBS instance itself as a "local" remote (just use localhost, 127.0.0.1 or ::1 as the server address).

- Add a sync job to the datastore of the external hard disk, using the "fake" local remote and the source datastore you want to pull the backups from.

Either set no schedule at all and run the job manually, or, if the external drive is normally always attached, configure a sync schedule as usual.
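For readers who prefer the shell, the three steps above might be scripted roughly as follows. This is a minimal sketch, not an official recipe: it assumes a PBS release whose proxmox-backup-manager offers "datastore create", "remote create" (with --auth-id) and "sync-job create", and the names my-external-drive, store02, local-pbs and external-copy, as well as the password and fingerprint values, are hypothetical placeholders. By default it only prints the commands (DRY_RUN=1), including the password, so run it on the PBS host only.

```sh
#!/bin/sh
# Sketch: CLI version of the three GUI steps (assumes a PBS release whose
# proxmox-backup-manager has these subcommands). All names and secrets below
# are hypothetical placeholders; override them via environment variables.
set -eu

DRY_RUN="${DRY_RUN:-1}"                      # 1 = only print, 0 = execute
EXT_STORE="${EXT_STORE:-my-external-drive}"  # new datastore on the external drive
EXT_PATH="${EXT_PATH:-/the/mountpoint/of/the/drive}"
SRC_STORE="${SRC_STORE:-store02}"            # existing datastore to copy
REMOTE="${REMOTE:-local-pbs}"                # the "fake" local remote
JOB_ID="${JOB_ID:-external-copy}"            # sync job id
PBS_PASSWORD="${PBS_PASSWORD:-CHANGEME}"     # password of root@pam
PBS_FINGERPRINT="${PBS_FINGERPRINT:-CHANGEME}"

# Print the command (dry run) or execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# 1) Datastore on the mounted external drive.
run proxmox-backup-manager datastore create "$EXT_STORE" "$EXT_PATH"

# 2) This PBS instance as a remote (localhost, 127.0.0.1 or ::1).
run proxmox-backup-manager remote create "$REMOTE" \
  --host localhost --auth-id root@pam \
  --password "$PBS_PASSWORD" --fingerprint "$PBS_FINGERPRINT"

# 3) Sync job pulling SRC_STORE via the local remote into EXT_STORE.
#    No --schedule given, so the job only runs when started manually.
run proxmox-backup-manager sync-job create "$JOB_ID" \
  --remote "$REMOTE" --remote-store "$SRC_STORE" --store "$EXT_STORE"
```

Adding --schedule daily to the last command would roughly correspond to setting a schedule in the GUI; leaving it out keeps the job manual.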


Thank you for your answer. But wouldn't it be possible to do it as some form of export, e.g. via LAN to another server?

Or as an export to the cloud?

If you can mount your cloud drive (or whatever you are using) on your PBS instance, a sync job to localhost would probably be the best way.
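When the copy target is a mounted drive (external disk, cloud or network mount), it may be worth checking that the mount is really present before starting a copy from a script or cron job, so the run is skipped with a clear message when the drive is not attached. A minimal sketch, assuming a Linux host with /proc/self/mounts; the TARGET path is a hypothetical placeholder and should be given without a trailing slash.

```sh
#!/bin/sh
# Sketch: only proceed if something is actually mounted at TARGET.
# TARGET is a hypothetical placeholder; pass it without a trailing slash.
set -eu

# Succeeds if $1 appears as a mount point (2nd field) in /proc/self/mounts.
# Linux only; mount points containing spaces are escaped as \040 there
# and will not match this simple comparison.
is_mounted() {
  awk -v p="$1" '$2 == p { found = 1 } END { exit !found }' /proc/self/mounts
}

TARGET="${TARGET:-/mnt/cloud-drive}"

if is_mounted "$TARGET"; then
  echo "$TARGET is mounted, OK to start the copy"
else
  echo "$TARGET is not mounted, skipping the copy" >&2
fi
```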

How do I do that? I can't find this in the PBS documentation.

Just to clarify: I want to make a copy of what is in the datastore (in my case only VMs), as a single copy that can be taken off the company's premises.

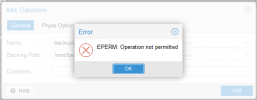

Quick rundown:

- Mount your drive at the location you want (e.g. under /mnt/); in this example we will use /the/mountpoint/of/the/drive.

- Add a Datastore and point it to the external drive:

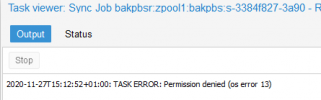

- Now add the PBS itself as a "Remote" like so:

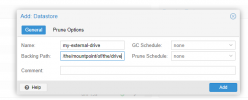

You will need to set the Userid to a user that actually exists and has rights to the datastores; as it is local, you might as well use root@pam here. Enter the fingerprint of your PBS server. The "Host" is set to localhost since we want to sync to a drive connected to the PBS itself.

- Click on your newly created datastore (here called my-external-drive), switch to "Sync Jobs" and add a sync job like this:

Make sure to enter your "real" datastore in the "Source Datastore" field; in my case that is store02. Choose a schedule that fits you, or just delete the text in "Schedule" to make it a manual task.

- Run the sync job for the first time.

That's it, you are now syncing your source datastore to a datastore on your external drive.
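Two details of the rundown can also be handled on the shell of the PBS host: looking up the fingerprint for the "Remote" dialog, and starting a one-off pull with the same source and target instead of using the GUI. This is a hedged sketch: it assumes that "proxmox-backup-manager cert info" lists the certificate fingerprint and that "pull" takes the remote, the datastore on the remote and the local datastore as positional arguments (check "proxmox-backup-manager help pull" on your version). The remote name local-pbs is hypothetical; store02 and my-external-drive are the example names from the rundown. Commands are only printed unless DRY_RUN=0.

```sh
#!/bin/sh
# Sketch: fingerprint lookup and a one-off manual pull.
# local-pbs is a hypothetical remote name; store02 / my-external-drive are
# the example datastores from the rundown. Adjust all of them.
set -eu

DRY_RUN="${DRY_RUN:-1}"   # 1 = only print, 0 = execute

# Print the command (dry run) or execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Certificate details of this PBS, including the fingerprint that goes
# into the "Fingerprint" field of the Remote dialog.
run proxmox-backup-manager cert info

# One-off pull: remote, datastore on the remote, local target datastore.
run proxmox-backup-manager pull local-pbs store02 my-external-drive
```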

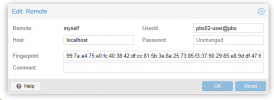

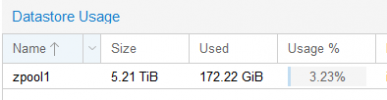

Thank you very much for the tutorial. I also have a question: does the external drive need to have the same capacity as the datastore in the photo?

It doesn't have to be the same capacity, but it should be larger than the storage space being used on your main pool.

So in your case any drive larger than 172 GiB is sufficient. The storage requirements will probably grow in the future, though, so make sure you get a large enough drive.
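The sizing rule above (target capacity larger than the space used on the source, plus room for growth) can be expressed as a small check. A minimal sketch: the 172 GiB figure comes from the post, the candidate drive sizes are made up for illustration, and the df_field helper is an assumption that relies on GNU df (as on a Debian-based PBS host) and on the datastore having its own filesystem.

```sh
#!/bin/sh
# Sketch: is a drive big enough for the data the source datastore uses?
set -eu

# Convert GiB to bytes.
gib() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}

# has_room USED_BYTES CAPACITY_BYTES: succeeds if capacity exceeds usage.
has_room() {
  [ "$2" -gt "$1" ]
}

# Optional helper (GNU df): bytes for a field (used|avail|size) of the
# filesystem holding path $1. Only meaningful if the datastore has its own
# filesystem. Not called below.
df_field() {
  df -B1 --output="$2" "$1" | tail -n 1 | tr -d ' '
}

used=$(gib 172)   # space used on the source datastore in the example
for size in 160 250 500; do   # hypothetical candidate drive sizes in GiB
  if has_room "$used" "$(gib "$size")"; then
    echo "$size GiB drive: large enough for 172 GiB of data"
  else
    echo "$size GiB drive: too small"
  fi
done
```

On a real host, something like has_room "$(df_field /path/of/store02 used)" "$(df_field /the/mountpoint/of/the/drive avail)" would compare live values; the source path there is a placeholder.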

I used these excellent instructions to sync a datastore to a shared folder on a Synology NAS, using the following command to mount the share on the PBS server:


```sh
mount -t cifs "//172.16.0.66/foobar" -o username=MYUSER,password=MYPASS,vers=2.0 /mnt/foobar
```

I then followed the rest of your instructions and it worked fine; I can see the backups in the PBS GUI when I look at the content of the datastore.

My question, however, is about what this looks like on the Synology side.

The directory I backed up to had already been in use for VZDump backups, so it contains the usual directory structure for that. When I first mounted the directory, before doing a sync, it looked like this:

```
ls
configuration_backups dump id_rsa.pub images old_deletable private scripts server_backups snippets template test.txt vztmp
```

After I did the sync, none of those directories are visible any more; instead there is a new directory structure:

```
ls -al
total 1044
drwxr-xr-x 4 backup backup 4096 Nov 16 15:06 .
drwxr-xr-x 5 root root 4096 Nov 16 14:36 ..
drwxr-x--- 1 backup backup 1056768 Nov 16 14:59 .chunks
-rw-r--r-- 1 backup backup 0 Nov 16 14:59 .lock
drwxr-xr-x 3 backup backup 4096 Nov 16 15:06 vm
```

When I look at the share in the NAS's own file browser, all the old directories and files still appear, as do ".chunks" and ".lock", but I don't see the vm directory and its contents.

This is not necessarily a problem (since it all seems to work), but it is puzzling. Can anyone explain what is going on?
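Regarding the CIFS mount command in the last post: passing password=... on the command line leaves the password in the shell history and, while the command runs, in the process list. mount.cifs also accepts a credentials=FILE option pointing to a file with username= and password= lines. A minimal sketch, assuming the share, mount point and vers=2.0 from the post; the credentials path and the SMB_USER / SMB_PASS variables are hypothetical. It only prints the mount command unless DRY_RUN=0.

```sh
#!/bin/sh
# Sketch: mount the CIFS share via a credentials file instead of password=.
# CRED_FILE, SMB_USER and SMB_PASS are hypothetical; share, mount point and
# vers=2.0 are taken from the post.
set -eu

DRY_RUN="${DRY_RUN:-1}"   # 1 = only print, 0 = write the file and mount
CRED_FILE="${CRED_FILE:-/root/.smb-foobar.cred}"

# Write the username=/password= lines to $1, readable only by its owner.
# The subshell keeps the umask change local.
write_cifs_credentials() {  # $1 = file, $2 = user, $3 = password
  (
    umask 077
    printf 'username=%s\npassword=%s\n' "$2" "$3" > "$1"
  )
}

if [ "$DRY_RUN" = "1" ]; then
  echo "would write credentials to $CRED_FILE"
  echo "mount -t cifs //172.16.0.66/foobar /mnt/foobar -o credentials=$CRED_FILE,vers=2.0"
else
  write_cifs_credentials "$CRED_FILE" "${SMB_USER:?set SMB_USER}" "${SMB_PASS:?set SMB_PASS}"
  mount -t cifs //172.16.0.66/foobar /mnt/foobar -o "credentials=$CRED_FILE,vers=2.0"
fi
```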