I have been experimenting with this for a while now (see notes under the post as well), to avoid any false positives with the whole stack:

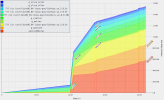

Installed:

- Debian 12 stable netinst;

- kernel 6.1.0-25-amd64;

- ext4;

- no swap;

- tasksel standard ssh-server.

Standalone pmxcfs [1] launched - this is no cluster:

- no CPG (corosync) to be posting phantom transactions;

- no other services;

- even journald is barely whizzling around.

It created /var/lib/pve-cluster/config.db* and mounted its FUSE with the rudimentary structure.

While running iotop, I wrote only twice into /etc/pve: 5K and 500K. The second write took over 1.5s, and this is an NVMe Gen3 SSD.

```
root@guinea:/etc/pve# time dd if=/dev/random bs=512 count=10 of=/etc/pve/dd.out
10+0 records in
10+0 records out
5120 bytes (5.1 kB, 5.0 KiB) copied, 0.00205373 s, 2.5 MB/s

real 0m0.010s
user 0m0.002s
sys 0m0.001s

root@guinea:/etc/pve# time dd if=/dev/random bs=512 count=1000 of=/etc/pve/dd.out
1000+0 records in
1000+0 records out
512000 bytes (512 kB, 500 KiB) copied, 1.55845 s, 329 kB/s

real 0m1.562s
user 0m0.008s
sys 0m0.013s
```

This is what iotop was showing:

```
root@guinea:~# iotop -bao
Total DISK READ: 0.00 B/s | Total DISK WRITE: 0.00 B/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 0.00 B/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
Total DISK READ: 0.00 B/s | Total DISK WRITE: 674.73 K/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 3.97 K/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
534502 be/4 root 0.00 B 56.00 K 0.00 % 0.00 % pmxcfs
534503 be/4 root 0.00 B 624.00 K 0.00 % 0.00 % pmxcfs
Total DISK READ: 0.00 B/s | Total DISK WRITE: 0.00 B/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 0.00 B/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
534502 be/4 root 0.00 B 56.00 K 0.00 % 0.00 % pmxcfs
534503 be/4 root 0.00 B 624.00 K 0.00 % 0.00 % pmxcfs
Total DISK READ: 0.00 B/s | Total DISK WRITE: 0.00 B/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 0.00 B/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
534502 be/4 root 0.00 B 56.00 K 0.00 % 0.00 % pmxcfs
534503 be/4 root 0.00 B 624.00 K 0.00 % 0.00 % pmxcfs
Total DISK READ: 0.00 B/s | Total DISK WRITE: 0.00 B/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 0.00 B/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
534502 be/4 root 0.00 B 56.00 K 0.00 % 0.00 % pmxcfs
534503 be/4 root 0.00 B 624.00 K 0.00 % 0.00 % pmxcfs
Total DISK READ: 0.00 B/s | Total DISK WRITE: 0.00 B/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 0.00 B/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
534502 be/4 root 0.00 B 56.00 K 0.00 % 0.00 % pmxcfs
534503 be/4 root 0.00 B 624.00 K 0.00 % 0.00 % pmxcfs
Total DISK READ: 0.00 B/s | Total DISK WRITE: 0.00 B/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 0.00 B/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
534502 be/4 root 0.00 B 56.00 K 0.00 % 0.00 % pmxcfs
534503 be/4 root 0.00 B 624.00 K 0.00 % 0.00 % pmxcfs
Total DISK READ: 0.00 B/s | Total DISK WRITE: 3.97 K/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 11.90 K/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
202 be/3 root 0.00 B 4.00 K 0.00 % 0.00 % [jbd2/nvme0n1p2-8]
534502 be/4 root 0.00 B 56.00 K 0.00 % 0.00 % pmxcfs
534503 be/4 root 0.00 B 624.00 K 0.00 % 0.00 % pmxcfs
Total DISK READ: 0.00 B/s | Total DISK WRITE: 0.00 B/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 0.00 B/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
202 be/3 root 0.00 B 4.00 K 0.00 % 0.00 % [jbd2/nvme0n1p2-8]
534502 be/4 root 0.00 B 56.00 K 0.00 % 0.00 % pmxcfs
534503 be/4 root 0.00 B 624.00 K 0.00 % 0.00 % pmxcfs
Total DISK READ: 0.00 B/s | Total DISK WRITE: 86.20 M/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 86.82 M/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
202 be/3 root 0.00 B 4.00 K 0.00 % 0.00 % [jbd2/nvme0n1p2-8]
534502 be/4 root 0.00 B 41.88 M 0.00 % 0.00 % pmxcfs
534503 be/4 root 0.00 B 45.69 M 0.00 % 0.00 % pmxcfs
Total DISK READ: 0.00 B/s | Total DISK WRITE: 401.75 M/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 398.56 M/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
202 be/3 root 0.00 B 4.00 K 0.00 % 0.00 % [jbd2/nvme0n1p2-8]
534502 be/4 root 0.00 B 259.89 M 0.00 % 0.00 % pmxcfs
534503 be/4 root 0.00 B 231.52 M 0.00 % 0.00 % pmxcfs
Total DISK READ: 0.00 B/s | Total DISK WRITE: 117.91 M/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 121.10 M/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
202 be/3 root 0.00 B 4.00 K 0.00 % 0.00 % [jbd2/nvme0n1p2-8]
534502 be/4 root 0.00 B 321.38 M 0.00 % 0.00 % pmxcfs
534503 be/4 root 0.00 B 288.67 M 0.00 % 0.00 % pmxcfs
Total DISK READ: 0.00 B/s | Total DISK WRITE: 0.00 B/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 0.00 B/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
202 be/3 root 0.00 B 4.00 K 0.00 % 0.00 % [jbd2/nvme0n1p2-8]
534502 be/4 root 0.00 B 321.38 M 0.00 % 0.00 % pmxcfs
534503 be/4 root 0.00 B 288.67 M 0.00 % 0.00 % pmxcfs
Total DISK READ: 0.00 B/s | Total DISK WRITE: 0.00 B/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 0.00 B/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
202 be/3 root 0.00 B 4.00 K 0.00 % 0.00 % [jbd2/nvme0n1p2-8]
534502 be/4 root 0.00 B 321.38 M 0.00 % 0.00 % pmxcfs
534503 be/4 root 0.00 B 288.67 M 0.00 % 0.00 % pmxcfs
Total DISK READ: 0.00 B/s | Total DISK WRITE: 11.90 K/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 19.84 K/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
202 be/3 root 0.00 B 16.00 K 0.00 % 0.00 % [jbd2/nvme0n1p2-8]
534502 be/4 root 0.00 B 321.38 M 0.00 % 0.00 % pmxcfs
534503 be/4 root 0.00 B 288.67 M 0.00 % 0.00 % pmxcfs
Total DISK READ: 0.00 B/s | Total DISK WRITE: 0.00 B/s
Current DISK READ: 0.00 B/s | Current DISK WRITE: 0.00 B/s
TID PRIO USER DISK READ DISK WRITE SWAPIN IO COMMAND
202 be/3 root 0.00 B 16.00 K 0.00 % 0.00 % [jbd2/nvme0n1p2-8]
534502 be/4 root 0.00 B 321.38 M 0.00 % 0.00 % pmxcfs
534503 be/4 root 0.00 B 288.67 M 0.00 % 0.00 % pmxcfs
```

I then repeated the same test but checked SMART values before:

```
Data Units Written: 85,358,591 [43.7 TB]
Host Write Commands: 1,865,050,181
```

... and after:

```
Data Units Written: 85,359,841 [43.7 TB]
Host Write Commands: 1,865,053,472
```

Yes, that is a net delta of 1,250 data units [2], i.e. 1,250 x 1000 x 512 bytes, so ~640M written onto the SSD. There is literally nothing else writing on that system.
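The conversion from the SMART counter to bytes can be double-checked with plain shell arithmetic. This is only a minimal sketch, assuming the definition quoted in [2] (one data unit = 1000 x 512 bytes); the function name `duw_delta_bytes` is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: convert a "Data Units Written" delta into bytes.
# Assumes the definition quoted in [2]: 1 unit = 1000 * 512 bytes.
duw_delta_bytes() {
    before=$1
    after=$2
    echo $(( (after - before) * 1000 * 512 ))
}

# The two SMART readings from this test (thousands separators removed).
duw_delta_bytes 85358591 85359841   # prints 640000000
```

Because the counter is reported in whole units and rounded up, the result is only accurate to within one unit, i.e. about 512 kB.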

I understand that /etc/pve is typically not meant to receive 5K, let alone 500K, file writes, but ...

... at 5K ... >100x amplified write

... at 500K ... >1000x amplified write
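For anyone who wants to redo the amplification arithmetic, here is a rough sketch. It assumes that iotop's "K" means KiB and that the SMART-measured run consisted of the same two writes (5K + 500K); the helper name `amplification` is hypothetical and it uses integer division only.

```shell
#!/bin/sh
# Hypothetical helper: integer write amplification =
# bytes that reached the disk / bytes of payload written by dd.
amplification() {
    written=$1
    payload=$2
    echo $(( written / payload ))
}

# 5K write: iotop accumulated 56K + 624K across the two pmxcfs threads
# (assumption: iotop's "K" is KiB).
amplification $(( (56 + 624) * 1024 )) 5120          # prints 136

# SMART-measured run: ~640,000,000 bytes on disk for the 5K + 500K payloads
# (assumption: the repeated test wrote exactly those two files).
amplification 640000000 $(( 5120 + 512000 ))         # prints 1237
```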

pmxcfs tested

I originally did this with pmxcfs built from source - gcc version 12.2.0 (Debian 12.2.0-14):

https://git.proxmox.com/?p=pve-cluster.git;a=blob;f=src/pmxcfs/Makefile

But it is - of course - identical (save for the symbol table) when using the "official" one from pve-cluster 8.0.7 - both tested.

bs 512 / 4k / 128k

With bs = 4K, the 500K write amounts to 80M+ written.

With bs = 128K, i.e. a 4x 128K write, about 5M gets written, so the amplification is still 10x.
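To repeat the comparison across block sizes, something along these lines could be used. It is a dry run that only prints the dd commands rather than executing them; the count of 125 for the 4K case is my assumption (chosen to match 512,000 bytes), and `dd_cmd` is a hypothetical helper.

```shell
#!/bin/sh
# Dry run: print (do not execute) the dd command for each block size.
# TARGET is the file used in the test above.
TARGET=/etc/pve/dd.out

dd_cmd() {
    bs=$1
    count=$2
    echo "dd if=/dev/random bs=$bs count=$count of=$TARGET"
}

dd_cmd 512 1000   # 512,000 bytes, as in the original run
dd_cmd 4K 125     # 512,000 bytes (assumed count, not stated in the post)
dd_cmd 128K 4     # 524,288 bytes, the "4x 128K" case
```

Running the printed commands while watching iotop, or comparing SMART values before and after, should make the per-block-size amplification comparable.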

[1] https://pve.proxmox.com/wiki/Proxmox_Cluster_File_System_(pmxcfs)

[2] Manufacturer SMART specs on this field:

Data Units Written: Contains the number of 512 byte data units the host has written to the controller; this value does not include metadata. This value is reported in thousands (i.e., a value of 1 corresponds to 1000 units of 512 bytes written) and is rounded up. When the LBA size is a value other than 512 bytes, the controller shall convert the amount of data written to 512 byte units.

For the NVM command set, logical blocks written as part of Write operations shall be included in this value. Write Uncorrectable commands shall not impact this value.
