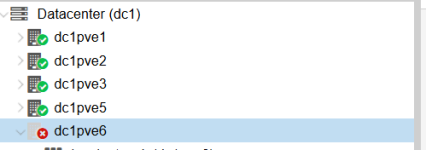

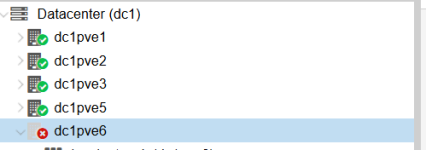

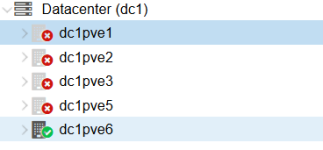

Hello, I'm having a problem adding a new node to an existing PVE cluster. After I added the node through the web interface, it shows as offline:

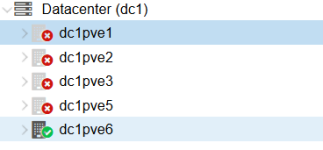

And on the new node, all the other nodes are shown as offline:

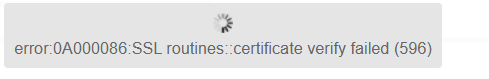

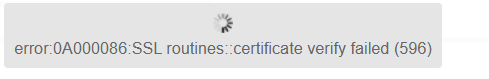

And the error I'm getting is:


I've tried to update certificates on the new node, but I'm getting an error:

```
dc1pve6# pvecm updatecerts -F
waiting for pmxcfs mount to appear and get quorate...
waiting for pmxcfs mount to appear and get quorate...
waiting for pmxcfs mount to appear and get quorate...
waiting for pmxcfs mount to appear and get quorate...
waiting for pmxcfs mount to appear and get quorate...
waiting for pmxcfs mount to appear and get quorate...
got timeout when trying to ensure cluster certificates and base file hierarchy is set up - no quorum (yet) or hung pmxcfs?
```

`pvecm status` on an existing node:

```
dc1pve6# pvecm status
Cluster information
-------------------
Name:             dc1
Config Version:   9
Transport:        knet
Secure auth:      on

Quorum information
------------------
Date:             Tue Jan 6 16:52:11 2026
Quorum provider:  corosync_votequorum
Nodes:            4
Node ID:          0x00000004
Ring ID:          1.48d
Quorate:          Yes

Votequorum information
----------------------
Expected votes:   5
Highest expected: 5
Total votes:      4
Quorum:           3
Flags:            Quorate

Membership information
----------------------
    Nodeid      Votes Name
0x00000001          1 10.0.1.2
0x00000002          1 10.0.1.1
0x00000003          1 10.0.1.3
0x00000004          1 10.0.1.5 (local)
```

`pvecm status` on the new node:

```
dc1pve6# pvecm status
Cluster information
-------------------
Name:             dc1
Config Version:   9
Transport:        knet
Secure auth:      on

Quorum information
------------------
Date:             Tue Jan 6 16:53:29 2026
Quorum provider:  corosync_votequorum
Nodes:            1
Node ID:          0x00000005
Ring ID:          5.14
Quorate:          No

Votequorum information
----------------------
Expected votes:   5
Highest expected: 5
Total votes:      1
Quorum:           3 Activity blocked
Flags:

Membership information
----------------------
    Nodeid      Votes Name
0x00000005          1 10.0.1.6 (local)
```

Any suggestions would be highly appreciated, thank you!
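For reference, this is how I read the quorum numbers in the two outputs above, assuming corosync votequorum's default strict-majority rule (no `two_node`, `wait_for_all` or similar options set): quorum = floor(expected votes / 2) + 1 = floor(5 / 2) + 1 = 3. The four existing nodes hold 4 votes, so they stay quorate, while the new node only sees its own single vote and is blocked, which matches the "no quorum (yet)" part of the `pvecm updatecerts` error. The helper names `quorum_for` and `is_quorate` below are just illustrative, not part of any Proxmox tool.

```shell
#!/bin/sh
# Sketch of votequorum's default majority rule (assumption: no special
# quorum options such as two_node or wait_for_all are configured).

# quorum_for EXPECTED_VOTES: print the number of votes needed for quorum
quorum_for() {
    echo $(( $1 / 2 + 1 ))
}

# is_quorate TOTAL_VOTES EXPECTED_VOTES: exit status 0 if quorate
is_quorate() {
    [ "$1" -ge "$(quorum_for "$2")" ]
}

expected=5   # "Expected votes" reported on both sides
# 4 = votes seen by the existing nodes, 1 = votes seen by the new node alone
for total in 4 1; do
    if is_quorate "$total" "$expected"; then
        echo "total votes $total of $expected: quorate (quorum $(quorum_for "$expected"))"
    else
        echo "total votes $total of $expected: not quorate, activity blocked"
    fi
done
```

So the new node is effectively in its own partition: it is listed in the cluster config (both sides show Config Version 9 and Expected votes 5), but it never joins the membership of the other four.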