Hello community, I have a cluster with 5 nodes (for now), and I need some clarification about hostname resolution.

Each node has 4 NICs. During setup I configured one network interface for the web UI, one for the cluster network, one for a bridge dedicated entirely to the VMs, and one dedicated to the Ceph cluster.

I should say up front that everything is working fine, although most likely only because of the 255.0.0.0 netmask. Looking at /etc/hosts, some nodes map their hostname to a 10.10.25.X address and others to a 10.10.35.X address (the cluster network), and I can't figure out where this difference comes from. The DNS service on the LAN always resolves a node name to its management UI address, but I still can't tell which hostname-to-IP mapping is the right one to set in /etc/hosts. The first 3 nodes have Ceph installed, but since Ceph has its own interfaces and network, I don't think this could lead to problems.

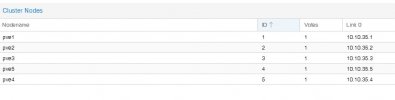

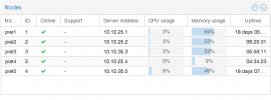

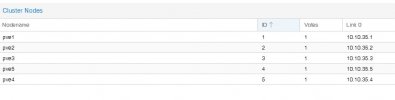

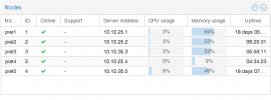

Look at the "server address" column in the screenshots above.
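Since the /etc/hosts files differ from node to node, one quick way to compare them is to classify each entry by the network its address belongs to. A minimal Python sketch, assuming the planned /24 masks for the three 10.10 networks (under /8 they are all the same network and cannot be told apart); the sample entries and the `example.local` domain are hypothetical, not copied from the real nodes.

```python
import ipaddress

# Networks from this post, narrowed to the planned /24 masks (an assumption)
# so that management, cluster and guest addresses can be told apart.
NETWORKS = {
    "management": ipaddress.ip_network("10.10.25.0/24"),
    "cluster": ipaddress.ip_network("10.10.35.0/24"),
    "guests": ipaddress.ip_network("10.10.30.0/24"),
    "ceph": ipaddress.ip_network("172.16.0.0/16"),
}


def classify_hosts(hosts_text: str) -> dict[str, str]:
    """Map each hostname in /etc/hosts-style text to the network its address is in."""
    result: dict[str, str] = {}
    for line in hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding blanks
        if not line:
            continue
        addr_text, *names = line.split()
        try:
            addr = ipaddress.ip_address(addr_text)
        except ValueError:
            continue  # skip lines that do not start with an IP address
        # IPv6 addresses never match the IPv4 networks and end up as "other".
        label = next((n for n, net in NETWORKS.items() if addr in net), "other")
        for name in names:
            result[name] = label
    return result


# Hypothetical /etc/hosts excerpt; the addresses are illustrative only.
SAMPLE = """
127.0.0.1 localhost.localdomain localhost
10.10.25.1 pve1.example.local pve1
10.10.35.2 pve2.example.local pve2
"""

if __name__ == "__main__":
    for name, label in classify_hosts(SAMPLE).items():
        print(f"{name}: {label}")
```

Run against the real /etc/hosts of each node, this would show which nodes point their own name at the management network and which at the cluster network.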

I'm also raising this because we plan to change the interfaces' netmask to 255.255.255.0, and I believe that, as things stand now, this could cause serious problems.
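One plausible reason the /8 mask keeps everything working is routing: each NIC gets a connected route, and the kernel sends traffic via the most specific (longest-prefix) match for the destination. A minimal Python sketch of that rule only, assuming pve4 has an address on each network listed in this post; the 10.10.<net>.4 and 172.16.10.4 addresses and the `nic-*` interface names are hypothetical. With /8, three NICs match a peer's cluster address equally well and the tie is settled by route metrics and order (not modelled here); with /24, each 10.10 address matches exactly one NIC, so traffic for a hostname follows whichever network that name resolves to.

```python
import ipaddress


def host_interfaces(prefix_len: int) -> dict[str, ipaddress.IPv4Interface]:
    """Hypothetical addresses for pve4, one per network listed in the post.

    The nic-* names and the .4 host parts are illustrative assumptions; only
    the 10.10.x networks get the given mask, Ceph stays on its own /16.
    """
    return {
        "nic-mgmt": ipaddress.IPv4Interface(f"10.10.25.4/{prefix_len}"),
        "nic-cluster": ipaddress.IPv4Interface(f"10.10.35.4/{prefix_len}"),
        "nic-guests": ipaddress.IPv4Interface(f"10.10.30.4/{prefix_len}"),
        "nic-ceph": ipaddress.IPv4Interface("172.16.10.4/16"),
    }


def candidate_nics(dest: str, prefix_len: int) -> list[str]:
    """Return every NIC whose connected route is a longest-prefix match for dest.

    A real kernel breaks ties between equally specific routes using metrics
    and route order; this sketch simply reports all tied candidates.
    """
    addr = ipaddress.IPv4Address(dest)
    nets = {nic: iface.network for nic, iface in host_interfaces(prefix_len).items()}
    matching = {nic: net for nic, net in nets.items() if addr in net}
    if not matching:
        return []  # no connected route; a default gateway, if any, would be used
    longest = max(net.prefixlen for net in matching.values())
    return sorted(nic for nic, net in matching.items() if net.prefixlen == longest)


if __name__ == "__main__":
    # pve2's cluster address, taken from the corosync nodelist.
    print(candidate_nics("10.10.35.2", 8))   # ['nic-cluster', 'nic-guests', 'nic-mgmt']
    print(candidate_nics("10.10.35.2", 24))  # ['nic-cluster']
```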

```
10.10.25.x/8    Management Network
10.10.35.x/8    Cluster Network
10.10.30.x/8    Guests Network
172.16.10.x/16  Ceph Network
```
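Written as CIDR, this list shows why the masks matter: with 255.0.0.0, the 10.10.25.x, 10.10.35.x and 10.10.30.x addresses all belong to the single network 10.0.0.0/8, so the three "separate" networks overlap completely, while the Ceph /16 stays apart. A small Python sketch with the standard `ipaddress` module makes the overlap explicit for the masks as listed and for the planned /24; it replaces each `x` with 0, which is an assumption that does not affect the result since only the prefix matters.

```python
import ipaddress
from itertools import combinations


def declared_networks(prefix_len: int) -> dict[str, ipaddress.IPv4Network]:
    """The networks from the post, with the 10.10.x ones using prefix_len."""
    # strict=False masks off host bits, e.g. 10.10.25.0/8 becomes 10.0.0.0/8.
    return {
        "Management": ipaddress.ip_network(f"10.10.25.0/{prefix_len}", strict=False),
        "Cluster": ipaddress.ip_network(f"10.10.35.0/{prefix_len}", strict=False),
        "Guests": ipaddress.ip_network(f"10.10.30.0/{prefix_len}", strict=False),
        "Ceph": ipaddress.ip_network("172.16.10.0/16", strict=False),
    }


def overlapping_pairs(nets: dict[str, ipaddress.IPv4Network]) -> list[tuple[str, str]]:
    """List every pair of networks whose address ranges overlap."""
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


if __name__ == "__main__":
    print(overlapping_pairs(declared_networks(8)))
    # [('Management', 'Cluster'), ('Management', 'Guests'), ('Cluster', 'Guests')]
    print(overlapping_pairs(declared_networks(24)))
    # []
```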

```
pvecm status
Cluster information
-------------------
Name:             Cluster
Config Version:   11
Transport:        knet
Secure auth:      on

Quorum information
------------------
Date:             Tue Aug 22 17:54:43 2023
Quorum provider:  corosync_votequorum
Nodes:            5
Node ID:          0x00000005
Ring ID:          1.52d
Quorate:          Yes

Votequorum information
----------------------
Expected votes:   5
Highest expected: 5
Total votes:      5
Quorum:           3
Flags:            Quorate

Membership information
----------------------
    Nodeid      Votes Name
0x00000001          1 10.10.35.1
0x00000002          1 10.10.35.2
0x00000003          1 10.10.35.3
0x00000004          1 10.10.35.5
0x00000005          1 10.10.35.4 (local)
```

```
root@pve4:~# cat /etc/pve/corosync.conf
logging {
  debug: off
  to_syslog: yes
}

nodelist {
  node {
    name: pve1
    nodeid: 1
    quorum_votes: 1
    ring0_addr: 10.10.35.1
  }
  node {
    name: pve2
    nodeid: 2
    quorum_votes: 1
    ring0_addr: 10.10.35.2
  }
  node {
    name: pve3
    nodeid: 3
    quorum_votes: 1
    ring0_addr: 10.10.35.3
  }
  node {
    name: pve4
    nodeid: 5
    quorum_votes: 1
    ring0_addr: 10.10.35.4
  }
  node {
    name: pve5
    nodeid: 4
    quorum_votes: 1
    ring0_addr: 10.10.35.5
  }
}

quorum {
  provider: corosync_votequorum
}

totem {
  cluster_name: Cluster
  config_version: 11
  interface {
    linknumber: 0
  }
  ip_version: ipv4-6
  link_mode: passive
  secauth: on
  version: 2
}
```
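In the nodelist, pve4 has nodeid 5 and pve5 has nodeid 4, which matches `pvecm status` listing 0x00000004 as 10.10.35.5 and the local node 0x00000005 as 10.10.35.4. Since every ring0_addr is a literal IP on 10.10.35.x, the corosync links themselves should not depend on how hostnames resolve, and should keep working after the switch to /24 as long as the cluster NICs keep those addresses. A minimal Python sketch for checking that, assuming node stanzas contain no nested braces (true for the file above); the regex parser is a simplification, not a full corosync.conf parser, and the sample is the pve4/pve5 part of the posted file.

```python
import ipaddress
import re

# One "node { ... }" stanza; node stanzas here hold only "key: value" lines.
NODE_RE = re.compile(r"\bnode\s*\{(.*?)\}", re.S)
# A single "key: value" line inside a stanza.
KV_RE = re.compile(r"^[ \t]*(\w+)[ \t]*:[ \t]*(\S+)[ \t]*$", re.M)


def parse_nodelist(conf_text: str) -> dict[str, dict[str, str]]:
    """Return {node name: {key: value}} for every node stanza in conf_text."""
    nodes: dict[str, dict[str, str]] = {}
    for body in NODE_RE.findall(conf_text):
        fields = dict(KV_RE.findall(body))
        nodes[fields["name"]] = fields
    return nodes


def ring0_outside(nodes: dict[str, dict[str, str]], cidr: str) -> list[str]:
    """Names of nodes whose ring0_addr is not inside the given subnet."""
    net = ipaddress.ip_network(cidr)
    return sorted(name for name, f in nodes.items()
                  if ipaddress.ip_address(f["ring0_addr"]) not in net)


# The pve4/pve5 part of the nodelist shown above.
SAMPLE = """
nodelist {
  node {
    name: pve4
    nodeid: 5
    quorum_votes: 1
    ring0_addr: 10.10.35.4
  }
  node {
    name: pve5
    nodeid: 4
    quorum_votes: 1
    ring0_addr: 10.10.35.5
  }
}
"""

if __name__ == "__main__":
    nodes = parse_nodelist(SAMPLE)
    for name, f in nodes.items():
        print(name, f["nodeid"], f["ring0_addr"])
    print(ring0_outside(nodes, "10.10.35.0/24"))  # []
```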

Thanks in advance to anyone who can give me suggestions.