Today I removed a node from the cluster at work and, to my surprise, some backup jobs started running.

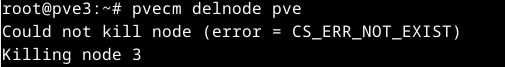

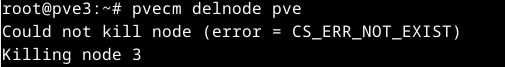

I followed the steps in the documentation and removed the node from the command line. Everything worked fine except for the "could not kill node" error, but the guide warns that this can happen and is normal.

I don't have any backup jobs scheduled anywhere near the time this happened (around 1 PM), and I don't remember this ever happening in all the years I've used PVE.

- I checked the clocks on all the nodes and they are OK.

- I checked the backups that were triggered. They were NOT jobs that started the previous night and took longer than expected; they started around the time I removed the node.

- I also checked the logs going back a couple of months, and this has never happened before. All backups ran at the time they were supposed to.

The strange thing is that not all the jobs were triggered, only those scheduled to run on the day of the node removal (whether their scheduled time was before or after the removal didn't matter). This happened today, a Thursday.

Any ideas? Is there a condition in the node removal code that could trigger all jobs scheduled for that day?