Hello,

I'm getting random errors in several LXC containers.

I think it's because I ran out of storage.

sda = 238GB SSD

sdb = 4TB HDD (nearly full, but it can be ignored; it only holds media)

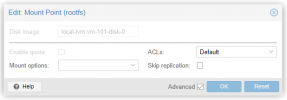

How can I prove this? Where exactly can I see whether sda is full?

And how can I reassign the data? Overall, I don't think I'm out of space. If I am, I would like to see the files that are supposed to be that large.

Code:

root@pve:~# lsblk
NAME                         MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda                            8:0    0 238.5G  0 disk
├─sda1                         8:1    0  1007K  0 part
├─sda2                         8:2    0   512M  0 part /boot/efi
└─sda3                         8:3    0   238G  0 part
  ├─pve-swap                 253:0    0     8G  0 lvm  [SWAP]
  ├─pve-root                 253:1    0  59.3G  0 lvm  /
  ├─pve-data_tmeta           253:2    0   1.6G  0 lvm
  │ └─pve-data-tpool         253:4    0 151.6G  0 lvm
  │   ├─pve-data             253:5    0 151.6G  1 lvm
  │   ├─pve-vm--102--disk--0 253:6    0    32G  0 lvm
  │   ├─pve-vm--104--disk--0 253:7    0     8G  0 lvm
  │   ├─pve-vm--105--disk--0 253:8    0     8G  0 lvm
  │   ├─pve-vm--103--disk--0 253:9    0     8G  0 lvm
  │   ├─pve-vm--101--disk--0 253:10   0   100G  0 lvm
  │   ├─pve-vm--199--disk--0 253:11   0     8G  0 lvm
  │   ├─pve-vm--106--disk--0 253:12   0    32G  0 lvm
  │   └─pve-vm--107--disk--0 253:13   0     4G  0 lvm
  └─pve-data_tdata           253:3    0 151.6G  0 lvm
    └─pve-data-tpool         253:4    0 151.6G  0 lvm
      ├─pve-data             253:5    0 151.6G  1 lvm
      ├─pve-vm--102--disk--0 253:6    0    32G  0 lvm
      ├─pve-vm--104--disk--0 253:7    0     8G  0 lvm
      ├─pve-vm--105--disk--0 253:8    0     8G  0 lvm
      ├─pve-vm--103--disk--0 253:9    0     8G  0 lvm
      ├─pve-vm--101--disk--0 253:10   0   100G  0 lvm
      ├─pve-vm--199--disk--0 253:11   0     8G  0 lvm
      ├─pve-vm--106--disk--0 253:12   0    32G  0 lvm
      └─pve-vm--107--disk--0 253:13   0     4G  0 lvm
sdb                            8:16   0   3.6T  0 disk
├─sdb1                         8:17   0    16M  0 part
└─sdb2                         8:18   0   3.6T  0 part /mnt/pve/data
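
As a rough cross-check of the lsblk output above, here is a minimal sketch that adds up the virtual sizes of the eight thin volumes and compares the total with the 151.6G size of pve-data. The variable names are only illustrative, and the sizes are copied by hand from the listing. It only shows how much space is provisioned, not how much has actually been written; the real fill level of the pool would have to come from something like `lvs`, which reports a Data% column for thin pools and is not part of the output posted here.

```shell
#!/bin/sh
# Virtual sizes (GiB) of pve-vm--102/104/105/103/101/199/106/107--disk--0,
# copied by hand from the lsblk listing (assumption: all values are in GiB).
sizes="32 8 8 8 100 8 32 4"

# Size of the pve-data thin pool as reported by lsblk.
pool_gib="151.6"

# Add up the provisioned sizes with plain integer arithmetic.
total=0
for s in $sizes; do
  total=$((total + s))
done

# The pool size is fractional, so the comparison is delegated to awk.
if awk -v t="$total" -v p="$pool_gib" 'BEGIN { exit !(t > p) }'; then
  status="overprovisioned"
else
  status="fits"
fi

echo "provisioned: ${total}G, pool: ${pool_gib}G, status: ${status}"
```

With the sizes listed above this comes to 200G provisioned against a 151.6G pool. Since pve-data is not mounted as a filesystem, it never appears in `df -h`, so the pool could be full even while / still shows free space.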

Code:

root@pve:~# df -h
Filesystem            Size  Used Avail Use% Mounted on
udev                  7.5G  8.0K  7.5G   1% /dev
tmpfs                 1.5G  1.2M  1.5G   1% /run
/dev/mapper/pve-root   59G   21G   35G  37% /
tmpfs                 7.5G   46M  7.5G   1% /dev/shm
tmpfs                 5.0M     0  5.0M   0% /run/lock
/dev/sda2             511M  328K  511M   1% /boot/efi
/dev/sdb2             3.7T  3.6T   57G  99% /mnt/pve/data
/dev/fuse             128M   20K  128M   1% /etc/pve
tmpfs                 1.5G     0  1.5G   0% /run/user/0

Thanks in advance!

fresh
