Hello,

My understanding was that in the latest version of PVE, which I'm running (a clean install of 8.0.3 that I've kept up to date), the default volblocksize for new pools is now 16k.

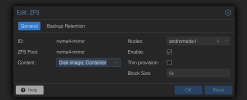

I just created an NVME mirror pool in the GUI, with default settings, and it set it up as shown below.

I'm going to enable thin provisioning, but should I also change the block size to 16k, or even 64k?
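For reference, the "Thin provision" and "Block Size" options on a ZFS storage correspond, as far as I know, to the `sparse` and `blocksize` properties of its `zfspool` entry in `/etc/pve/storage.cfg`. A minimal sketch of such an entry, assuming a hypothetical storage ID and pool name `nvme-mirror`, with 16k chosen purely for illustration rather than as a recommendation:

```
# hypothetical storage ID/pool name; blocksize 16k is illustrative only
zfspool: nvme-mirror
        pool nvme-mirror
        blocksize 16k
        content images,rootdir
        sparse 1
```

Keep in mind that volblocksize is fixed when a zvol is created, so changing this setting only affects disks created afterwards; `zfs get volblocksize <pool>/<zvol>` shows the value an existing disk actually uses.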

Someday all this won't be confusing.

Thanks!

```
# pveversion
pve-manager/8.1.4/ec5affc9e41f1d79 (running kernel: 6.5.13-1-pve)
```