Hello,

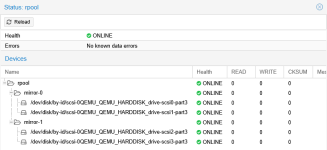

In our small office I run a standalone Proxmox VE host on an older machine from 2020: Asus P11C-M/4L mainboard, Intel Xeon E-2288G 8-core 3.7 GHz, 64 GB RAM, ZFS RAID-Z1 with 3x 4TB WD Red SA500 SSDs on SATA.

The machine runs 3 Linux servers and 3-4 Windows guests for home-office use, and it did that very well for some time. But since we changed from Win10 to Win11, we have constant IO delay in the range of 1-10 %, with peaks even higher, and the Windows guests often get slow.

I understand that the NAS SSDs on SATA with ZFS are the bottleneck, but the budget is low and better SSDs are quite expensive these days. So I thought of getting a 6- or 8-port hardware RAID controller, 2 additional smaller SSDs in RAID 1 for the system, and the three 4TB SSDs in RAID 5 for the VMs.

Do you think this change could possibly solve my IO delay issue?

As I am not much of a hardware person, I did not find a suitable RAID controller with at least 6 SATA ports. Any recommendations here?

I know that my setup is not great for our use case, but it did perfectly well with Win10 guests, so I am hoping to keep it running for a while, until hardware prices hopefully get back to normal.

Thanks for your thoughts!

Greetings, Michael
