Hello,

I'm running an Ubuntu virtual machine on a Proxmox node and have been experiencing some strange behaviour when using multiple NICs on different VLANs.

When loading a website, it takes 5-11 s to load even though the server is hosted locally and runs nginx. The delay appears to be in the TCP connection phase, though I'm not sure what that means.

Another symptom is that SSH connections take a similar 5-11 s to establish.
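To put a number on the delay independently of the browser or the SSH client, here is a minimal sketch that times only the TCP handshake. The address `192.0.2.10` is a placeholder for the server's VLAN 10 IP, and the function name `time_tcp_connect` is my own, not from any tool.

```python
import socket
import time


def time_tcp_connect(host: str, port: int, timeout: float = 15.0) -> float:
    """Return the seconds taken to complete a TCP handshake to host:port."""
    start = time.perf_counter()
    # create_connection returns once the three-way handshake has completed.
    with socket.create_connection((host, port), timeout=timeout):
        return time.perf_counter() - start


if __name__ == "__main__":
    server = "192.0.2.10"  # placeholder: replace with the server's VLAN 10 address
    for port in (22, 80):  # SSH and nginx (HTTP)
        try:
            elapsed = time_tcp_connect(server, port)
            print(f"port {port}: connected in {elapsed:.3f} s")
        except OSError as exc:
            print(f"port {port}: connect failed ({exc})")
```

If the handshake alone takes several seconds from VLAN 14 but only milliseconds from VLAN 10, that would suggest the delay is at the network layer rather than in nginx or sshd.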

Strangely, this behaviour only occurs when the client is on VLAN 14 and connects to the server's IP on VLAN 10. The issue does not occur when client and server communicate within the same VLAN. I have searched extensively and tried everything from ensuring only a single default route is set to capturing traffic with tcpdump and trying to troubleshoot asymmetric routing, but I have failed to spot anything that might be causing this, let alone resolve it.

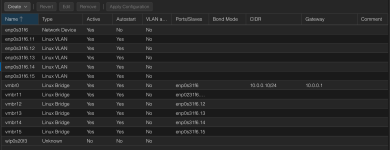

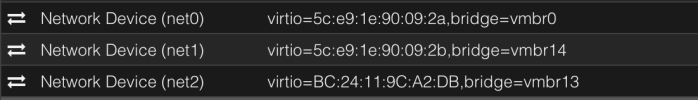

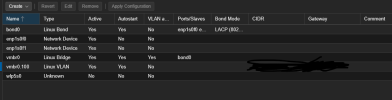

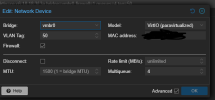

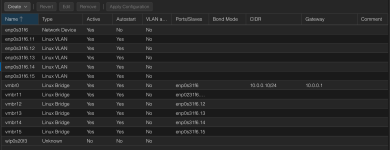

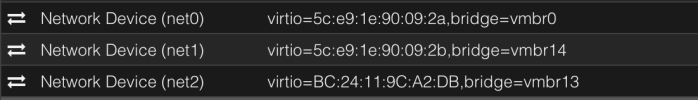

For some perspective, the virtual machine is attached to vmbr10 with bridge port VLAN 10, vmbr14 with bridge port VLAN 13, and vmbr13 with bridge port VLAN 13. I have also tried using a single vmbr with VLAN Aware enabled and setting the VLAN Tag when adding each interface to the VM.

The server's IP on VLAN 10 is accessed from all other VLANs because it is the address used in DNS records. The only purpose of attaching the other VLANs as separate interfaces is mDNS. I understand that this may be a broader Linux / networking issue rather than a Proxmox one, but if you're a smart person, please help me out with this one!

The client and server are directly connected to a MikroTik RB5009, which has all VLANs in a bridge to allow inter-VLAN routing. I doubt that this issue is related to the router, but I thought it was worth mentioning anyway.
