Hello everyone,

Thanks in advance for reading my post.

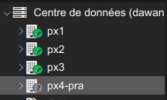

I have a cluster of 4 hosts (PVE v8.2.3):

PX1|2|3 in OVH DC (Gravelines)

PX4-PRA in OVH DC (Roubaix)

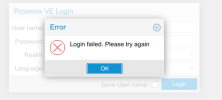

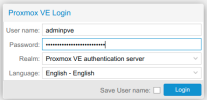

The last one is seen in the web UI as degraded:

I was looking for the problem when I saw an error in the corosync service:

[KNET ] host: host: 1 has no active links

However, after restarting the service, this message disappeared. I can successfully ping the interfaces of all hosts:

px4-pra => px1

=> px2

=> px3

Code:

$ fping -qag 192.168.199.11/24

192.168.199.11

192.168.199.12

192.168.199.13

192.168.199.14

I also restarted pveproxy to be sure, but nothing changed in the web UI.

Here are some other commands:

Code:

$ pvecm nodes

Membership information

----------------------

Nodeid Votes Name

1 1 px1

2 1 px3

4 1 px2

5 1 px4-pra (local)

Code:

$ pvecm status

Cluster information

-------------------

Name: dawan

Config Version: 12

Transport: knet

Secure auth: on

Quorum information

------------------

Date: Thu Sep 5 09:41:50 2024

Quorum provider: corosync_votequorum

Nodes: 4

Node ID: 0x00000005

Ring ID: 1.404b

Quorate: Yes

Votequorum information

----------------------

Expected votes: 4

Highest expected: 4

Total votes: 4

Quorum: 3

Flags: Quorate

Membership information

----------------------

Nodeid Votes Name

0x00000001 1 192.168.199.11

0x00000002 1 192.168.199.13

0x00000004 1 192.168.199.12

0x00000005 1 192.168.199.14 (local)

Does anyone have an idea?
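In case it helps, here is a quick sanity check of the quorum numbers from `pvecm status`. It is only a minimal sketch that assumes the usual majority rule (more than half of the expected votes). The helper name `majority_quorum` is just something I made up for illustration; it is not a Proxmox or corosync command.

```shell
#!/bin/sh
# Majority quorum under the assumed rule: floor(expected_votes / 2) + 1.
# majority_quorum is a hypothetical helper name, not a Proxmox/corosync tool.
majority_quorum() {
    echo $(( $1 / 2 + 1 ))
}

expected_votes=4   # "Expected votes" as reported by pvecm status
total_votes=4      # "Total votes" as reported by pvecm status

quorum=$(majority_quorum "$expected_votes")
echo "quorum needed: $quorum"

# The cluster is quorate when the votes present reach the majority threshold.
if [ "$total_votes" -ge "$quorum" ]; then
    echo "quorate"
else
    echo "not quorate"
fi
```

With 4 expected votes this gives a threshold of 3, which matches the `Quorum: 3` and `Quorate: Yes` lines, so the degraded state shown in the web UI does not look like a quorum problem to me.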