Hi,

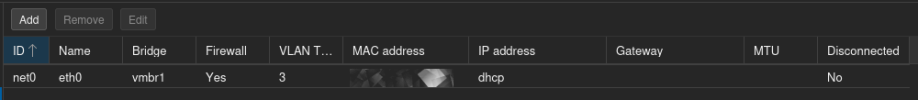

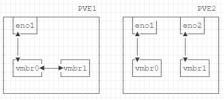

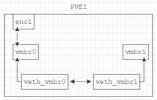

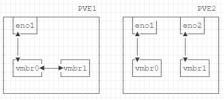

I have two nodes with different quantity of NIC's.

Let's assume PVE1 has only a single NIC eno1 which is connected to vmbr0

PVE2 has two NIC's eno1 and eno2, which are connected to vmbr0 and vmbr1.

I'd like to connect on PVE1 the vmbr1 bridge to vmbr0.

So i could run a VM on PVE2 and can easily migrate it to PVE1, without having the need to change the network bridge setting in the VM to have it boot.

vmbr1 setting would be "aliased" on the host without a second NIC and second bridge.

I was already reading and following the advice from this thread:

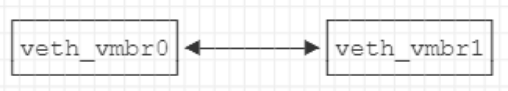

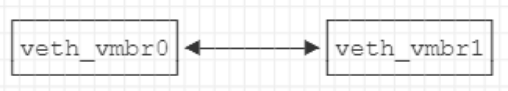

I have achieved to setup a veth-peer tunnel regarding the veth manpage : https://man7.org/linux/man-pages/man4/veth.4.html

But it's not yet connected to the bridges.

If a Linux Bridge can be considered a "virtual" Switch.

Then a veth-peer-tunnel is the equivalent of a "patch"-cable to connect those "Switches". (or CrossOver Cable so to say)

What is now the correct way to attach the veth interfaces to the bridge-ports setting in the vmbr0 and vmbr1.

May i connect on vmbr0 two devices (eno1 & veth_vmbr0) to bridge-ports? And on vmbr1 only veth_vmbr1 ?

How to achieve that correctly without breaking PVE Host Networking in the file /etc/network/interfaces ?

I have two nodes with a different number of NICs.

Let's assume PVE1 has only a single NIC, eno1, which is connected to vmbr0.

PVE2 has two NICs, eno1 and eno2, which are connected to vmbr0 and vmbr1 respectively.

On PVE1, I'd like to connect the vmbr1 bridge to vmbr0.

That way I could run a VM on PVE2 and easily migrate it to PVE1 without having to change the network bridge setting in the VM configuration to get it to boot.

The vmbr1 setting would effectively be "aliased" on the host that has no second NIC and no second physically connected bridge.

I have been reading and following the advice from this thread:

This is an example scheme.

VM3 and VM4 are connected to vmbr2, but are reachable from eno1 through vmbr1.

I can't figure out if it is possible to create the link between vmbr1 and vmbr2.

Code:

      eno1
        |
        V
      vmbr1 <----------> vmbr2
        |                  |
        V                  V
     VM1-VM2            VM3-VM4

I have managed to set up a veth peer tunnel (a veth pair), following the veth man page: https://man7.org/linux/man-pages/man4/veth.4.html

But it is not yet connected to the bridges.

Code:

    root@pve1:~# cat /etc/network/interfaces.d/veth_peer
    auto veth_vmbr0
    iface veth_vmbr0 inet manual
        link-type veth
        veth-peer-name veth_vmbr1

    auto veth_vmbr1
    iface veth_vmbr1 inet manual
        link-type veth
        veth-peer-name veth_vmbr0
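For comparison, the same pair can also be created by hand with iproute2, which is handy for experimenting before anything is written to a config file. This is only a minimal sketch: the `run` helper and the `DRY_RUN` variable are made up for illustration, and by default the script only prints the commands instead of executing them. Links created this way are not persistent across reboots.

```shell
#!/bin/sh
# Sketch: create a veth pair manually, mirroring the two stanzas above.
# run and DRY_RUN are hypothetical helpers, not part of PVE or iproute2.
# DRY_RUN defaults to 1, so commands are printed rather than executed.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# make_veth_pair END_A END_B: create both ends and bring them up
make_veth_pair() {
    run ip link add "$1" type veth peer name "$2"
    run ip link set "$1" up
    run ip link set "$2" up
}

make_veth_pair veth_vmbr0 veth_vmbr1
```

Running it with `DRY_RUN=0` as root would actually create the interfaces; it fails if they already exist, for example because the stanzas above are already active.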

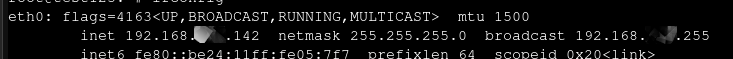

Code:

    root@pve1:~# ifconfig | grep veth_vmbr
    veth_vmbr0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
    veth_vmbr1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

    root@pve1:~# ifconfig
    ...
    veth_vmbr0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet6 fe80::8828:1dff:xxxx:4ed1 prefixlen 64 scopeid 0x20<link>
            ether 8a:28:1d:f9:xx:xx txqueuelen 1000 (Ethernet)
            RX packets 167 bytes 11786 (11.5 KiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 170 bytes 11996 (11.7 KiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    veth_vmbr1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet6 fe80::94bc:caff:xxxx:8003 prefixlen 64 scopeid 0x20<link>
            ether 96:bc:ca:91:xx:xx txqueuelen 1000 (Ethernet)
            RX packets 170 bytes 11996 (11.7 KiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 167 bytes 11786 (11.5 KiB)
    ...

    root@pve1:~# ip a show veth_vmbr0
    54: veth_vmbr0@veth_vmbr1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
        link/ether 8a:28:1d:f9:xx:xx brd ff:ff:ff:ff:ff:ff
        inet6 fe80::8828:1dff:xxxx:4ed1/64 scope link
           valid_lft forever preferred_lft forever

    root@pve1:~# ip a show veth_vmbr1
    53: veth_vmbr1@veth_vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
        link/ether 96:bc:ca:91:xx:xx brd ff:ff:ff:ff:ff:ff
        inet6 fe80::94bc:caff:xxxx:8003/64 scope link
           valid_lft forever preferred_lft forever
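The `name@peer` header lines in the `ip a` output above already show that the two ends point at each other, and the RX/TX counters mirror each other as well. To check this without reading the output by eye, the header lines can be parsed. A small sketch, assuming the output format shown above; the function name `link_partner` is made up for illustration, and the sample lines are copied from the output above.

```shell
#!/bin/sh
# Sketch: extract "<interface> <partner>" from ip link / ip addr header lines.
# link_partner is a hypothetical helper name.
# Note: for VLAN sub-interfaces the part after "@" is the parent device,
# not a veth peer, so this is only meaningful for veth links.
link_partner() {
    awk -F': ' '/^[0-9]+: / && $2 ~ /@/ { split($2, p, "@"); print p[1], p[2] }'
}

# Sample header lines taken from the output above
printf '%s\n' \
    '54: veth_vmbr0@veth_vmbr1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000' \
    '53: veth_vmbr1@veth_vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000' \
    | link_partner
```

On a live host the same function could be fed from `ip -o link show type veth | link_partner`.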

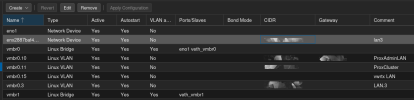

Code:

    root@pve1:~# cat /etc/network/interfaces
    # network interface settings; autogenerated
    # Please do NOT modify this file directly, unless you know what
    # you're doing.
    #
    # If you want to manage parts of the network configuration manually,
    # please utilize the 'source' or 'source-directory' directives to do
    # so.
    # PVE will preserve these directives, but will NOT read its network
    # configuration from sourced files, so do not attempt to move any of
    # the PVE managed interfaces into external files!

    source /etc/network/interfaces.d/*

    auto lo
    iface lo inet loopback

    auto eno1
    iface eno1 inet manual

    auto vmbr0
    iface vmbr0 inet manual
        bridge-ports eno1
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094
    ...

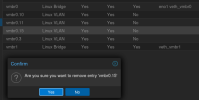

Code:

    auto vmbr1
    iface vmbr1 inet manual
        bridge-ports none
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094

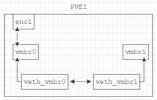

If a Linux bridge can be considered a "virtual" switch, then a veth peer tunnel is the equivalent of a patch cable connecting those switches (or a crossover cable, so to speak).

What is the correct way to attach the veth interfaces through the bridge-ports setting of vmbr0 and vmbr1?

May I add two devices (eno1 and veth_vmbr0) to bridge-ports on vmbr0, and only veth_vmbr1 on vmbr1?

How can I achieve that correctly in /etc/network/interfaces without breaking the PVE host networking?

Code:

    auto vmbr0
    iface vmbr0 inet manual
        bridge-ports eno1 veth_vmbr0
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094
    ...

    auto vmbr1
    iface vmbr1 inet manual
        bridge-ports veth_vmbr1
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094
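Before committing the bridge-ports change to /etc/network/interfaces, the same wiring could be tried at runtime with iproute2, so that a reboot undoes it if something goes wrong. This is a hedged sketch, not a confirmed PVE procedure: `run`, `DRY_RUN` and `attach_port` are names made up for illustration, and by default the commands are only printed. It assumes vmbr1 on PVE1 has no physical port, so linking it to vmbr0 does not create a loop; with bridge-stp off, a loop through two physically connected bridges would be a real risk.

```shell
#!/bin/sh
# Sketch: plug each veth end into its bridge at runtime (not persistent).
# run, DRY_RUN and attach_port are hypothetical helpers for illustration.
# DRY_RUN defaults to 1, so commands are printed rather than executed.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# attach_port PORT BRIDGE: make PORT a member of BRIDGE
attach_port() {
    run ip link set "$1" master "$2"
}

attach_port veth_vmbr0 vmbr0
attach_port veth_vmbr1 vmbr1

# List bridge ports to confirm both veth ends are enslaved
run bridge link show
```

As far as I can tell, a port attached by hand on a VLAN-aware bridge does not automatically get the bridge-vids range, so tagged traffic may not pass until the configuration is applied through ifupdown2, for example with `ifreload -a` after editing the file.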