Dear Members,

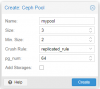

Is it possible to enable compression for RBD?

```
ceph osd pool set mypool compression_algorithm snappy
ceph osd pool set mypool compression_mode aggressive
```

This doesn't work.
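A minimal sketch for checking whether the pool settings took effect. It assumes the pool is named `mypool` and that its OSDs use BlueStore, since per-pool inline compression is a BlueStore feature. The `ceph osd pool get` calls read back the values set above, and `ceph df detail` reports per-pool `USED COMPR` / `UNDER COMPR` columns showing how much data is actually stored compressed.

```shell
#!/bin/sh
# Sketch only: assumes a pool named "mypool" backed by BlueStore OSDs.

# 1. Read back the pool-level compression settings to confirm they were applied.
ceph osd pool get mypool compression_algorithm
ceph osd pool get mypool compression_mode

# 2. Compression is applied only to data written after the settings change,
#    so existing objects are not rewritten. Write some new, compressible data
#    to an RBD image in this pool before checking (not shown here).

# 3. Look at the USED COMPR and UNDER COMPR columns for "mypool".
ceph df detail
```

If the columns stay at zero after new writes, note that BlueStore keeps a chunk compressed only when it shrinks enough (controlled by `compression_required_ratio`), so incompressible data such as already-compressed or encrypted files will not show any savings.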
