Hey everyone,

It's been over 2 years and sadly this is still an issue.

I've been using my own script for 2 years now; however, I always had some trouble with ARP and multicast. Not big issues, but issues.

Let me explain:

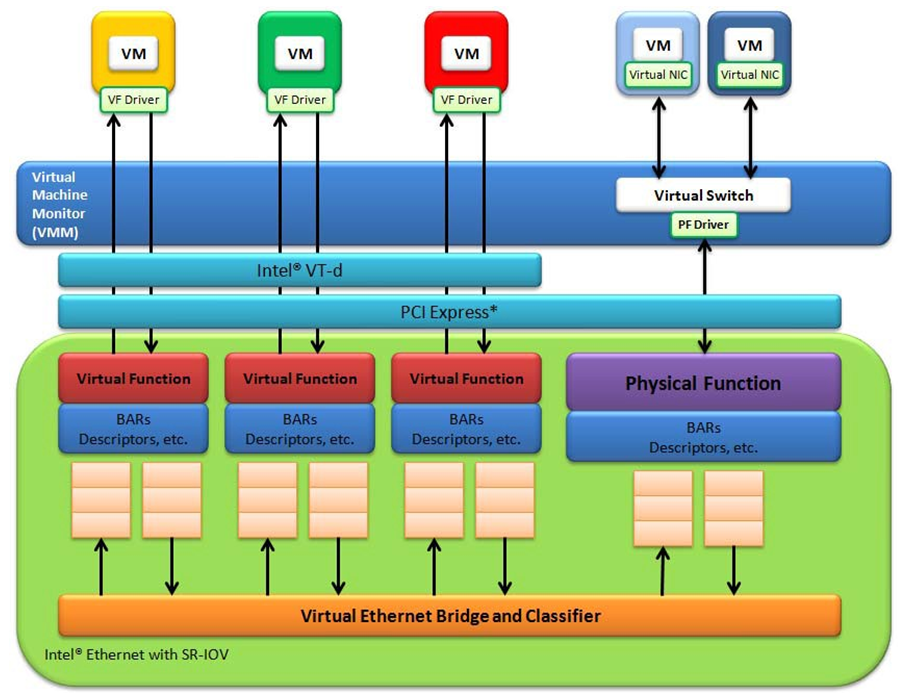

I have a bridge for containers and VMs, but a VF for OPNsense, since the VF simply has a lot more performance here.

Maybe a VF isn't important on a 1GbE network, but here it's all 10GbE and I have 5 VLANs to route between.

OPNsense is the router for all networks here.

However, the cool thing is that I need only one 10GbE port for the server itself, because the vmbridge sits on the physical interface and the virtual function of that physical interface is assigned to OPNsense. Actually a nice solution, but I abandoned this setup because of the weird behaviour I always had with OPNsense.

So after digging and testing around, I want to share some "things" I found.

Maybe that's interesting for one or another of you.

1. Avoid using

bridge-vids 2-4094; instead, use exactly the VLANs you need! Example:

bridge-vids 20 23-28

-> The issue is that the fdb table simply gets flooded with entries for all the VLANs you never need. Check for yourself with

bridge fdb show

-> I don't know if it's actually a performance concern, but I think a smaller fdb table is at least better.
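To see how much of the fdb table each VLAN takes up, something like the following could help. It's only a sketch: count_fdb_by_vlan is a made-up helper name, and it assumes that bridge fdb show prints a "vlan <id>" field on every VLAN-tagged entry (entries without one are counted as "none").

```shell
#!/bin/sh
# Count forwarding-database entries per VLAN.
# Reads `bridge fdb show` output on stdin; entries without a "vlan"
# field are counted under "none".
count_fdb_by_vlan() {
    awk '{
        v = "none"
        for (i = 1; i < NF; i++) if ($i == "vlan") v = $(i + 1)
        count[v]++
    }
    END { for (v in count) print v, count[v] }' | sort -n
}

# Usage (needs iproute2 and a VLAN-aware bridge):
#   bridge fdb show | count_fdb_by_vlan
```

Running it once with bridge-vids 2-4094 and once with only the VLANs you need should make the difference visible.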

2.

/usr/bin/ip link set enp35s0f0 vf 0 mac d0:20:55:db:fb:20 vlan 0 trust on spoofchk off

-> 1. vlan 0 = vlan filtering off

-> 2. If you see "spoofed packets detected" in your logs:

-----> either disable spoofchk

-----> or set the proper VLAN for your VM, for example:

ip link set enp35s0f0 vf 0 vlan 25

-----> or even set vlan 0, which fixes it as well; I don't know why...

-----> I simply use "vlan 0" and "spoofchk off", which worked best for me. However, if you disable VLAN filtering with "vlan 0" and you're running OPNsense, you need to disable hardware VLAN filtering in OPNsense as well. So for OPNsense I recommend not setting the vlan option at all, just spoofchk off. Then hardware VLAN filtering works. However, it would be nicer to know how to add trunk VLANs to the VF interface.

---------> I tried

ip link set enp35s0f0 vf 1 vlan 25 proto 802.1Q trunk 23-28, but that doesn't work. But you can pass through multiple VFs with individual VLANs to OPNsense, instead of one VF and creating VLANs inside OPNsense (with hw VLAN filtering).

-> 3. Windows VMs (at least W11) require

trust on. The Windows VM always changes the MAC address to something random, so you need trust mode.
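The "one VF per VLAN" idea from point 2 could be scripted roughly like this. It's a sketch, not a tested setup: vf_vlan_commands is a made-up helper, and it simply assumes that VF 0, 1, 2, ... get the VLANs in the order you list them. It only prints the commands, so you can review them before running anything.

```shell
#!/bin/sh
# Print (do not run) one "ip link set" command per VLAN,
# assigning VF 0, 1, 2, ... in the order the VLANs are given.
vf_vlan_commands() {
    pf="$1"; shift
    vf=0
    for vlan in "$@"; do
        echo "ip link set $pf vf $vf vlan $vlan"
        vf=$((vf + 1))
    done
}

# Usage: review the output first, then pipe it to sh as root:
#   vf_vlan_commands enp35s0f0 23 24 25 | sh
```

Options like spoofchk or trust are left out on purpose; add them per VF as described above.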

3. What I've switched to & issues I had:

-> 1. I was never able to get WSS working for my Samba shares if the Samba server is in a different VLAN than the Windows client. (Multicast)

-> 2. Jellyfin/Plex UPnP never worked between VLANs. Same as above (Multicast)

-> 3. OPNsense HA never worked reliably (ARP)

----- Those were actually the only issues I had. (At least that's what I thought before switching.)

> My X550 has 2x 10GbE ports, so 2 PFs.

-> I now use one PF directly for vmbr0 and pass the other PF through directly to OPNsense.

--> This has the downside that I need two 10GbE ports connected to the switch.

--> What also works perfectly well is using one PF for the vmbr and creating as many VFs as you want on the second PF!

-> That way everything starts to work; I even see my printers and gadgets and whatever else between VLANs.
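For the "VFs on the second PF" variant, the VFs can be created through the kernel's sriov_numvfs sysfs file. A minimal sketch, with some assumptions: create_vfs and SYSFS_ROOT are made-up names (SYSFS_ROOT only exists so the function can be tried against a fake directory), and enp35s0f1 is just a guess at the second PF's interface name.

```shell
#!/bin/sh
# SYSFS_ROOT is a hypothetical override for dry runs; on a real host it stays /sys.
SYSFS_ROOT="${SYSFS_ROOT:-/sys}"

# create_vfs <interface> <count>: enable <count> VFs on a PF.
# Note: this resets the VF count to 0 first, so existing VFs on that PF go away.
create_vfs() {
    dev_dir="$SYSFS_ROOT/class/net/$1/device"
    total=$(cat "$dev_dir/sriov_totalvfs") || return 1
    if [ "$2" -gt "$total" ]; then
        echo "$1 supports at most $total VFs" >&2
        return 1
    fi
    # The kernel refuses to change a non-zero VF count directly.
    echo 0 > "$dev_dir/sriov_numvfs"
    echo "$2" > "$dev_dir/sriov_numvfs"
}

# Usage (as root; enp35s0f1 is an assumed name for the second PF):
#   create_vfs enp35s0f1 4
```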

4. A weird workaround, which works without needing to edit the fdb table:

-> Set the PF to Auto:

iface enp35s0f0 inet auto, don't attach vmbr to it!

--> add

iface enp35s0f0v0 inet manual and use it for your vmbr!

-> For whatever reason, if you put the vmbr on the virtual function instead of the PF, everything can communicate with each other.

-> However, you need to unbind ixgbevf and attach vfio-pci to every function that you want to pass through. See below how to do that:

5. How to pass through only one of 2 identical devices, in the case of 2 physical functions or multiple virtual functions:

-> 1. Check the bus address of the VF or PF that you want to pass through with:

lspci -nnk | grep -A4 Eth

-> In my case it's a PF with the bus ID 0000:23:00.1 and the device ID "[8086:1563]"

-> So the service would look as follows:

-> /etc/systemd/system/sriov-Blacklist.service

Code:

[Unit]
Description=Script to Blacklist one X550 Port on boot
Before=network-pre.target
After=sysinit.target local-fs.target

[Service]
Type=oneshot
# Blacklisting Passthrough Port
ExecStart=/usr/bin/sriov-blacklist.sh

[Install]
WantedBy=multi-user.target

-> /usr/bin/sriov-blacklist.sh

Code:

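#!/usr/bin/sh
# Detach the passthrough port from its current host driver.
/usr/bin/echo "0000:23:00.1" > "/sys/bus/pci/devices/0000:23:00.1/driver/unbind"
sleep 0.1
# Register the vendor/device ID with vfio-pci so it claims the now-unbound port.
/usr/bin/echo "8086 1563" > "/sys/bus/pci/drivers/vfio-pci/new_id"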

-> For VFs you can add as many "unbind" lines as you want, just put them on separate lines before the "sleep 0.1"

-> vfio-pci automatically binds every unbound device with a matching device ID, so you need the new_id line only once, because all your VFs have the same device ID anyway.
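For several VFs, the script above could be turned into a loop. Again just a sketch under assumptions: unbind_all, register_vfio_id and SYSFS_ROOT are made-up names (SYSFS_ROOT is only there for dry runs against a fake tree), and the PCI addresses and the VF device ID in the usage lines are placeholders that you have to take from your own lspci -nn output.

```shell
#!/bin/sh
# SYSFS_ROOT is a hypothetical override for dry runs; on a real host it stays /sys.
SYSFS_ROOT="${SYSFS_ROOT:-/sys}"

# unbind_all <pci-address>...: detach each listed function from its current driver.
unbind_all() {
    for addr in "$@"; do
        unbind="$SYSFS_ROOT/bus/pci/devices/$addr/driver/unbind"
        if [ -e "$unbind" ]; then
            echo "$addr" > "$unbind"
        else
            echo "skipping $addr: no driver bound" >&2
        fi
    done
}

# register_vfio_id <vendor> <device>: let vfio-pci claim unbound devices with this ID.
register_vfio_id() {
    echo "$1 $2" > "$SYSFS_ROOT/bus/pci/drivers/vfio-pci/new_id"
}

# Usage (as root; addresses and the VF device ID are placeholders):
#   unbind_all 0000:23:10.0 0000:23:10.2
#   sleep 0.1
#   register_vfio_id 8086 <vf-device-id>
```

The ID only has to be registered once, after all the unbinds, same as in the original script.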

6. This whole Linux bridge fdb issue has nothing to do with Proxmox; it happens even with ESXi or any Linux distribution. I don't actually know why this can't be fixed somehow.

Seems like enterprises never mix VFs and Linux bridges; they use either one or the other, dunno.

However, it's sad that we can't have a better workaround.

And lastly, this isn't even an Intel issue; it actually happens with every SR-IOV capable card (Mellanox etc.) as well. So it actually needs some kind of kernel implementation.

Cheers