I'm currently running a 3-node cluster with Ceph and seeing unusably bad random read/write performance.

Each node is running 2x 22 core Xeon chips with 256GB RAM.

Ceph network is 10G, MTU 9000 - I have verified this is being used correctly.
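For reference, the usual way to check jumbo frames end to end is a ping with fragmentation prohibited and a payload sized to exactly fill the MTU. Below is a minimal sketch of that arithmetic, assuming IPv4 and the Linux iputils `ping`; the peer address `10.0.0.2` is a placeholder, not one of my nodes.

```python
IPV4_HEADER_BYTES = 20  # IPv4 header without options
ICMP_HEADER_BYTES = 8   # ICMP echo request header


def max_icmp_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in a single unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


def jumbo_ping_command(peer: str, mtu: int = 9000, count: int = 3) -> list[str]:
    """Build an iputils ping command that fails if any hop cannot carry `mtu`.

    -M do  sets the Don't Fragment bit
    -s N   sets the ICMP payload size in bytes
    -c N   sends N probes and then exits
    """
    return ["ping", "-M", "do", "-s", str(max_icmp_payload(mtu)), "-c", str(count), peer]


if __name__ == "__main__":
    # 10.0.0.2 is a hypothetical address on the Ceph network.
    print(" ".join(jumbo_ping_command("10.0.0.2")))
    # prints: ping -M do -s 8972 -c 3 10.0.0.2
```

If the probes succeed in both directions between every pair of nodes, the path is carrying 9000-byte frames without fragmentation.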

Currently running 2 SATA SSD OSDs per node; increasing to 7 OSDs per node didn't make a difference.

The SSDs are consumer grade; however, swapping in Kingston DC600M enterprise disks made no difference.

Disk-level caching is ON; disabling it just made things worse.

Increasing threads per shard made no difference.

Ceph pool configured as 3 way replication.

Guest configured with 8 cores, 16GB RAM, VirtIO SCSI single, CPU Type=Host, NUMA=ON.

Write-through and write-back caching increase write performance to acceptable levels but don't impact read performance.

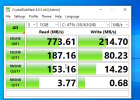

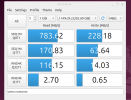

I've attached a CrystalDiskMark screenshot from a Windows 10 VM and a KDiskMark screenshot from Ubuntu.
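To make the random 4K figures easier to compare, here is a rough conversion from a queue-depth-1, single-thread MB/s result to IOPS and per-I/O latency. It is only a sketch: it assumes the tool reports decimal megabytes (10^6 bytes) and uses a 4 KiB block, and the 4.096 MB/s input is a made-up value for illustration, not a number from my screenshots.

```python
def q1t1_to_latency(mb_per_s: float, block_bytes: int = 4096) -> tuple[float, float]:
    """Convert a QD1, single-thread throughput into (IOPS, latency in ms).

    With only one I/O in flight at a time, each I/O must complete before the
    next starts, so latency is simply the reciprocal of IOPS.
    """
    if mb_per_s <= 0:
        raise ValueError("throughput must be positive")
    iops = mb_per_s * 1_000_000 / block_bytes  # assumes 1 MB = 10^6 bytes
    latency_ms = 1000.0 / iops
    return iops, latency_ms


if __name__ == "__main__":
    # Hypothetical input, chosen so the arithmetic is easy to follow.
    iops, latency_ms = q1t1_to_latency(4.096)
    print(f"{iops:.0f} IOPS, {latency_ms:.2f} ms per I/O")
```

Read this way, a low Q1T1 result is a statement about round-trip latency per I/O rather than about raw disk bandwidth.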

Is this the performance I should expect from 3 nodes? I'm looking to scale up to 4 or 5 nodes with 6-8 OSDs per node; however, if I can't get anything close to acceptable figures with 3 nodes, I won't be able to justify the cost.
