Hello

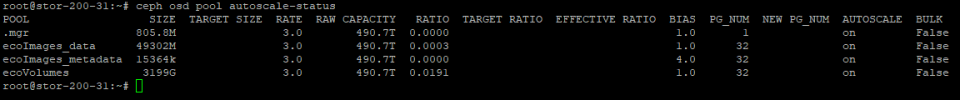

Out of the 16 PGs, let's zoom in on two of them: 8.14 and 7.1b. Since 8.14 is active+clean, why is it still listed among the 16 PGs not deep-scrubbed in time and the 16 PGs not scrubbed in time?

I have already run ceph config set global osd_deep_scrub_interval 1209600 (following https://heiterbiswolkig.blogs.nde.ag/2024/09/06/pgs-not-deep-scrubbed-in-time/), because we are using HDDs with an SSD as the WAL device (400G per OSD) and a 4x10G SFP+ bonded interface.
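For reference, the arithmetic behind that setting can be sketched in Python. This is only a sketch: the 604800-second value is the upstream default as I understand it (an assumption, not read from this cluster), the variable names are made up for illustration, and the timestamp is the one `ceph health detail` reports for pg 8.14.

```python
from datetime import datetime, timedelta

# Interval passed to `ceph config set global osd_deep_scrub_interval`, in seconds.
configured_interval = timedelta(seconds=1209600)
# Assumed upstream default (one week); not taken from this cluster.
assumed_default_interval = timedelta(seconds=604800)

# Last deep scrub of pg 8.14 as shown by `ceph health detail`.
last_deep_scrub = datetime.strptime(
    "2024-09-27T19:42:47.463766+0700", "%Y-%m-%dT%H:%M:%S.%f%z"
)

# Point in time at which one full configured interval has elapsed.
interval_elapsed_at = last_deep_scrub + configured_interval

print(configured_interval.days)
print(assumed_default_interval.days)
print(interval_elapsed_at.isoformat())
```

So 1209600 seconds is 14 days, and for pg 8.14 that interval had already elapsed on 2024-10-11.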

This is a dedicated Ceph node without any compute VMs on it.

Any tips are very much appreciated.

Some information:

root@stor-200-31:~# ceph health detail

HEALTH_WARN 16 pgs not deep-scrubbed in time; 16 pgs not scrubbed in time

[WRN] PG_NOT_DEEP_SCRUBBED: 16 pgs not deep-scrubbed in time

pg 8.14 not deep-scrubbed since 2024-09-27T19:42:47.463766+0700

pg 7.1b not deep-scrubbed since 2024-09-30T21:54:16.584697+0700

pg 8.1b not deep-scrubbed since 2024-09-29T23:22:37.812579+0700

pg 8.1d not deep-scrubbed since 2024-10-01T03:51:01.714780+0700

pg 6.13 not deep-scrubbed since 2024-09-26T07:16:31.387575+0700

pg 6.12 not deep-scrubbed since 2024-09-27T04:55:34.699188+0700

pg 8.3 not deep-scrubbed since 2024-09-29T20:57:40.973759+0700

pg 7.c not deep-scrubbed since 2024-10-01T22:57:28.955052+0700

pg 8.b not deep-scrubbed since 2024-10-01T09:15:55.223268+0700

pg 7.3 not deep-scrubbed since 2024-10-02T05:12:50.743544+0700

pg 7.18 not deep-scrubbed since 2024-09-30T17:57:34.550318+0700

pg 8.a not deep-scrubbed since 2024-09-30T10:37:06.429820+0700

pg 8.5 not deep-scrubbed since 2024-09-25T19:23:57.422280+0700

pg 8.9 not deep-scrubbed since 2024-09-30T17:57:33.493494+0700

pg 8.4 not deep-scrubbed since 2024-09-30T21:54:13.122287+0700

pg 8.10 not deep-scrubbed since 2024-10-02T20:07:42.793192+0700

[WRN] PG_NOT_SCRUBBED: 16 pgs not scrubbed in time

pg 8.14 not scrubbed since 2024-10-02T08:04:26.363903+0700

pg 7.1b not scrubbed since 2024-10-02T03:14:53.854041+0700

pg 8.1b not scrubbed since 2024-10-02T15:06:12.351794+0700

pg 8.1d not scrubbed since 2024-10-02T11:57:57.600594+0700

pg 6.13 not scrubbed since 2024-10-02T01:48:49.382133+0700

pg 6.12 not scrubbed since 2024-10-02T08:19:53.026422+0700

pg 8.3 not scrubbed since 2024-10-02T04:29:55.426251+0700

pg 7.c not scrubbed since 2024-10-01T22:57:28.955052+0700

pg 8.b not scrubbed since 2024-10-02T09:36:03.568661+0700

pg 7.3 not scrubbed since 2024-10-02T05:12:50.743544+0700

pg 7.18 not scrubbed since 2024-10-01T22:17:53.504919+0700

pg 8.a not scrubbed since 2024-10-01T19:42:53.953996+0700

pg 8.5 not scrubbed since 2024-10-02T10:04:33.412894+0700

pg 8.9 not scrubbed since 2024-10-01T20:22:40.181880+0700

pg 8.4 not scrubbed since 2024-10-01T21:56:08.596293+0700

pg 8.10 not scrubbed since 2024-10-02T20:07:42.793192+0700

root@stor-200-31:~# ceph pg dump

version 6071487

stamp 2024-11-10T07:14:57.114229+0700

last_osdmap_epoch 0

last_pg_scan 0

PG_STAT OBJECTS MISSING_ON_PRIMARY DEGRADED MISPLACED UNFOUND BYTES OMAP_BYTES* OMAP_KEYS* LOG LOG_DUPS DISK_LOG STATE STATE_STAMP VERSION REPORTED UP UP_PRIMARY ACTING ACTING_PRIMARY LAST_SCRUB SCRUB_STAMP LAST_DEEP_SCRUB DEEP_SCRUB_STAMP SNAPTRIMQ_LEN LAST_SCRUB_DURATION SCRUB_SCHEDULING OBJECTS_SCRUBBED OBJECTS_TRIMMED

8.14 27860 0 0 0 0 111874754688 304 32 10086 0 10086 active+clean 2024-11-10T07:14:50.216826+0700 17929'332796369 17929:392978053 [13,56,34] 13 [13,56,34] 13 15754'216006287 2024-10-02T08:04:26.363903+0700 15500'202479289 2024-09-27T19:42:47.463766+0700 0 25 queued for deep scrub 26167 113

7.1b 1 0 0 0 0 4194304 0 0 1234 0 1234 active+clean+scrubbing+deep 2024-11-10T07:14:23.378798+0700 13029'1234 17929:1224489 [47,56,31] 47 [47,56,31] 47 13029'1234 2024-10-02T03:14:53.854041+0700 13029'1234 2024-09-30T21:54:16.584697+0700 0 1 queued for deep scrub 1 0
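To compare the two rows side by side, here is a minimal Python sketch. The column positions are assumed from the header line of the `ceph pg dump` output above, and `parse_row`, `rows` and `summary` are hypothetical names used only for this illustration.

```python
from datetime import datetime

STAMP_FORMAT = "%Y-%m-%dT%H:%M:%S.%f%z"

# `stamp` line and the two PG rows, copied from the `ceph pg dump` output above.
dump_stamp = datetime.strptime("2024-11-10T07:14:57.114229+0700", STAMP_FORMAT)
rows = [
    "8.14 27860 0 0 0 0 111874754688 304 32 10086 0 10086 active+clean 2024-11-10T07:14:50.216826+0700 17929'332796369 17929:392978053 [13,56,34] 13 [13,56,34] 13 15754'216006287 2024-10-02T08:04:26.363903+0700 15500'202479289 2024-09-27T19:42:47.463766+0700 0 25 queued for deep scrub 26167 113",
    "7.1b 1 0 0 0 0 4194304 0 0 1234 0 1234 active+clean+scrubbing+deep 2024-11-10T07:14:23.378798+0700 13029'1234 17929:1224489 [47,56,31] 47 [47,56,31] 47 13029'1234 2024-10-02T03:14:53.854041+0700 13029'1234 2024-09-30T21:54:16.584697+0700 0 1 queued for deep scrub 1 0",
]


def parse_row(row):
    """Pick a few columns out of one whitespace-separated `ceph pg dump` row."""
    fields = row.split()
    return {
        "pgid": fields[0],               # PG_STAT
        "state": fields[12],             # STATE
        "deep_scrub_stamp": datetime.strptime(fields[23], STAMP_FORMAT),
        # SCRUB_SCHEDULING contains spaces, so take everything between
        # LAST_SCRUB_DURATION and the last two columns.
        "scrub_scheduling": " ".join(fields[26:-2]),
    }


summary = {}
for row in rows:
    pg = parse_row(row)
    age = dump_stamp - pg["deep_scrub_stamp"]
    summary[pg["pgid"]] = (pg["state"], age.days, pg["scrub_scheduling"])
    print(pg["pgid"], pg["state"], f"{age.days} days since deep scrub", pg["scrub_scheduling"])
```

Measured against the dump stamp, both PGs are well past the 1209600-second (14-day) interval, whatever their STATE column says.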

root@stor-200-31:~# ceph -s

cluster:

id: cc41de92-1edd-4f12-9803-884cdcea0c13

health: HEALTH_WARN

16 pgs not deep-scrubbed in time

16 pgs not scrubbed in time

services:

mon: 3 daemons, quorum stor-200-31,stor-200-30,stor-200-29 (age 3M)

mgr: stor-200-29(active, since 4M), standbys: stor-200-30, stor-200-31

mds: 1/1 daemons up, 2 standby

osd: 64 osds: 64 up (since 7d), 64 in (since 7d)

data:

volumes: 1/1 healthy

pools: 4 pools, 97 pgs

objects: 910.68k objects, 3.3 TiB

usage: 34 TiB used, 456 TiB / 491 TiB avail

pgs: 92 active+clean

5 active+clean+scrubbing+deep

io:

client: 17 KiB/s rd, 11 MiB/s wr, 6 op/s rd, 1.39k op/s wr

installed software:

proxmox-ve: 8.2.0 (running kernel: 6.8.8-1-pve)

pve-manager: 8.2.4 (running version: 8.2.4/faa83925c9641325)

proxmox-kernel-helper: 8.1.0

proxmox-kernel-6.8: 6.8.8-1

proxmox-kernel-6.8.8-1-pve-signed: 6.8.8-1

proxmox-kernel-6.8.4-3-pve-signed: 6.8.4-3

proxmox-kernel-6.8.4-2-pve-signed: 6.8.4-2

ceph-fuse: 18.2.2-pve1

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx8

ksm-control-daemon: 1.5-1

libjs-extjs: 7.0.0-4

libknet1: 1.28-pve1

libproxmox-acme-perl: 1.5.1

libproxmox-backup-qemu0: 1.4.1

libproxmox-rs-perl: 0.3.3

libpve-access-control: 8.1.4

libpve-apiclient-perl: 3.3.2

libpve-cluster-api-perl: 8.0.7

libpve-cluster-perl: 8.0.7

libpve-common-perl: 8.2.1

libpve-guest-common-perl: 5.1.3

libpve-http-server-perl: 5.1.0

libpve-network-perl: 0.9.8

libpve-rs-perl: 0.8.9

libpve-storage-perl: 8.2.2

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 6.0.0-1

lxcfs: 6.0.0-pve2

novnc-pve: 1.4.0-3

proxmox-backup-client: 3.2.4-1

proxmox-backup-file-restore: 3.2.4-1

proxmox-firewall: 0.4.2

proxmox-kernel-helper: 8.1.0

proxmox-mail-forward: 0.2.3

proxmox-mini-journalreader: 1.4.0

proxmox-offline-mirror-helper: 0.6.6

proxmox-widget-toolkit: 4.2.3

pve-cluster: 8.0.7

pve-container: 5.1.12

pve-docs: 8.2.2

pve-edk2-firmware: 4.2023.08-4

pve-esxi-import-tools: 0.7.1

pve-firewall: 5.0.7

pve-firmware: 3.12-1

pve-ha-manager: 4.0.5

pve-i18n: 3.2.2

pve-qemu-kvm: 8.1.5-6

pve-xtermjs: 5.3.0-3

qemu-server: 8.2.1

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.2.4-pve1
