Ceph RBD Storage Shrinking Over Time – From 10TB Down to 8.59TB

- Thread starter jorcrox


I have a cluster of three Proxmox servers connected via Ceph. From the beginning, the effective storage was 10TB, but over time it has decreased to 8.59TB, and I don’t know why. The storage type is RBD. Why is my Ceph RBD storage shrinking, and how can I reclaim the lost space?


Could you share with us your OSD config, CRUSH map, and the pools configured in Ceph?

Please post the output of `ceph df`.

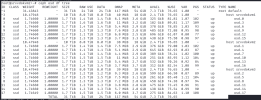

This is the config, the CRUSH map, and the pool configuration.


Why have you set a max size of 10 and a min size of 1?

I only see 3 nodes, so the max size should be 3 and the min size should be 2.

Code:

# rules

rule replicated_rule {

id 0

type replicated

min_size 1

max_size 10

step take default

step chooseleaf firstn 0 type host

step emit

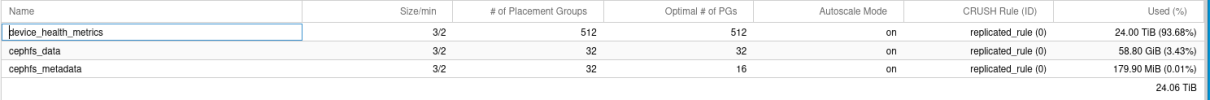

}Why are there 8TB data stored in device_health_metrics?

Do you store RBD data in this pool? You should create a separate pool for RBD data.

https://pve.proxmox.com/wiki/Ceph_P...ages_are_stored_on_pool_device_health_metrics
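To see how much data actually sits in `device_health_metrics`, you can read the JSON form of `ceph df` (`ceph df -f json`). This is a hedged sketch, not a Proxmox or Ceph tool: the sample document is hypothetical and trimmed to the `pools[].name` and `pools[].stats.stored` fields we assume are present, the second pool name is made up, and the 1 GiB threshold is an arbitrary guess based on the pool normally holding only small device-health records.

```python
import json

# Hypothetical sample, trimmed to the fields used below; the shape follows
# `ceph df -f json`, but the numbers and the second pool name are made up.
SAMPLE_CEPH_DF = """
{
  "pools": [
    {"name": "device_health_metrics", "id": 1, "stats": {"stored": 8000000000000}},
    {"name": "example_other_pool", "id": 2, "stats": {"stored": 0}}
  ]
}
"""

HEALTH_POOL = "device_health_metrics"
# Arbitrary threshold (assumption): device-health records are normally tiny,
# so anything above 1 GiB suggests disk images are stored in this pool.
SUSPICIOUS_BYTES = 1024 ** 3


def suspicious_health_pool(ceph_df_json: str, threshold: int = SUSPICIOUS_BYTES):
    """Return the bytes stored in device_health_metrics if they exceed
    `threshold`, else None (also None if the pool does not exist)."""
    data = json.loads(ceph_df_json)
    for pool in data.get("pools", []):
        if pool["name"] == HEALTH_POOL:
            stored = pool["stats"]["stored"]
            return stored if stored > threshold else None
    return None


if __name__ == "__main__":
    stored = suspicious_health_pool(SAMPLE_CEPH_DF)
    if stored is not None:
        print(f"{HEALTH_POOL} stores {stored / 1e12:.2f} TB; it should hold almost nothing")
```

On a real node you would save `ceph df -f json` to a file and pass its contents instead of the sample.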


This is for the rule; it is not the size of the pool.
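In these older Ceph versions, the `min_size`/`max_size` lines of a CRUSH rule only limit which pool sizes may use that rule; the replica counts that matter are each pool's own `size` and `min_size`. A hedged sketch for checking those, assuming the output of `ceph osd pool ls detail -f json` is a list of pool objects carrying `pool_name`, `size` and `min_size` (verify against your own output). The size=3 / min_size=2 target for three hosts is the recommendation made earlier in this thread; the sample data and `example_pool` are hypothetical.

```python
import json

# Hypothetical sample shaped like `ceph osd pool ls detail -f json` (trimmed).
SAMPLE_POOLS = """
[
  {"pool_name": "device_health_metrics", "size": 3, "min_size": 2},
  {"pool_name": "example_pool", "size": 2, "min_size": 1}
]
"""


def check_pool(pool: dict, hosts: int) -> list:
    """Compare one pool against the size=3 / min_size=2 guideline for a cluster
    whose CRUSH rule puts each replica on a different host."""
    issues = []
    if pool["size"] > hosts:
        issues.append(f"size {pool['size']} exceeds {hosts} hosts; extra replicas cannot be placed")
    if pool["size"] < 3:
        issues.append(f"size {pool['size']}: fewer than 3 replicas")
    if pool["min_size"] < 2:
        issues.append(f"min_size {pool['min_size']}: I/O continues with a single copy")
    return issues


def check_pools(pools_json: str, hosts: int = 3) -> dict:
    """Map each pool name to its list of issues (empty list = looks fine)."""
    return {p["pool_name"]: check_pool(p, hosts) for p in json.loads(pools_json)}


if __name__ == "__main__":
    for name, issues in check_pools(SAMPLE_POOLS).items():
        print(name, "OK" if not issues else "; ".join(issues))
```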

An external company configured our server, and I don’t fully understand the settings they applied. Now I am facing a major issue because the storage is almost full, and I don’t know how to fix it. I need guidance on how to resolve this problem. Is there any way to address it?

Have you tried contacting the company that installed the servers for you?

I am now in charge of our server, and I am looking for a solution to this issue. However, I don’t know how to fix it. Any guidance would be greatly appreciated.

Since the "device_health_metrics" pool is present, you must be on older versions of Ceph & Proxmox VE.

Before you upgrade, create a new pool and move all the VM disks to it. If you check the configuration of the "CephPool01" storage in Datacenter -> Storage, it will most likely show that the "device_health_metrics" pool is used.
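The same information is stored in `/etc/pve/storage.cfg`, where an RBD storage entry names its Ceph pool on a `pool` line. A minimal sketch, assuming the usual layout of that file (an unindented `type: id` header followed by indented `key value` lines) and that an RBD entry without a `pool` line falls back to the default pool `rbd`. The helper names are hypothetical, and the sample text is made up apart from the `CephPool01` and `device_health_metrics` names from this thread.

```python
SAMPLE_STORAGE_CFG = """\
dir: local
\tpath /var/lib/vz
\tcontent iso,vztmpl,backup

rbd: CephPool01
\tcontent images,rootdir
\tkrbd 0
\tpool device_health_metrics
"""


def parse_storage_cfg(text: str) -> dict:
    """Parse storage.cfg into {storage_id: {"type": ..., "options": {...}}}."""
    storages = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if not raw[0].isspace():
            # Unindented header line, e.g. "rbd: CephPool01"
            stype, _, sid = line.partition(":")
            current = {"type": stype.strip(), "options": {}}
            storages[sid.strip()] = current
        elif current is not None:
            # Indented property line, e.g. "pool device_health_metrics"
            key, _, value = line.partition(" ")
            current["options"][key] = value.strip()
    return storages


def rbd_storages_on_pool(storages: dict, pool: str) -> list:
    """Return the IDs of RBD storages that point at `pool` ("rbd" if unset)."""
    return [
        sid for sid, s in storages.items()
        if s["type"] == "rbd" and s["options"].get("pool", "rbd") == pool
    ]


if __name__ == "__main__":
    cfg = parse_storage_cfg(SAMPLE_STORAGE_CFG)  # or open("/etc/pve/storage.cfg").read()
    print(rbd_storages_on_pool(cfg, "device_health_metrics"))
```

The script only identifies the affected storage; creating the new pool and moving the disks is still done through Proxmox VE itself.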

It was possible to use it for disk image storage because older versions of Proxmox VE lacked sufficient safety checks.

Only once you have moved all disks to the new pool should you consider upgrading.

The reason is that in newer Ceph versions, this pool gets renamed to ".mgr" and will break the disk images in the process!
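Before upgrading, it is worth confirming that no disk images remain in the old pool. A hedged sketch, assuming you feed it the JSON array printed by `rbd ls -p device_health_metrics --format json` and that the images follow Proxmox VE's usual `vm-<id>-disk-<n>` / `base-<id>-disk-<n>` naming. The helper names and the sample list are hypothetical.

```python
import json
import re

# Proxmox VE names guest volumes like "vm-100-disk-0" or "base-100-disk-0"
# (templates); only the numeric guest ID after the prefix is relied on here.
GUEST_IMAGE = re.compile(r"^(?:vm|base)-(\d+)-")


def remaining_guests(rbd_ls_json: str) -> dict:
    """Map guest ID -> image names still present in the pool.
    Images not named like a PVE volume are grouped under the key None."""
    guests = {}
    for name in json.loads(rbd_ls_json):
        m = GUEST_IMAGE.match(name)
        key = int(m.group(1)) if m else None
        guests.setdefault(key, []).append(name)
    return guests


def safe_to_upgrade(rbd_ls_json: str) -> bool:
    """True only if the pool contains no RBD images at all."""
    return not json.loads(rbd_ls_json)


if __name__ == "__main__":
    sample = '["vm-100-disk-0", "vm-100-disk-1", "vm-101-disk-0"]'
    print("safe to upgrade:", safe_to_upgrade(sample))
    print("still to move:", remaining_guests(sample))
```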

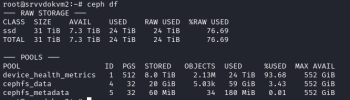

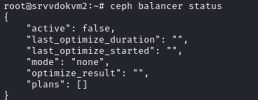

The reason you see the overall space go down is that some OSDs are considerably fuller than others. Check whether the balancer is enabled.

It will make sure that OSDs are used more evenly by moving PGs around in cases where the CRUSH algorithm alone cannot distribute the data evenly across OSDs.

Code:

```
ceph balancer status
```

You should be on Pacific (v16) at the latest. The balancer docs for this version can be found at https://docs.ceph.com/en/pacific/rados/operations/balancer/
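To illustrate why uneven OSDs shrink the reported size: as far as we understand it, a pool's MAX AVAIL in `ceph df` is projected from the OSD that would reach the full ratio first (new data is assumed to land on OSDs in proportion to their CRUSH weight) and is then divided by the replica count, and the Proxmox VE storage total is derived from that figure plus the data already stored. The sketch below is a simplified model of that idea, not Ceph's exact calculation; `Osd` and `max_avail_tb` are hypothetical helpers, and the OSD sizes, usage, 3 replicas and 0.95 full ratio are example values.

```python
from dataclasses import dataclass


@dataclass
class Osd:
    name: str
    size_tb: float   # raw capacity of the OSD
    used_tb: float   # raw space already used on it
    weight: float    # CRUSH weight (normally its size in TiB)


def max_avail_tb(osds, replicas=3, full_ratio=0.95):
    """Simplified MAX AVAIL: writes spread by CRUSH weight, so the OSD that
    reaches full_ratio first caps how much raw data the pool can still take."""
    total_weight = sum(o.weight for o in osds)
    raw_avail = min(
        max(o.size_tb * full_ratio - o.used_tb, 0.0) / (o.weight / total_weight)
        for o in osds
    )
    return raw_avail / replicas  # usable space after replication


# Six hypothetical 4 TB OSDs with 12 TB of raw data used in both cases.
balanced = [Osd(f"osd.{i}", 4.0, 2.0, 4.0) for i in range(6)]
skewed = [Osd(f"osd.{i}", 4.0, used, 4.0)
          for i, used in enumerate([3.0, 1.0, 2.0, 2.0, 2.0, 2.0])]

if __name__ == "__main__":
    print(f"balanced: {max_avail_tb(balanced):.2f} TB available")  # 3.60
    print(f"skewed:   {max_avail_tb(skewed):.2f} TB available")    # 1.60
```

With the same amount of data, shifting 1 TB from one OSD to another cuts the projected free space by more than half in this model, which is the kind of imbalance the balancer is meant to even out.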

What are the repercussions of activating the balancer? How long will it take? I need to consider that these nodes are in production.
