I'd like to ask for your help understanding something. It's always a pleasure to come to this forum.

I've been reading the forum, and most answers simply point to this link: https://docs.ceph.com/en/latest/rados/operations/placement-groups/

But there is something I still do not understand about how the number of PGs is calculated.

The documentation says the default target is 100 PGs per OSD, but I have 225 active PGs in total and I still get this warning:

"1 pools have too many placement groups"

The Ceph web calculator gives me much higher values, and if I assign those, my cluster goes into a warning state.

https://ceph.io/pgcalc/

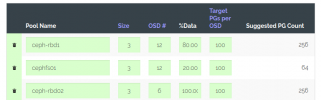

The image shows all the pools. I used 100% for the first two because the same rule applies to them. The other pool has its own rule.
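For reference, here is a rough sketch of the arithmetic the web calculator appears to do for a single pool: (target PGs per OSD × OSD count × %data) / replica size, rounded to a power of two. The rounding rule (take the nearest power of two, but move up if that is more than 25% below the raw value) and the lower bound are my reading of the calculator's notes, so treat them as assumptions. The function name `pgcalc_suggestion` is hypothetical, and the example uses all 18 OSDs because the split of OSDs between the two rules is not stated here.

```python
import math


def pgcalc_suggestion(target_pgs_per_osd, osd_count, data_fraction, size):
    """Suggest a pg_num for one pool (sketch of the pgcalc-style formula)."""
    raw = target_pgs_per_osd * osd_count * data_fraction / size
    # Assumed lower bound: never suggest fewer than osd_count / size PGs (or 1).
    raw = max(raw, osd_count / size, 1)

    lower = 2 ** math.floor(math.log2(raw))  # power of two at or below raw
    upper = lower * 2                        # next power of two above it
    nearest = lower if (raw - lower) <= (upper - raw) else upper

    # Assumed rule: if the nearest power of two is more than 25% below
    # the raw value, use the next higher power of two instead.
    if nearest < raw * 0.75:
        nearest = upper
    return nearest


# 100 PGs per OSD, 18 OSDs, 100% of the data, size 3: raw value is 600
print(pgcalc_suggestion(100, 18, 1.0, 3))  # 512
```

This shows why the calculator's numbers come out far above the 32 and 128 PGs currently set: it sizes each pool for the target PG count per OSD, not for the data the pool holds today.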

My configuration:

I have 3 Ceph nodes.

I have created 2 CRUSH rules, one per disk type.

root@pve01:~# ceph osd crush rule ls

replicated_rule

rule_1

rule_2

I have 18 OSDs, evenly distributed.

root@pve01:~# ceph osd ls | wc -l

18

root@pve01:~# cat /etc/pve/ceph.conf

[global]

auth_client_required = cephx

auth_cluster_required = cephx

auth_service_required = cephx

cluster_network = 192.168.30.3/24

fsid = 80e7521d-57fb-4683-9d67-943eef4a91b5

mon_allow_pool_delete = true

mon_host = 192.168.30.5 192.168.30.3 192.168.30.4

osd_pool_default_min_size = 2

osd_pool_default_size = 3

public_network = 192.168.30.3/24

| Name | Size | Min Size | PG Num | PG Autoscale Mode | Crush Rule Name | %-Used | Used |
|---|---|---|---|---|---|---|---|
| ceph-rbd1 | 3 | 2 | 32 | warn | rule_1 | 0.0120256366208196 | 129596165718 |
| ceph_rbd2 | 3 | 2 | 128 | warn | rule_2 | 0.00215974287129939 | 39375153024 |
| cephfs01_data | 3 | 2 | 32 | on | rule_1 | 0.0104792825877666 | 112755146742 |
| cephfs01_metadata | 3 | 2 | 32 | on | rule_1 | 6.70525651003118e-07 | 7139132 |
| device_health_metrics | 3 | 2 | 1 | on | replicated_rule | 4.79321187185633e-08 | 1360904 |
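To put the pool table next to the "100 PGs per OSD" figure, here is a small sketch that adds up the numbers. The PG counts, sizes, and rule names are copied from the table; the per-OSD figure is only an average over all 18 OSDs and assumes an even spread, which ignores that each rule restricts its pools to one disk type.

```python
# (pg_num, size, crush_rule) per pool, copied from the pool table
pools = {
    "ceph-rbd1": (32, 3, "rule_1"),
    "ceph_rbd2": (128, 3, "rule_2"),
    "cephfs01_data": (32, 3, "rule_1"),
    "cephfs01_metadata": (32, 3, "rule_1"),
    "device_health_metrics": (1, 3, "replicated_rule"),
}
OSD_COUNT = 18  # from `ceph osd ls | wc -l`

# Total PGs across all pools (the "225 active" figure)
total_pgs = sum(pg_num for pg_num, _, _ in pools.values())

# Each PG is stored `size` times, so OSDs carry pg_num * size PG replicas
total_replicas = sum(pg_num * size for pg_num, size, _ in pools.values())

# Average PG replicas per OSD, assuming an even spread over all OSDs
avg_per_osd = total_replicas / OSD_COUNT

# PGs grouped by CRUSH rule
pgs_by_rule = {}
for pg_num, _, rule in pools.values():
    pgs_by_rule[rule] = pgs_by_rule.get(rule, 0) + pg_num

print(total_pgs)       # 225
print(total_replicas)  # 675
print(avg_per_osd)     # 37.5
print(pgs_by_rule)     # {'rule_1': 96, 'rule_2': 128, 'replicated_rule': 1}
```

So 225 is the cluster-wide PG total, not a per-OSD count; averaged over 18 OSDs it is 37.5 PG replicas per OSD, which is below the 100 mentioned in the documentation.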
