Hi everyone,

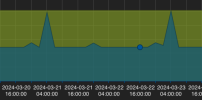

We have been using Ceph for a few days now. The VMs are running, but Ceph seems to be "offline": the overview shows "got timeout (500)" on every node.

```
# pveceph status
command 'ceph -s' failed: got timeout

# ceph -s
2024-03-22T07:58:06.965+0100 736240a846c0 0 monclient(hunting): authenticate timed out after 300
[errno 110] RADOS timed out (error connecting to the cluster)
```

All ceph mon / mgr / osd services are running, but some report the following:

```
22 07:54:48 host04 ceph-mon[723835]: 2024-03-22T07:54:48.727+0100 73dafe6356c0 -1 mon.host04@1(probing) e8 get_health_metrics reporting 1642 slow ops, oldest is log(7 entries from seq 66 at 2024-03-21T05:06:34.539449+0100)
Mar 22 07:54:53 host04 ceph-mon[723835]: 2024-03-22T07:54:53.727+0100 73dafe6356c0 -1 mon.host04@1(probing) e8 get_health_metrics reporting 1651 slow ops, oldest is log(7 entries from seq 66 at 2024-03-21T05:06:34.539449+0100)
Mar 22 07:54:58 host04 ceph-mon[723835]: 2024-03-22T07:54:58.727+0100 73dafe6356c0 -1 mon.host04@1(probing) e8 get_health_metrics reporting 1661 slow ops, oldest is log(7 entries from seq 66 at 2024-03-21T05:06:34.539449+0100)
Mar 22 07:55:03 host04 ceph-mon[723835]: 2024-03-22T07:55:03.727+0100 73dafe6356c0 -1 mon.host04@1(probing) e8 get_health_metrics reporting 1670 slow ops, oldest is log(7 entries from seq 66 at 2024-03-21T05:06:34.539449+0100)
Mar 22 07:55:08 host04 ceph-mon[723835]: 2024-03-22T07:55:08.727+0100 73dafe6356c0 -1 mon.host04@1(probing) e8 get_health_metrics reporting 1676 slow ops, oldest is log(7 entries from seq 66 at 2024-03-21T05:06:34.539449+0100)
Mar 22 07:55:13 host04 ceph-mon[723835]: 2024-03-22T07:55:13.728+0100 73dafe6356c0 -1 mon.host04@1(probing) e8 get_health_metrics reporting 1688 slow ops, oldest is log(7 entries from seq 66 at 2024-03-21T05:06:34.539449+0100)
Mar 22 07:55:18 host04 ceph-mon[723835]: 2024-03-22T07:55:18.728+0100 73dafe6356c0 -1 mon.host04@1(probing) e8 get_health_metrics reporting 1705 slow ops, oldest is log(7 entries from seq 66 at 2024-03-21T05:06:34.539449+0100)
Mar 22 07:55:23 host04 ceph-mon[723835]: 2024-03-22T07:55:23.728+0100 73dafe6356c0 -1 mon.host04@1(probing) e8 get_health_metrics reporting 1708 slow ops, oldest is log(7 entries from seq 66 at 2024-03-21T05:06:34.539449+0100)
Mar 22 07:55:28 host04 ceph-mon[723835]: 2024-03-22T07:55:28.728+0100 73dafe6356c0 -1 mon.host04@1(probing) e8 get_health_metrics reporting 1718 slow ops, oldest is log(7 entries from seq 66 at 2024-03-21T05:06:34.539449+0100)
Mar 22 07:55:33 host04 ceph-mon[723835]: 2024-03-22T07:55:33.728+0100 73dafe6356c0 -1 mon.host04@1(probing) e8 get_health_metrics reporting 1731 slow ops, oldest is log(7 entries from seq 66 at 2024-03-21T05:06:34.539449+0100)
```

PVE Versions:

```
proxmox-ve: 8.1.0 (running kernel: 6.5.13-1-pve)
pve-manager: 8.1.4 (running version: 8.1.4/ec5affc9e41f1d79)
proxmox-kernel-helper: 8.1.0
proxmox-kernel-6.5.13-1-pve-signed: 6.5.13-1
proxmox-kernel-6.5: 6.5.13-1
proxmox-kernel-6.5.11-8-pve-signed: 6.5.11-8
ceph: 18.2.1-pve2
ceph-fuse: 18.2.1-pve2
corosync: 3.1.7-pve3
criu: 3.17.1-2
glusterfs-client: 10.3-5
ifupdown2: 3.2.0-1+pmx8
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-4
libknet1: 1.28-pve1
libproxmox-acme-perl: 1.5.0
libproxmox-backup-qemu0: 1.4.1
libproxmox-rs-perl: 0.3.3
libpve-access-control: 8.1.2
libpve-apiclient-perl: 3.3.1
libpve-common-perl: 8.1.1
libpve-guest-common-perl: 5.0.6
libpve-http-server-perl: 5.0.5
libpve-network-perl: 0.9.5
libpve-rs-perl: 0.8.8
libpve-storage-perl: 8.1.0
libspice-server1: 0.15.1-1
lvm2: 2.03.16-2
lxc-pve: 5.0.2-4
lxcfs: 5.0.3-pve4
novnc-pve: 1.4.0-3
proxmox-backup-client: 3.1.4-1
proxmox-backup-file-restore: 3.1.4-1
proxmox-kernel-helper: 8.1.0
proxmox-mail-forward: 0.2.3
proxmox-mini-journalreader: 1.4.0
proxmox-offline-mirror-helper: 0.6.5
proxmox-widget-toolkit: 4.1.4
pve-cluster: 8.0.5
pve-container: 5.0.8
pve-docs: 8.1.4
pve-edk2-firmware: 4.2023.08-4
pve-firewall: 5.0.3
pve-firmware: 3.9-2
pve-ha-manager: 4.0.3
pve-i18n: 3.2.1
pve-qemu-kvm: 8.1.5-3
pve-xtermjs: 5.3.0-3
qemu-server: 8.0.10
smartmontools: 7.3-pve1
spiceterm: 3.3.0
swtpm: 0.8.0+pve1
vncterm: 1.8.0
zfsutils-linux: 2.2.2-pve2
```

Any idea how to fix it?
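The ceph-mon journal lines quoted above follow a regular pattern, `mon.<id>@<rank>(<state>)`, followed by a slow-op count. As a hypothetical helper (not part of Ceph or Proxmox VE), a short Python sketch can pull the monitor state and the slow-op trend out of such an excerpt. The regex is an assumption derived only from the lines in this post, not from any documented Ceph log format.

```python
import re
from dataclasses import dataclass

# Hypothetical pattern, inferred only from the sample lines in this post, e.g.
# "mon.host04@1(probing) e8 get_health_metrics reporting 1642 slow ops, ..."
MON_LINE = re.compile(
    r"mon\.(?P<name>[^@\s]+)@(?P<rank>\d+)\((?P<state>[a-z]+)\)"
    r".*?reporting (?P<slow_ops>\d+) slow ops"
)


@dataclass
class MonSample:
    name: str      # monitor ID, e.g. "host04"
    rank: int      # monitor rank from the "@N" suffix
    state: str     # monitor state in parentheses, e.g. "probing"
    slow_ops: int  # slow-op count reported on that line


def parse_mon_lines(lines):
    """Return one MonSample per line matching MON_LINE; other lines are skipped."""
    samples = []
    for line in lines:
        m = MON_LINE.search(line)
        if m:
            samples.append(
                MonSample(m["name"], int(m["rank"]), m["state"], int(m["slow_ops"]))
            )
    return samples


def summarize(samples):
    """Return the sorted distinct states and the slow-op growth from first to last sample."""
    if not samples:
        return [], 0
    states = sorted({s.state for s in samples})
    return states, samples[-1].slow_ops - samples[0].slow_ops


if __name__ == "__main__":
    # Two lines from the journal excerpt in this post, shortened after "slow ops".
    sample_log = [
        "Mar 22 07:54:53 host04 ceph-mon[723835]: 2024-03-22T07:54:53.727+0100 "
        "73dafe6356c0 -1 mon.host04@1(probing) e8 get_health_metrics reporting 1651 slow ops",
        "Mar 22 07:55:33 host04 ceph-mon[723835]: 2024-03-22T07:55:33.728+0100 "
        "73dafe6356c0 -1 mon.host04@1(probing) e8 get_health_metrics reporting 1731 slow ops",
    ]
    print(summarize(parse_mon_lines(sample_log)))  # (['probing'], 80)
```

Over the full excerpt, mon.host04 stays in the `probing` state the whole time while its slow-op count rises from 1642 to 1731. A monitor in `probing` is still looking for its peers and is not part of a quorum.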
